Adaptive RAG in ZBrain: Architecting intelligent, context-aware retrieval for enterprise AI

Large Language Models (LLMs) like GPT-4 have impressive capabilities but are limited by static, pre-trained knowledge. They cannot natively handle information beyond their training cutoff or specialized domain facts. Retrieval-Augmented Generation (RAG) addresses this limitation by fetching relevant external information at query time and supplying it to the LLM as context. In a typical RAG pipeline, when a user asks a question, the system searches a knowledge source (e.g., documents, databases, or the web) for supporting data and then appends those results to the prompt for the LLM to generate a grounded answer. This approach enhances factual accuracy and keeps responses up-to-date without requiring retraining of the model, thereby effectively mitigating knowledge gaps and reducing hallucinations.

In conventional implementations, this retrieve-then-generate process is static. The system performs a retrieval step for every query, regardless of its simplicity or the extent to which the model’s internal knowledge covers it. For example, even a straightforward question like “What is the capital of France?” would trigger a database or vector search, despite the fact that most LLMs already know the answer. This one-size-fits-all strategy can waste resources on trivial queries and add unnecessary latency. Moreover, always injecting retrieved text as context carries the risk of distracting or confusing the LLM if the fetched documents are irrelevant or noisy. Paradoxically, the very mechanism meant to enhance answers can degrade them when misused.

This is where the need for adaptive RAG becomes evident.

Real-world queries vary greatly in difficulty and information needs. Constantly performing retrieval regardless of necessity is inefficient and sometimes counterproductive. Simple questions that the LLM can answer from memory incur needless overhead in searching and processing external data. Meanwhile, truly complex or novel questions often require a more sophisticated approach than a blind search – for instance, breaking the query into subparts or iteratively refining the search terms. These observations have sparked interest in making RAG adaptive, i.e., dynamically deciding when and how to retrieve additional information on a per-query basis, rather than always following a fixed retrieval routine. An adaptive system aims to “triage” the user’s query upfront: if the question appears answerable based on the model’s own knowledge, it skips retrieval (saving time and cost); if not, it engages the full retrieval pipeline. This selective retrieval can maintain accuracy while eliminating redundant work, thereby improving efficiency.

Another motivation for adaptivity is to avoid introducing irrelevant context. If a query doesn’t actually need external info, performing a search might return loosely related text that, when fed into the prompt, could confuse the model or lead to tangential answers. By only retrieving when warranted, an adaptive RAG system minimizes the chances of adding irrelevant or harmful context.

A special case where retrieval is often necessary is when queries involve time-dependent information. A knowledge cutoff limits LLMs – they lack information about events or data that occurred after a certain date. Questions like “What are the latest COVID-19 travel restrictions?” or “Who won the Best Actor Oscar in 2025?” refer to facts likely unavailable in the model’s training data. In these cases, the system must fetch up-to-date information to provide accurate answers. Even if an LLM knew a lot during training, that knowledge becomes outdated as the world moves on. Time-sensitive queries (those about recent news, current statistics, “today’s” events, etc.) highlight the importance of adaptive retrieval. A robust RAG system should detect when a question is asking about something current or evolving and then route the query to a real-time information source (like a news feed or web search) rather than relying on stale internal knowledge. In summary, adaptivity isn’t just about query difficulty – it’s also about temporal relevance, ensuring the AI’s answers remain current. Techniques that imbue the model with time awareness (for example, providing the current date or contextual cues about recency) can help it recognize when to pull in fresh data.

This article provides an in-depth exploration of Adaptive Retrieval-Augmented Generation (ARAG). We’ll discuss the shortcomings of traditional RAG, define what adaptive RAG entails, examine methods to implement adaptivity (including special attention to time-aware retrieval), and illustrate how these concepts come together in practice – particularly in the context of ZBrain Builder, an enterprise AI platform that exemplifies agentic and adaptive RAG principles.

- Retrieval-augmented generation (RAG): An overview

- Challenges with traditional RAG

- What is adaptive retrieval-augmented generation (ARAG)?

- Benefits of adaptive RAG

- Techniques for RAG adaptivity

- Incorporating time awareness (TA) in retrieval

- What is time-aware adaptive retrieval (TA-ARE)?

- Implementing adaptive RAG in practice

- ZBrain’s approach and perspective

Retrieval-Augmented Generation (RAG): An overview

RAG is a framework that integrates LLMs with external knowledge sources to produce more informed answers. Instead of relying solely on the LLM’s fixed parametric memory, a RAG system actively retrieves relevant documents or data based on the user’s query and feeds that information into the generation process. The typical RAG workflow has two main stages:

- Retrieval: The system takes the user’s query and searches across one or more knowledge bases for supporting information. This could involve a vector similarity search in an internal document index, a keyword search on the web, a database lookup, or other domain-specific queries. The result is a set of relevant texts (passages, articles, records, etc.), often called context documents.

- Generation: The retrieved context is appended to the original question (usually in a prompt template), and the combined text is passed to the LLM. The LLM then generates an answer that draws upon the provided context as needed. Essentially, the model is prompted with, “Here is some reference information; now use it to answer the question.”
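The two stages above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: `retrieve` is a toy keyword-overlap search standing in for a vector or web search, and `llm` is a hypothetical callable that maps a prompt string to a completion. None of these names come from a specific library.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> set:
    """Lowercased word set; a crude stand-in for real text analysis."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Stage 1: rank documents by word overlap with the query, keep the top k."""
    q_terms = tokenize(query)
    scored = [(len(q_terms & tokenize(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(question: str, context: List[str]) -> str:
    """Append the retrieved context to the question in a simple template."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Here is some reference information:\n"
        f"{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


def rag_answer(question: str, corpus: List[str],
               llm: Callable[[str], str], k: int = 2) -> str:
    """Stage 2: pass question plus context to the (hypothetical) LLM callable."""
    return llm(build_prompt(question, retrieve(question, corpus, k)))
```

Note that `rag_answer` always calls `retrieve`, which is exactly the static behavior that adaptive RAG sets out to change.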

By augmenting the prompt with external information, RAG enables the model to produce answers that are both up-to-date and grounded in facts. This approach addresses several challenges: it fills knowledge gaps on topics the model has never encountered during training, it provides authoritative sources that reduce open-ended guessing, and it can significantly reduce hallucinations by tethering the generation to real reference text. For example, a question about a niche scientific discovery in 2025 can be answered by retrieving the relevant research article and letting the LLM summarize or explain it, which would be impossible for a standalone model trained only on data up to 2021.

However, traditional RAG, as originally conceived, is not selective about retrieval. Classic RAG pipelines perform the retrieval step for every single query, regardless of whether the query actually needs external input. The design assumption was that more information is always better – if the model already knows the answer, extra context won’t hurt, and if it doesn’t, the context will help. In practice, this assumption doesn’t always hold, leading to the efficiency and quality issues discussed next.

Challenges with traditional RAG

Although RAG enhances LLM performance in many scenarios, the naïve use of retrieval for every query (sometimes referred to as “blind retrieval”) has notable drawbacks. Key challenges include:

Blind retrieval for all queries

Standard RAG indiscriminately fetches documents for every question, treating a simple factoid and a complex analytical query the same way. This can lead to unnecessary steps for easy queries. Research has noted that many questions can be answered using just the LLM’s internal knowledge, yet traditional RAG still fetches external documents unnecessarily, wasting time and computing resources. Always retrieving also risks pulling in irrelevant data that doesn’t actually answer the question. Since the pipeline isn’t aware of whether the LLM could handle it alone, it may add noise to an otherwise straightforward query. In short, indiscriminate retrieval can be inefficient and sometimes counterproductive.

Outdated knowledge and knowledge gaps

LLMs have incomplete knowledge of recent events or highly specific “long-tail” facts. That’s precisely why RAG exists – but even so, a naive RAG system might fail if the retrieval component isn’t intelligent. If the user asks about something after the LLM’s training cutoff (e.g., a news event from last month), a standalone LLM has no way of knowing the answer. Similarly, if the query is about an obscure detail (say, a minor character in a niche novel), the answer might not be in the model’s memory. Traditional RAG will attempt to retrieve information for such queries, but it might not know how or where to look effectively, especially for very new or specialized topics.

Sometimes the relevant information isn’t in the indexed knowledge base at all. Even when it is, a static RAG approach can still fail to produce a correct answer if it searches the wrong knowledge source or uses poor search terms, because a fixed pipeline has no way to change its strategy. Furthermore, LLMs lack “new world” knowledge by default and struggle with long-tail knowledge that wasn’t prominent in their training data. Without a clever retrieval strategy, RAG won’t fully overcome those gaps.

Inference cost and latency

Performing retrieval and processing retrieved documents incurs overhead. Each query in a RAG system might involve multiple database lookups or API calls, followed by feeding a larger prompt (the question + retrieved text) into the LLM for inference. This added work increases latency – the user waits longer for answers – and compute cost (especially if calling external APIs or using a more expensive model to handle longer prompts). In enterprise settings, this can translate to higher operational costs and slower response times for end-users. Many simple queries don’t justify that overhead. In a customer service bot, for instance, a question like “What is your return policy?” might trigger a retrieval from a policy document every single time, even though the answer is always the same and could be handled directly from a cached answer or the model’s knowledge. Over thousands of queries, that inefficiency adds up.

In summary, while RAG is powerful, a non-adaptive implementation that treats all queries uniformly can lead to wasted effort, higher costs, and, in some cases, even worse answers (if irrelevant context distracts the LLM). These challenges set the stage for Adaptive RAG, which seeks to make the retrieval process smarter and more situation-aware.

What is Adaptive Retrieval-Augmented Generation (ARAG)?

Adaptive Retrieval-Augmented Generation refers to a class of techniques and systems that dynamically decide whether or not to retrieve external information for a given query – and if so, how much retrieval to perform. In essence, an ARAG system performs an intelligent triage on each incoming question. The system evaluates the query (and sometimes the LLM’s initial response or confidence) to judge if the LLM likely “knows” the answer by itself. If the model’s built-in knowledge is deemed sufficient, the system forgoes retrieval and lets the LLM answer directly using its parametric memory. If the question appears to require additional knowledge – for example, it involves a recent event or a very specific fact – the system engages the retrieval component and provides the LLM with external context before responding. In more advanced setups, the retrieval can even be scaled in complexity: perhaps a single quick lookup for a moderately hard question, versus multiple iterative lookups (multi-hop reasoning) for a very complex query.

In other words, adaptive RAG introduces a conditional or selective step into the pipeline: the system asks, “Do I need to retrieve, or can I answer directly?” This contrasts with traditional RAG’s unconditional retrieval-every-time approach.

Benefits of Adaptive RAG

By matching the effort to the problem complexity, adaptive RAG offers several advantages:

- Efficiency: Easy queries get handled immediately by the LLM alone, avoiding the time and expense of needless retrieval. This can significantly speed up responses for trivial questions and reduce API calls or database load. Overall, compute and token usage are better aligned with the query’s needs, saving resources in aggregate.

- Focused retrieval for challenging queries: For difficult or knowledge-intensive questions, the system proceeds with retrieval to ensure a complete and accurate response – in fact, it can perform additional retrieval steps if needed. Adaptive RAG ensures that complex queries still invoke the full power of RAG (and even multi-step search) so that accuracy is maintained or improved relative to a static single-step approach. Essentially, it avoids under-searching on the hard cases while avoiding over-searching on the easy cases.

- Reduced noise: By not retrieving when it’s unnecessary, the system minimizes the injection of irrelevant context. The LLM’s prompt remains concise and focused for queries it can handle, which can lead to clearer, more direct answers. This also reduces token consumption, as you’re not cluttering the prompt with unnecessary documents.

- Balanced accuracy and cost: Studies have found that an adaptive approach can achieve accuracy on par with always-retrieve systems, but with far fewer retrieval calls. For instance, one hybrid prompting method was shown to answer questions as well as a static RAG baseline while triggering retrieval only a fraction of the time. This translates to a better cost-performance trade-off: you pay for retrieval and longer prompts only when they’re likely to pay dividends in answer quality.

- Dynamic scaling: Adaptive RAG enhances system scalability across diverse query types. It can handle a mix of queries (simple factual ones, complex analytical ones, time-sensitive ones) without a one-size-fits-all latency. Users asking easy questions get snappy answers, while those with complicated requests implicitly allow the system to take a bit longer and do extra work to ensure a correct answer. This dynamic adjustment creates a more responsive and satisfying overall user experience.

In summary, Adaptive RAG tailors its strategy to query difficulty, skipping retrieval for trivial factoids, performing a single lookup for moderately complex queries, and executing multi-step retrieval for multipart or challenging questions. Being selective prevents unnecessary work: easy queries don’t waste time searching databases, while difficult ones get the full pipeline. Overall, this adaptive approach enhances efficiency without compromising the quality of the answers.

Techniques for RAG adaptivity

Adaptive RAG systems must intelligently decide whether a query warrants external retrieval. Three common techniques enable this decision-making:

Threshold-based calibration

A simple method involves generating an initial response without retrieval and evaluating model confidence—via token probabilities or prompt-based self-assessment. If confidence falls below a predefined threshold, the system triggers retrieval. Alternatively, thresholds can be set based on question complexity (e.g., keyword count, syntactic patterns). While this is easy to implement, calibrating the threshold across domains and models is challenging; overly strict settings can miss needed retrieval, while loose ones reduce efficiency.
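As a rough sketch of the token-probability variant, the snippet below scores a draft answer by the geometric mean of its token probabilities and triggers retrieval when that score falls below a threshold. It assumes the caller already has per-token log-probabilities for the draft (many LLM APIs can return them). The function names and the default threshold of 0.7 are illustrative assumptions, not recommended values.

```python
import math
from typing import List


def mean_token_confidence(token_logprobs: List[float]) -> float:
    """Geometric-mean token probability of a draft answer, in [0, 1]."""
    if not token_logprobs:
        return 0.0  # no draft at all counts as zero confidence
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def needs_retrieval(token_logprobs: List[float], threshold: float = 0.7) -> bool:
    """Trigger retrieval when the draft's confidence is below the threshold.

    The default threshold is a placeholder; in practice it has to be
    calibrated per model and per domain.
    """
    return mean_token_confidence(token_logprobs) < threshold
```

Raising the threshold makes the gate retrieve more often (safer, but slower and costlier); lowering it skips retrieval more often, which is the calibration trade-off described above.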

Gatekeeper classifier models

Instead of static thresholds, trained classifiers—often lightweight models or small LLMs—predict retrieval needs. These models categorize queries (e.g., “simple,” “moderate,” “complex”) and guide whether to skip, perform single-step, or multi-hop retrieval. Classifiers leverage features such as query length, named entities, or temporal indicators. This method offers greater nuance than thresholds but requires ongoing training and tuning as data patterns evolve.
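To make the idea concrete, here is a deliberately simple gatekeeper sketch. It extracts the kinds of features mentioned above (query length, a crude proxy for named entities, temporal indicators) and maps a label to a retrieval strategy. The hand-set scoring rules stand in for a trained classifier, and every name, regular expression, and cutoff is a hypothetical placeholder.

```python
import re
from dataclasses import dataclass

TEMPORAL = re.compile(
    r"\b(today|latest|current|recent|this (?:week|month|year))\b", re.IGNORECASE
)
# Label -> retrieval strategy, mirroring the skip / single-step / multi-hop split.
ROUTES = {"simple": "skip_retrieval", "moderate": "single_step", "complex": "multi_hop"}


@dataclass
class QueryFeatures:
    n_tokens: int
    n_entities: int
    has_temporal_cue: bool


def extract_features(query: str) -> QueryFeatures:
    tokens = query.split()
    # Crude named-entity proxy: capitalized tokens after the first word.
    entities = [t for t in tokens[1:] if t[:1].isupper()]
    return QueryFeatures(len(tokens), len(entities), bool(TEMPORAL.search(query)))


def classify(features: QueryFeatures) -> str:
    """Hand-set rules standing in for a trained classifier's prediction."""
    score = 0
    if features.n_tokens > 12:
        score += 1
    if features.n_entities >= 2:
        score += 1
    if features.has_temporal_cue:
        score += 1
    if score == 0:
        return "simple"
    return "moderate" if score == 1 else "complex"


def route(query: str) -> str:
    return ROUTES[classify(extract_features(query))]
```

In a real deployment, `classify` would be replaced by a model trained on labeled queries; the surrounding feature extraction and routing table would stay much the same.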

LLM-based prompting

Another emerging approach is to prompt the LLM itself to assess if it needs external information. Prompts like: “Today is [DATE]. Should you retrieve information to answer this question: [QUERY]?” elicit “Yes” or “No” responses. If “Yes,” the system retrieves and re-prompts; if “No,” it proceeds with generation. Variants include asking the LLM to explain its reasoning or flag uncertainty, which helps reduce overconfidence. These zero- or few-shot methods require no training but heavily depend on the quality of the prompt and model consistency.
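The explain-then-decide variant could look roughly like the sketch below. The prompt wording, the "Decision:" line format, and the `llm` callable are assumptions made for illustration. The one deliberate design choice is that an unparseable reply defaults to retrieval, on the assumption that an extra lookup is cheaper than a wrong answer.

```python
import re
from datetime import date
from typing import Callable, Optional

GATE_PROMPT = (
    "Today is {today}. Should you retrieve information to answer this question: "
    "{query}\n"
    "Explain briefly, then finish with a line 'Decision: Yes' or 'Decision: No'."
)


def parse_decision(reply: str) -> bool:
    """Return True if retrieval is requested; default to True when unclear."""
    verdicts = re.findall(r"decision:\s*(yes|no)\b", reply, flags=re.IGNORECASE)
    if not verdicts:
        return True  # conservative fallback (an assumption, not a rule)
    return verdicts[-1].lower() == "yes"  # the last stated verdict wins


def llm_wants_retrieval(query: str, llm: Callable[[str], str],
                        today: Optional[date] = None) -> bool:
    """Ask the (hypothetical) LLM callable whether it needs external information."""
    today = today or date.today()
    reply = llm(GATE_PROMPT.format(today=today.isoformat(), query=query))
    return parse_decision(reply)
```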

Each technique has strengths and trade-offs. Many advanced systems combine them—e.g., using prompt-based gating with fallback thresholds—to maximize precision. All aim to reduce unnecessary retrieval while preserving answer quality.

Next, we examine how adaptive systems manage time-sensitive queries using temporal awareness.

Incorporating Time Awareness (TA) in retrieval

In adaptive RAG, it’s not just how hard a question is that matters, but also what kind of information it needs. Temporal factors play a crucial role. A system might determine that a query is easy in a general sense, but if it asks for current information, even a trivial question could necessitate retrieval (because the model’s training knowledge is outdated).

Let’s explore the importance of time awareness:

Why time matters

Time-sensitive knowledge is any information that can change with time – news events, weather, new research findings, product releases, etc. LLMs are typically trained on data up to a certain cutoff date (e.g., the original GPT-4 was trained on data up to roughly September 2021). The model has not seen anything after that date. So when a user’s query deals with “the latest” on something or explicitly mentions a date or time frame beyond the model’s knowledge cutoff, the LLM’s otherwise strong abilities won’t help – it simply doesn’t have that information. For example, if today is 2025-11-04 and someone asks, “What happened at the UN climate summit in October 2025?” – a standard pretrained LLM without external augmentation will not know, because that event occurred after its training.

From an enterprise perspective, time matters for internal data as well. Imagine an AI assistant for a company’s knowledge base: if it’s answering questions about policies or data that get updated regularly (say, quarterly financial figures, or an HR policy updated last week), a time-aware approach is needed to ensure the assistant fetches the most recent version of the information, rather than relying on an old cached answer.

In short, time creates knowledge gaps: as time passes, an LLM’s once-correct knowledge can turn incomplete or incorrect. A robust RAG system needs to recognize queries that require timely information and adjust its retrieval strategy accordingly. One key advantage of RAG is precisely that it enables access to the latest information for generating reliable outputs – but to leverage that, the system must know when to tap into fresh data.

Identifying time-sensitive queries

How can a system detect that a query is time-sensitive? There are a few heuristic cues and strategies:

- Explicit date or time references: Queries that contain dates (e.g., “in 2025, …”, “last year”, “this month”) or words like “today”, “current”, “latest”, “recent”, “new” are strong indicators that the user expects up-to-date info. For example, “What is today’s weather in Paris?” clearly cannot be answered from a static knowledge base from last year. A query like “latest news on X” or “recent developments in AI” similarly implies that the user wants the most recent information. An adaptive system can employ simple keyword checks: if the query contains any of these terms, mark it as requiring a real-time lookup. In the research literature, these are sometimes referred to as temporal query indicators.

- Known evolving topics: Some topics are inherently time-linked, even if not explicitly stated. For instance, queries about COVID-19 policies, sports scores, tech product releases, etc., are likely asking for current data. If the system has domain awareness, it might flag queries in certain categories as time-sensitive. For example, a question like “What is the price of Bitcoin?” doesn’t mention a date, but the price changes constantly – a savvy system would treat that as time-dependent by default.

- Outdated answers in memory: Another approach is to let the LLM attempt an answer from memory and check whether the draft hints that it might be outdated. If the model qualifies its answer with a date (“As of 2021, …”) or expresses uncertainty about recency (“I don’t have the latest data, but…”), the orchestration layer can catch that and decide to retrieve a fresh answer. This is a bit more advanced and not foolproof, but it’s a way for the system to notice that its internal knowledge might be out-of-date.

In practice, combining these cues is most effective. By detecting phrases that hint at time sensitivity, the system’s initial query analysis can tag the query as requiring live retrieval. This might route the query to a different tool (e.g., a web search API) or a special index of recent documents.
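A minimal sketch of the first two cues might look like this. The keyword list, the topic list, and the `cutoff_year` parameter are illustrative assumptions and would need tuning for a real deployment. The word “new” is deliberately left out of the pattern because it matches too many unrelated phrases (“New York”, for example).

```python
import re

TEMPORAL_TERMS = re.compile(
    r"\b(today|tonight|current(?:ly)?|latest|recent(?:ly)?|newest|"
    r"this (?:week|month|year)|last (?:week|month|year))\b",
    re.IGNORECASE,
)
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")
# Hypothetical list of topics treated as time-dependent by default.
EVOLVING_TOPICS = ("price of bitcoin", "stock price", "weather", "exchange rate")


def is_time_sensitive(query: str, cutoff_year: int) -> bool:
    """Flag queries that likely need fresh data.

    cutoff_year is the (assumed) year of the model's knowledge cutoff; years at
    or after it are treated as potentially unseen.
    """
    if TEMPORAL_TERMS.search(query):
        return True  # explicit temporal wording
    if any(int(y) >= cutoff_year for y in YEAR.findall(query)):
        return True  # explicit year at or beyond the cutoff
    lowered = query.lower()
    return any(topic in lowered for topic in EVOLVING_TOPICS)
```

A query flagged this way could then be routed to a web search tool or a recent-documents index instead of the default knowledge base.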

Temporal adaptation in prompts

Beyond simply deciding whether to retrieve up-to-date information, time awareness can also be incorporated into the LLM’s reasoning process through prompting. One simple yet powerful technique is to explicitly inform the LLM of the current date or context, allowing it to reason about what might be outside its knowledge. For example, a prompt might start with: “Today’s date is November 4, 2025.” Then, when the model sees a question, it can internally compare the dates. If the question asks about something in 2025, the model knows that’s “today” or recent, and that it likely has no training knowledge of it, thus it should seek external info. This is exactly what the TA-ARE method does (a prompting strategy for adaptive retrieval): prefixing the prompt with “Today is [date]” nudges the model to realize which questions involve post-training events.

Another prompting strategy is to include a few-shot examples highlighting time awareness. For instance, you might give the model an example QA pair: “Q: Who won the World Cup in 2026? A: [Yes]” (meaning yes, retrieval is needed, because 2026 is in the future relative to training) and another: “Q: Who won the World Cup in 2010? A: [No]” (meaning no retrieval needed, as that info is likely in training data). By providing demonstrations of when to use external resources and when not to, the model can learn the pattern.

Time awareness can also influence how retrieval is done. A time-adaptive system might automatically include a date filter or sort in its search. For example, if the query is “What are the newest features of Product Z?”, the retrieval component could prioritize documents from the past year. Some search engines allow queries like “Product Z features 2025” to ensure that results are fresh. In an enterprise setting, the system might search the latest version of documentation or recent meeting notes rather than older archives.
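On the retrieval side, a recency filter can be as simple as the following sketch. The `Doc` type, its `updated` field, and the one-year default window are assumptions for illustration; a real index would typically apply the same filter through its own query syntax or metadata filters.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Doc:
    text: str
    updated: date  # last-modified date stored alongside the document


def filter_recent(docs: List[Doc], today: date, max_age_days: int = 365) -> List[Doc]:
    """Keep documents updated within the window, newest first."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = [d for d in docs if d.updated >= cutoff]
    return sorted(fresh, key=lambda d: d.updated, reverse=True)
```

For the “newest features of Product Z” example, applying `filter_recent` to the candidate set before ranking would drop older archives and surface the latest documentation first.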

In summary, handling time sensitivity in adaptive RAG involves: (1) identifying queries that likely need fresh data (through cues or model introspection), and (2) using strategies (prompting or specialized retrieval queries) to ensure the model gets the timely information it needs. By doing so, the system remains aware of its temporal knowledge boundary – it “knows what it doesn’t know” with respect to time and compensates via retrieval. Next, we’ll take a deeper dive into the TA-ARE approach as a concrete example of time-aware adaptive retrieval in action.

What is Time-Aware Adaptive Retrieval (TA-ARE)?

To illustrate adaptive RAG concepts, consider Time-Aware Adaptive Retrieval (TA-ARE), a method introduced by Zhang et al. in 2024. TA-ARE specifically aims to help an LLM decide when to retrieve information by making it aware of time and knowledge limitations, all through prompting (no extra training). Let’s break down the key aspects:

What is TA-ARE?

TA-ARE is essentially a prompting strategy for adaptive retrieval. It augments the LLM’s prompt with temporal context and a few examples, allowing the model to output a decision on whether external knowledge is needed for a given question. In other words, TA-ARE turns the retrieval decision into a task that the LLM can perform on the fly. The output is binary: “[Yes]” or “[No]” to the question “Do I need to retrieve info to answer this?”. By being “time-aware,” it explicitly reminds the model of today’s date, which is a cue to help it realize if a question is asking about something beyond its training data.

The strength of TA-ARE is its simplicity: no separate classifier or threshold is required, no fine-tuning of the model – just a cleverly designed prompt. Despite this simplicity, it was found to be effective at improving adaptive retrieval decisions.

How TA-ARE works

The core of TA-ARE is the prompt template. It looks roughly like this (simplified):

Today is {current_date}.

Given a question, determine whether you need to retrieve external resources, such as real-time search engines, Wikipedia, or databases, to answer the question correctly. Only answer "[Yes]" or "[No]".

Here are some examples:

1. Q: {Example question 1 that needs retrieval}

A: [Yes]

2. Q: {Example question 2 that needs retrieval}

A: [Yes]

3. Q: {Example question 3 that does NOT need retrieval}

A: [No]

4. Q: {Example question 4 that does NOT need retrieval}

A: [No]

Question: {User’s question}

Answer:

Let’s unpack this:

- The first line, “Today is {current_date}”, explicitly injects the current date. If today’s date is, say, Nov 4, 2025, the model now knows any question referencing events after 2023 (for example) might be something it needs to look up.

- The instruction then clearly tells the model its task: to decide if external resources are needed, output only “[Yes]” or “[No]”.

- Then we have examples. TA-ARE uses four demonstration examples in the prompt. Two are “[Yes]” examples (questions where retrieval was necessary), and two are “[No]” examples (questions answerable by common knowledge). The clever part is how the authors select these examples: for the “[Yes]” cases, they choose questions from their test set that the model previously got wrong when it didn’t retrieve, implying that those are cases where retrieval would have been helpful. This automatically gives strong hints of the kind of scenarios where the model lacks knowledge. The “[No]” cases are manually created simple questions (or ones the model can easily answer).

- Finally, the user’s actual question is posed, and the model is expected to output “[Yes]” or “[No]” accordingly.

When this prompt is run, if the model answers “[Yes]”, the system will then proceed to perform a retrieval (e.g., conduct a web search or query the knowledge base) and feed the resulting text into another prompt to obtain the final answer. If the model answers “[No]”, the system trusts the model to answer directly without retrieval.
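The template and the two-step flow around it can be assembled as in the sketch below. The instruction text follows the simplified template shown earlier; the function names, the `llm` and `retrieve` callables, and the format of the second (answering) prompt are assumptions for illustration, not the authors’ reference implementation.

```python
from datetime import date
from typing import Callable, List

INSTRUCTION = (
    "Given a question, determine whether you need to retrieve external resources, "
    "such as real-time search engines, Wikipedia, or databases, to answer the "
    'question correctly. Only answer "[Yes]" or "[No]".'
)


def build_ta_are_prompt(question: str, yes_examples: List[str],
                        no_examples: List[str], today: date) -> str:
    """Assemble the date line, instruction, demonstrations, and user question."""
    demos = [(q, "[Yes]") for q in yes_examples] + [(q, "[No]") for q in no_examples]
    parts = [f"Today is {today.isoformat()}.", INSTRUCTION, "Here are some examples:"]
    for i, (q, label) in enumerate(demos, start=1):
        parts.append(f"{i}. Q: {q}\nA: {label}")
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def answer_with_gate(question: str, llm: Callable[[str], str],
                     retrieve: Callable[[str], List[str]], **prompt_kwargs) -> str:
    """First call decides; second call answers with or without context."""
    decision = llm(build_ta_are_prompt(question, **prompt_kwargs)).strip()
    if decision.startswith("[Yes]"):
        context = "\n".join(retrieve(question))
        return llm(f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")
    # Anything other than "[Yes]" is treated as "[No]" in this sketch.
    return llm(f"Question: {question}\nAnswer:")
```

A call might look like `answer_with_gate(q, llm, search, yes_examples=[...], no_examples=[...], today=date.today())`, where `search` is whatever retrieval function the system already exposes.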

The result is an LLM-driven retrieval decision checkpoint that inherently accounts for time (via the date) and for the model’s own weaknesses (via the examples of failures). It’s like asking the model: “Do I already know this or should I look it up?” – with some coaching in the prompt to make that decision more reliable.

Because TA-ARE doesn’t require training new models or setting manual thresholds, it’s very practical. It essentially transforms the problem into a prompt engineering exercise, leveraging the LLM’s capabilities. And since it’s just text, it can work with closed-source models too (as long as you can prompt them), making it a flexible solution.

Effectiveness

According to the study and subsequent evaluations, TA-ARE proved to be a simple yet effective method for adaptive retrieval. It helped LLMs better assess when they needed outside help, improving the overall question-answering accuracy on their mixed dataset without incurring the overhead of always retrieving. Notably, it achieved this without requiring extensive parameter tuning or additional training, which is a significant advantage for maintainability.

In comparative tests, approaches like vanilla prompting (simply asking the model if it’s certain) were insufficient – models would either over-retrieve or under-retrieve because their self-assessment was unreliable. TA-ARE’s prompt design with the date and examples significantly outperformed those naive approaches, and came close to the performance of an oracle (ideal) strategy that knows exactly which questions need retrieval. In essence, TA-ARE closed much of the gap between a non-adaptive system and a perfectly adaptive one, at a fraction of the complexity.

One can imagine, however, that TA-ARE’s effectiveness might depend on the quality of the examples and the assumption that recent/obscure = needs retrieval. There could be edge cases (e.g., a very recent question that the model happened to see in training, if the cutoff was late, or the data got updated). However, by and large, the method addresses two major categories (temporal and long-tail knowledge) where retrieval is typically required.

Now that we have discussed adaptive RAG conceptually and through the specific lens of TA-ARE, let’s shift to a practical viewpoint: how one might implement adaptive RAG in a real system, and how these ideas manifest in an enterprise-grade platform like ZBrain Builder.

Implementing Adaptive RAG in practice

Designing and deploying an adaptive retrieval-augmented generation system requires orchestrating multiple components in a decision flow. Here we outline a general approach to building such a system, and then we’ll see how tools and frameworks can assist:

Multi-stage decision flow

A typical adaptive RAG pipeline can be thought of as a flowchart with decision nodes. One straightforward design is:

- Query analysis stage: When a query arrives, first run a quick analysis to decide if retrieval is needed. This could be a classifier model prediction (simple/moderate/complex), a prompt to the LLM to gauge confidence (as in TA-ARE or other prompting strategies), or even heuristic rules (like checking for certain keywords). This stage outputs a decision: e.g., “No retrieval”, “Retrieve once”, or “Retrieve multiple times”. It’s effectively the gatekeeper stage.

- Retrieval stage (conditional): If the decision is to retrieve, the system then formulates a query (which might just be the original question or a refined version of it) and searches the knowledge source. The knowledge source might be an internal vector database of enterprise documents, a web search engine, or any relevant data store. If multiple retrieval rounds are allowed (for complex queries), this stage might loop – for instance: retrieve -> see partial answer -> decide to retrieve more -> and so on, until criteria are met.

- Knowledge integration and answer generation: If retrieval was done, the retrieved snippets are vetted for relevance (perhaps discarding obviously off-base results) and then combined with the original question to feed into the LLM. If no retrieval is done, the original question alone is sent to the LLM. In either case, the LLM now generates the final answer. Optionally, if multiple pieces of information were gathered (like in a multi-step process), another component might synthesize or merge them before prompting the LLM.

Optionally, a final post-answer check can be in place: for example, verifying that the answer cites the retrieved sources or verifying that the answer actually addresses the question. If not, the system might decide to do an additional retrieval or rephrase the query and try again. This is more relevant in high-accuracy-demand scenarios.

The above flow ensures that a retrieval step is encapsulated by logic that can skip or repeat it as needed. At runtime, a simple query likely takes the path: Query Analysis -> (decide no retrieval) -> Answer via LLM immediately. A complex query may go: Query Analysis -> (decide retrieval needed) -> Retrieve -> Integrate -> (maybe Query Analysis again on a sub-question or after seeing partial info) -> possibly another Retrieve -> … -> Generate Answer.

This multi-stage orchestration can be implemented in code with if/else logic, loops, or more sophisticated orchestration frameworks or dynamic workflow engines. The complexity depends on how advanced the adaptivity is meant to be.
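
As a minimal sketch of the if/else-and-loop version of this flow, the Python below wires the three stages together. Everything named here is an assumption made for illustration: the cue-word gate `analyze_query`, the `adaptive_answer` function, and the `demo_retrieve` / `demo_generate` stand-ins are hypothetical and would be replaced by a real classifier, retriever, and LLM call.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical cue words for a heuristic gate; a trained classifier or an
# LLM prompt could replace this function without changing the rest.
TIME_CUES = {"today", "latest", "current", "recent", "now"}
MULTI_HOP_CUES = {"compare", "versus", "vs"}


def analyze_query(query: str) -> str:
    """Query analysis stage: return 'none', 'single', or 'multi'."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    if words & MULTI_HOP_CUES:
        return "multi"
    if words & TIME_CUES:
        return "single"
    return "none"


@dataclass
class Answer:
    text: str
    decision: str
    context: List[str] = field(default_factory=list)


def adaptive_answer(
    query: str,
    retrieve: Callable[[str, List[str]], List[str]],
    generate: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> Answer:
    decision = analyze_query(query)
    context: List[str] = []
    if decision != "none":
        # Retrieval stage (conditional): one round, or a bounded loop.
        rounds = 1 if decision == "single" else max_rounds
        for _ in range(rounds):
            new = [s for s in retrieve(query, context) if s not in context]
            if not new:  # nothing new came back, so stop looping
                break
            context.extend(new)
    # Integration and generation: the LLM sees the question plus any context.
    return Answer(generate(query, context), decision, context)


# Stand-ins for a real retriever and LLM, for demonstration only.
DOCS = ["Travel policy v2 took effect in March.", "Expense policy caps meals at $50."]


def demo_retrieve(query: str, seen: List[str]) -> List[str]:
    return DOCS[len(seen):len(seen) + 1]  # hand back one unseen snippet per round


def demo_generate(query: str, context: List[str]) -> str:
    return f"answer grounded in {len(context)} snippet(s)"
```

Calling `adaptive_answer("What is the capital of France?", demo_retrieve, demo_generate)` takes the no-retrieval path, while a query containing "compare" loops until the retriever returns nothing new or `max_rounds` is reached. The bounded loop is a deliberate choice: it keeps a complex query from retrieving indefinitely.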

Tools & frameworks

Building an adaptive RAG system from scratch can be involved, but fortunately, there are emerging frameworks that help manage these decision flows and agent-like behaviors:

-

LangChain and LangGraph: LangChain is a popular framework for developing LLM agents, and it provides abstractions for chains (sequential processes) and agents (which can use tools like web search). An extension of LangChain called LangGraph enables defining workflows as a graph of nodes (each node could be an LLM call or a tool call), complete with conditional branches. This is very useful for adaptive RAG because you can visually or programmatically lay out the decision nodes (e.g., a classifier node feeding into a conditional branch: if “needs retrieval” go to a search node, else go to an answer node). In fact, LangGraph was explicitly designed to handle complex agentic flows, making it easier to orchestrate retrieval decisions and iterative loops in a reliable way. By using such a framework, developers can implement adaptive behavior declaratively, rather than manually handling all control logic.

-

Orchestration platforms: Tools like ZBrain Builder (from ZBrain) or other enterprise AI orchestration platforms offer a higher-level interface for creating these flows. ZBrain Builder, for instance, offers a low-code, visual interface to configure multi-agent or decision-driven workflows. Under the hood, it leverages frameworks (such as LangGraph or others) that you can add. This can accelerate the implementation of adaptive RAG in an enterprise context, as it allows for the setup of retrieval gating logic and knowledge base connections with minimal coding. Other orchestration frameworks include Microsoft’s Semantic Kernel and Google ADK, which also allow for conditional logic and the use of external tools.

-

APIs for real-time data: To integrate real-time information, developers can use APIs (e.g., search engine APIs, news feeds). Many LLM agent frameworks have tools to call web search or other APIs. Ensuring your pipeline has access to such a live data tool is essential for time-sensitive queries. For example, one might incorporate a “WebSearchTool” that is only invoked if the query is classified as time-sensitive.

-

Vector databases and indexes: On the retrieval side, having a well-maintained vector index of your knowledge base allows rapid similarity search for relevant documents. Tools like FAISS, Qdrant, or Pinecone can be used for this. An adaptive system might even maintain multiple indices (e.g., one for static knowledge like documentation, and one that’s continuously updated with recent info or user-generated content). Then the decision logic could choose which index to query based on context (for instance, use the “recent updates” index if a question is time-sensitive). Some frameworks can handle this routing of queries to different indexes automatically through a router component.

-

Evaluation & feedback loop: Implementing adaptive RAG isn’t just about the live system – you also need to test and refine it. One might use logging to see how often retrieval was triggered and whether it correlated with better answers. Human-in-the-loop evaluation or user feedback can highlight cases where the system should have retrieved but didn’t (or vice versa). This feedback can then be used to adjust the classifier threshold, retrain the gatekeeper model, or add more prompt examples to the LLM gating prompt. Over time, the system “learns” when to retrieve more accurately.

The combination of these tools essentially gives you an agentic system – the LLM (or a set of LLM agents) can make decisions (like whether to use a tool, which tool to use, etc.). In adaptive RAG, the main decision is “Use the retrieval tool or not?” which can be considered a simplified form of an agent deciding on an action.
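
The evaluation and feedback loop described above can be made concrete with a small amount of bookkeeping. The sketch below is one possible shape, not a prescribed method: `GateLog`, `summarize`, and `adjust_threshold` are hypothetical names, and it assumes a gate that retrieves whenever the model's confidence falls below a threshold.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class GateLog:
    query: str
    retrieved: bool  # did the gate trigger retrieval?
    correct: bool    # was the final answer judged correct (human or user feedback)?


def summarize(logs: Iterable[GateLog]) -> Dict[str, float]:
    """Report how often retrieval ran and how often skipping it led to a wrong answer."""
    logs = list(logs)
    skipped = [entry for entry in logs if not entry.retrieved]
    retrieval_rate = sum(e.retrieved for e in logs) / len(logs) if logs else 0.0
    skip_error_rate = (
        sum(not e.correct for e in skipped) / len(skipped) if skipped else 0.0
    )
    return {"retrieval_rate": retrieval_rate, "skip_error_rate": skip_error_rate}


def adjust_threshold(
    threshold: float, skip_error_rate: float, target: float = 0.05, step: float = 0.05
) -> float:
    """Assumes the gate retrieves when confidence < threshold.

    Raising the threshold makes retrieval more frequent; lowering it makes
    retrieval rarer. The target and step values are illustrative defaults.
    """
    if skip_error_rate > target:
        threshold += step  # too many wrong answers without retrieval
    elif skip_error_rate < target / 2:
        threshold -= step  # skipping is safe, so skip a little more often
    return min(1.0, max(0.0, threshold))
```

The skip error rate is the key signal here because it counts exactly the cases where the system should have retrieved but did not. Measuring unnecessary retrievals is harder, since it needs a counterfactual answer produced without retrieval.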

Real-time data integration

Since adaptivity often entails recognizing the need for up-to-date info, integrating real-time data sources is a key practical aspect. There are a few ways to achieve this:

-

Periodic knowledge base updates: If you have an internal knowledge base (e.g., company documents), ensure it’s updated frequently. An adaptive system could detect that something is not found in the current KB and might then query a broader source (like the web). Some enterprise systems allow for the scheduled ingestion of new documents (for instance, ingesting new files daily into the vector store).

-

Hybrid search strategies: Implement both vector similarity search and keyword search. Vector search is great for semantic relevance, but for very recent info, a keyword search on a live index or web might be better. An adaptive retriever might try vector search first on a static index, and if that yields nothing useful (or if the query has temporal markers), it could then fall back to a web search. This ensures that if the answer isn’t in the known documents, the system has a chance to find it externally on the fly.

-

Temporal indexing: Some advanced designs involve time-tagged indexes. For example, maintaining snapshots or segmented indices by year. Then, if a query asks “in 2023, what was…”, the system can direct the search to the 2023 slice of data specifically. This is more relevant in large-scale archives or news repositories.

-

Caching recent responses: If certain time-sensitive queries repeat (e.g., “What’s the weather now?” asked by many users), the system can cache the latest answer for a short period. Strictly speaking, this is not about retrieval adaptivity, but it improves efficiency. The adaptive part is recognizing that a question is time-based, retrieving the answer once from an API, and then reusing it for a window of time.
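
The last two items above, temporal indexing and short-lived caching, are simple enough to sketch directly. The index names (`index_2023`, `index_all`), the `pick_index` helper, and the `TTLCache` class are all hypothetical and chosen only for illustration.

```python
import re
import time
from typing import Callable, Dict, Iterable, Tuple


def pick_index(query: str, year_slices: Iterable[int], default: str = "index_all") -> str:
    """Temporal indexing: route to a per-year slice if the query names a year."""
    match = re.search(r"\b((?:19|20)\d{2})\b", query)
    if match and int(match.group(1)) in set(year_slices):
        return f"index_{match.group(1)}"
    return default


class TTLCache:
    """Caching recent responses: reuse a fetched answer for a short window."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the expiry logic can be tested
        self._store: Dict[str, Tuple[float, str]] = {}

    def get_or_fetch(self, query: str, fetch: Callable[[], str]) -> str:
        key = " ".join(query.lower().split())  # normalise case and whitespace
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = fetch()  # e.g. a call to a weather or news API
        self._store[key] = (now, value)
        return value
```

With a 60-second TTL, repeated "What's the weather now?" queries inside that window reuse one API call. The right TTL depends on how quickly the underlying data goes stale, so it is best treated as a per-source setting.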

One must also consider that real-time integration comes with challenges: external search APIs may have rate limits, data sources may be unreliable or have varying formats, and the system needs to parse and filter the retrieved information for the LLM. All these need to be handled gracefully. Often, developers include a fallback – if the real-time search fails or yields no results, the system may then attempt to answer with what it knows (with a disclaimer, if appropriate). Maintaining a good user experience means that even when retrieval cannot obtain fresh information, the system should respond sensibly (“I’m sorry, I cannot find the latest information on that”).
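
A fallback chain of this kind might look like the sketch below. It assumes each source is a plain callable that returns a list of snippets; the source names, the stand-in functions, and the exact wording of the disclaimer are illustrative assumptions.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Retriever = Callable[[str], List[str]]
APOLOGY = "I'm sorry, I cannot find the latest information on that."
DISCLAIMER = " (Answered without retrieved sources; this may be out of date.)"


def retrieve_with_fallback(
    query: str, retrievers: Sequence[Tuple[str, Retriever]]
) -> Tuple[Optional[str], List[str]]:
    """Try each source in order; skip sources that fail or return nothing."""
    for name, retriever in retrievers:
        try:
            results = retriever(query)
        except Exception:  # broad on purpose: rate limits, timeouts, bad payloads
            continue
        if results:
            return name, results
    return None, []


def answer(
    query: str,
    retrievers: Sequence[Tuple[str, Retriever]],
    generate: Callable[[str, List[str]], str],
    time_sensitive: bool,
) -> str:
    source, snippets = retrieve_with_fallback(query, retrievers)
    if snippets:
        return generate(query, snippets)
    if time_sensitive:
        # Model memory is likely stale for this query, so decline instead.
        return APOLOGY
    return generate(query, []) + DISCLAIMER


# Stand-in sources and generator, for demonstration only.
def empty_vector_search(query: str) -> List[str]:
    return []


def rate_limited_web_search(query: str) -> List[str]:
    raise RuntimeError("rate limit exceeded")


def working_web_search(query: str) -> List[str]:
    return ["Press release published this morning."]


def demo_generate(query: str, snippets: List[str]) -> str:
    return f"answer from {len(snippets)} snippet(s)"
```

Ordering the sources from cheapest to most expensive (static index first, live search second) keeps the common case fast. In production, the broad `except` would normally also log the failure so that rate-limit problems stay visible.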

Overall, implementing adaptive RAG involves carefully designing the control flow and leveraging the right tools to execute it. It’s an investment in making the QA system smarter and more efficient. Next, we’ll examine how these principles are exemplified in ZBrain’s approach, showcasing a real-world application of agentic, adaptive RAG in an enterprise AI assistant.

ZBrain’s approach and perspective

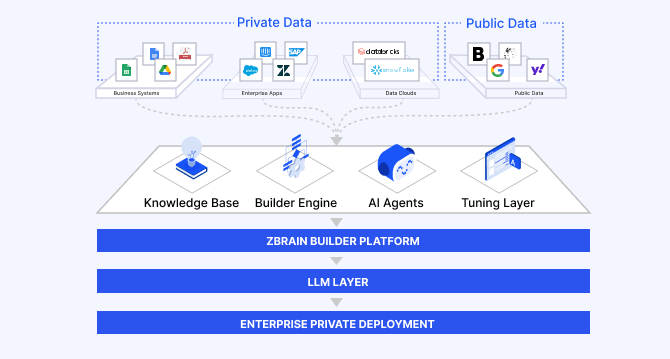

ZBrain Builder is a modular agent orchestration platform purpose-built for enterprise AI. One of its key capabilities is enabling agentic retrieval—a paradigm where autonomous agents make retrieval decisions dynamically as part of their reasoning workflows. While agentic retrieval is not synonymous with adaptive RAG, it is a powerful enabler of it. ZBrain Builder leverages this approach to deliver fine-grained, context-aware, and efficient Retrieval-Augmented Generation across enterprise knowledge systems. Alongside, ZBrain Builder’s Agent Crew orchestration enables multiple agents to collaborate using modular retrieval via KB search tools and external sources, guided by Model Context Protocol (MCP) for context-aware, time-sensitive decisions.

Agentic retrieval in ZBrain Builder

In traditional RAG systems, retrieval is a fixed step: every query triggers a document fetch, regardless of whether it is necessary. ZBrain Builder replaces this static behavior with agentic decision-making. Within ZBrain Builder, every query is processed by an orchestrated network of intelligent agents that evaluate whether retrieval is needed and, if so, where to retrieve from.

In enterprise use cases, this adaptivity is critical. For example, the agent may skip retrieval for well-known facts, conduct a shallow lookup for moderately complex questions, or activate a deep retrieval plan involving multiple tools for hard, multi-hop queries. LangGraph-based orchestration underpins these dynamic flows, allowing real-time branching, retries, and tool invocation across agents.

Multi-agent collaboration with retrieval tools

ZBrain Builder also supports Agent Crew orchestration, where multiple specialized agents collaborate to fulfill complex tasks. Within these crews, retrieval is a modular capability—not tied to a single model or service. Agents can use ZBrain’s built-in knowledge base search tools or call external sources (e.g., APIs, databases, or real-time feeds) depending on the nature of the query and its time sensitivity.

-

For instance, a Retrieval Agent might query the knowledge base.

-

A separate Database Agent might handle SQL queries to live systems.

-

A Validator Agent could cross-check the retrieved results for compliance or relevance.

Because these agents can work in parallel and invoke tools as needed, ZBrain Builder enables a highly adaptive RAG architecture—one that is both modular and composable. Retrieval is no longer a monolith, but a flexible and intelligent action within an evolving reasoning loop.
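
To illustrate the general pattern of agents working in parallel with a validation step, here is a generic sketch using Python's standard thread pool. It is not ZBrain Builder's implementation, which the article does not detail; `run_crew` and the three stand-in agents are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Agent = Callable[[str], List[str]]


def run_crew(
    query: str,
    agents: Dict[str, Agent],
    validate: Callable[[str, str], bool],
) -> Dict[str, List[str]]:
    """Run every agent on the query concurrently, then filter their results."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(agent, query) for name, agent in agents.items()}
        gathered = {name: future.result() for name, future in futures.items()}
    # Validator step: keep only the results the validator accepts.
    return {
        name: [r for r in results if validate(query, r)]
        for name, results in gathered.items()
    }


# Stand-in agents, for demonstration only.
def retrieval_agent(query: str) -> List[str]:
    return ["KB: leave policy allows 20 days per year", "KB: cafeteria menu for Monday"]


def database_agent(query: str) -> List[str]:
    return ["DB: employee 42 has 5 leave days remaining"]


def validator_agent(query: str, result: str) -> bool:
    return "leave" in result.lower()  # toy relevance check
```

Because each agent is just a callable keyed by name, adding a new retriever or data connector means adding one dictionary entry, which mirrors the modularity described above.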

Modular, time-aware, and contextual

This adaptive RAG design allows ZBrain Builder to deliver several advantages:

-

Selective retrieval: The system determines whether retrieval is necessary, thereby avoiding wasteful or redundant lookups.

-

Time-aware strategies: ZBrain agents detect time-sensitive queries and prioritize fresh data or invoke real-time tools if a prompt indicates that it requires recent data.

-

Modular scaling: New agents, retrievers, or data connectors can be added without changing the core workflow—ideal for evolving enterprise environments.

In summary, agentic retrieval in ZBrain Builder enables adaptive RAG by giving agents the autonomy to reason about when, how, and from where to retrieve—making enterprise AI both more efficient and contextually aligned. It moves beyond the rigid RAG pipelines of yesterday and toward an architecture that adapts to user intent, query complexity, and enterprise knowledge in real time.

How adaptive retrieval logic is used in ZBrain Builder

Every query in ZBrain Builder passes through an initial decision node that effectively asks, “Do I (the AI agent) already know the answer, or should I retrieve additional information?” This is implemented as the first step of the agent’s reasoning. When a user question is received, the agent will internally evaluate it and determine whether to consult the knowledge base.

In practice:

-

The agent (LLM) might be prompted to check its confidence or knowledge – akin to the prompting strategy we discussed (the exact method may be proprietary, but it aligns with the idea of the agent being self-aware of its knowledge limit).

-

If the agent decides no retrieval is needed (e.g., the query is trivial or can be answered from memory), the workflow skips straight to generating an answer and terminates quickly. This saves time and cost, as no further processing is done beyond the LLM formulating an answer from what it already knows.

-

If the agent decides retrieval is required, it proceeds to call the retrieval tool (the knowledge base search).

This kind of gating logic mirrors exactly the concept of adaptive RAG: the system itself makes a judgment call on retrieval. Under the hood, this could be implemented via a small prompt to the LLM or via heuristics; the key is that the agent has an opportunity to decide, not just retrieve by default.
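
Since the exact gating method is not public, the following is only a sketch of the "small prompt to the LLM" option. The prompt wording, the `needs_retrieval` and `respond` functions, and the `demo_llm` stand-in are assumptions; `llm` is any callable that maps a prompt string to a reply string.

```python
from typing import Callable, List

GATE_PROMPT = (
    "You are deciding whether a question needs external documents.\n"
    "Reply with exactly one word: RETRIEVE or ANSWER.\n"
    "Question: {question}\n"
)


def needs_retrieval(question: str, llm: Callable[[str], str], default: bool = True) -> bool:
    """Ask the model whether it needs external documents for this question."""
    reply = llm(GATE_PROMPT.format(question=question)).strip().upper()
    if reply.startswith("ANSWER"):
        return False
    if reply.startswith("RETRIEVE"):
        return True
    return default  # unparseable reply: err on the side of retrieving


def respond(
    question: str, llm: Callable[[str], str], search: Callable[[str], List[str]]
) -> str:
    if needs_retrieval(question, llm):
        context = "\n".join(search(question))
        return llm(f"Context:\n{context}\n\nQuestion: {question}")
    return llm(question)  # no retrieval: answer from the model's own knowledge


# Stand-in model and search tool, for demonstration only.
def demo_llm(prompt: str) -> str:
    if prompt.startswith("You are deciding"):
        return "RETRIEVE" if "policy" in prompt.lower() else "ANSWER"
    return "grounded answer" if prompt.startswith("Context:") else "answer from memory"


def demo_search(question: str) -> List[str]:
    return ["Travel policy v3, section 2."]
```

Defaulting to retrieval when the gate's reply cannot be parsed trades a little latency for safety. A latency-sensitive deployment might choose the opposite default.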

Time and context awareness

ZBrain’s agentic RAG is also context-aware in choosing which data sources or tools to use. Enterprises often have multiple knowledge silos, including documentation, databases, an internal wiki, and possibly even internet access for specific queries. A rigid system might only search one index, but ZBrain’s agents can route queries intelligently. For example, a routing agent could decide that a query about employee records should be directed to a SQL database, whereas a query about general product information should be routed to the documentation knowledge base. If a question depends on live or very recent information (e.g., “What is the weather today?”), the agent could invoke an external API or web search, provided it’s configured to do so.

By factoring in the context and intent of the query, ZBrain’s orchestration can dynamically choose the appropriate tool. This is how time-awareness might manifest: if it recognizes a query as time-sensitive (based on context or phrasing), it might engage a “live data” tool rather than relying on a possibly stale knowledge base. In ZBrain Builder, connectors known as Model Context Protocol (MCP) connectors enable agents to interact securely with external systems and APIs. An agent could use an MCP connector to fetch the latest information from, for example, a company news feed or a web search for the most recent article on a topic, and integrate that into its response.

In practical terms, ZBrain Builder functions as a coordinated ecosystem of agents and tools working in concert to solve complex tasks. Some agents handle the decision (“should I search?”), some handle the action of searching, and some may handle other tasks (like calculations or API calls) as needed. They maintain an awareness of context.
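
The routing idea in this section can be sketched as a small rule-based router. The tool names (`live_api`, `sql_database`, `docs_kb`) and the cue words are hypothetical; a production router would more likely be an LLM prompt or a trained classifier, and nothing here reflects ZBrain's internal logic.

```python
import re
from typing import List, Set, Tuple

# Ordered rules: the first tool whose cue words appear in the query wins.
ROUTES: List[Tuple[str, Set[str]]] = [
    ("live_api", {"today", "now", "latest", "current", "weather"}),
    ("sql_database", {"employee", "employees", "salary", "headcount", "records"}),
]
DEFAULT_ROUTE = "docs_kb"


def route(query: str, live_tools_enabled: bool = True) -> str:
    """Pick a data source for the query based on simple word cues."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    for tool, cues in ROUTES:
        if words & cues:
            if tool == "live_api" and not live_tools_enabled:
                continue  # live tools are only used when configured
            return tool
    return DEFAULT_ROUTE
```

The `live_tools_enabled` flag reflects the caveat above that external lookups happen only when the agent is configured for them. With the flag off, a time-sensitive query falls through to the next matching rule or to the documentation knowledge base.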

Time-aware retrieval with prompt manager in ZBrain Builder

ZBrain Builder enhances retrieval precision through time-aware retrieval, enabled by its built-in prompt manager. When creating prompts within the prompt manager, users can append the current date and time, embedding temporal context directly into the prompt template using variables. This feature is particularly valuable in enterprise settings where data relevance often changes over time—such as legal updates, financial records, or time-bound policies.

Once configured, these time-stamped prompts can be seamlessly integrated into Flows or agents during knowledge base retrieval operations. This allows ZBrain solutions to intelligently surface the most temporally relevant information, ensuring that the AI response is grounded not just in the right content, but in the right timeframe.

By incorporating temporal context at the prompt level, ZBrain enables more context-aware decision-making and supports advanced use cases like regulatory compliance tracking, versioned document search, and real-time situational analysis—making its RAG (Retrieval-Augmented Generation) workflows significantly more robust and accurate.
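
The article does not show the prompt manager's variable syntax, so the sketch below uses Python's `string.Template` as a stand-in to show the general idea of injecting the current date and time into a prompt template. The template wording and the `build_prompt` helper are assumptions.

```python
from datetime import datetime, timezone
from string import Template
from typing import Optional

# Stand-in template; $now and $question play the role of prompt variables.
PROMPT = Template(
    "Current date and time (UTC): $now\n"
    "Prefer sources that are in effect as of this date.\n"
    "Question: $question"
)


def build_prompt(question: str, now: Optional[datetime] = None) -> str:
    """Fill the template, defaulting to the current UTC time."""
    now = now or datetime.now(timezone.utc)
    return PROMPT.substitute(now=now.strftime("%Y-%m-%d %H:%M"), question=question)
```

Passing `now` explicitly makes the prompt reproducible in tests and lets a workflow ask "as of" questions about a past date, which is useful for versioned policies.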

Technical implementation (LangGraph and Orchestration)

Under the hood, ZBrain Builder leverages an orchestration framework to manage these complex interactions.

-

The framework’s role is to define the graph of nodes (decision nodes, tool nodes, etc.) and enforce the logic (e.g., if the agent says “need retrieval,” then call the tool node).

-

The LLM’s role is to carry out the reasoning at each step: interpreting the query, deciding on retrieval, formulating search queries, evaluating results, and ultimately generating the answer.

ZBrain Builder provides a low-code interface for workflow/agent design, but conceptually, it’s using these building blocks:

-

A classifier or router agent (could be an LLM prompt or model) at the start to determine query handling.

-

A knowledge base search tool that is agentic (as described).

-

Possibly other tools (like external search, database query) that can be invoked if the workflow calls for it.

-

A loop mechanism to allow query refinement if needed.

-

A final answer generation step using the main LLM agent.

The use of an orchestration framework is crucial for enterprise readiness because it allows explicit control and transparency. Each node in the workflow is defined and observable, which means the developers can monitor, log, and debug the process (important for enterprise compliance and troubleshooting). It also means that if a particular decision strategy isn’t working, it can be adjusted in isolation (for example, swapping out the prompt used in the retrieval-decision node, or adjusting the number of examples).
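
To make the building blocks and the observability point concrete, here is a tiny hand-rolled graph runner. It deliberately does not use LangGraph or any ZBrain API: `run_graph` and the node functions are hypothetical, and each node simply updates a shared state dictionary and returns the name of the next node.

```python
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
Node = Callable[[State], str]  # updates state, returns the next node name or "END"


def run_graph(
    nodes: Dict[str, Node], start: str, state: State, max_steps: int = 10
) -> Tuple[State, List[str]]:
    """Walk the graph from `start`, recording every visited node in a trace."""
    trace: List[str] = []
    current = start
    for _ in range(max_steps):  # step cap guards against endless refine loops
        if current == "END":
            break
        trace.append(current)
        current = nodes[current](state)
    return state, trace


def router(state: State) -> str:
    state["needs_retrieval"] = "policy" in state["query"].lower()
    return "search" if state["needs_retrieval"] else "answer"


def search(state: State) -> str:
    state["attempts"] = state.get("attempts", 0) + 1
    # Stand-in knowledge base: only a query mentioning "travel" finds a document.
    state["docs"] = ["Travel policy v3"] if "travel" in state["query"].lower() else []
    if state["docs"] or state["attempts"] >= 2:
        return "answer"
    return "refine"


def refine(state: State) -> str:
    state["query"] += " travel"  # toy query refinement
    return "search"


def answer(state: State) -> str:
    state["answer"] = f"answered with {len(state.get('docs', []))} doc(s)"
    return "END"


NODES: Dict[str, Node] = {
    "router": router, "search": search, "refine": refine, "answer": answer,
}
```

The returned trace is what makes the flow auditable: for a trivial query it reads `router, answer`, and for a query that needs refinement it shows each search and refine step in order. Swapping the decision strategy means replacing only the `router` entry in `NODES`.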

In essence, ZBrain Builder has adopted a modular, agent-based architecture: one can plug in different tools, define decision criteria, and the LLM agents will navigate that graph. This makes the system highly adaptable. For example, if an organization wants its agent to incorporate a new data source or follow a new rule (such as always verifying an answer from two sources before responding), the graph can be updated to accommodate this. The separation of concerns (workflow vs LLM reasoning) means the heavy-lifting LLM (like GPT-5) is used efficiently – it focuses on reasoning and language tasks, while the framework handles the procedural routing and integration.

Efficiency and impact for enterprise

The net result of ZBrain’s adaptive, agentic retrieval strategy is a system that scales efficiently in an enterprise setting while delivering accurate, context-rich answers. By avoiding unnecessary retrievals, it reduces latency and compute costs for the numerous routine queries that employees or customers may ask. By engaging in retrieval (and even complex multi-hop retrieval) for tough questions, it ensures that the answers don’t suffer in quality – difficult queries receive the additional knowledge and reasoning steps they require.

This blend of adaptability and temporal awareness is crucial for enterprise AI assistants. Enterprises deal with a broad spectrum of queries, ranging from simple FAQs to complex analytical questions, and from static historical data to breaking news that affects the business. A one-dimensional approach would either waste resources or fail to handle some cases well. ZBrain’s approach, as we’ve seen, tackles this by dynamically adjusting to each scenario.

For example, if an executive asks, “Generate a summary of our company’s financial performance in the last quarter,” the agent knows to pull the latest quarterly report from the knowledge base (since it’s time-bound data) and maybe even cross-check figures from a financial database. If another user asks, “What is the capital of France?” in the same system, the agent will simply answer “Paris” without bothering the document store – a trivial case handled instantly. This kind of responsiveness fosters user trust because the AI appears to “know” when it needs to look something up versus when it can simply answer, which makes its responses faster and often more precise.

From an efficiency standpoint, adaptive retrieval would be expected to show up in ZBrain’s KB logging as fewer documents retrieved per query on average and shorter response times for easy questions. At the same time, user satisfaction with hard questions should improve because the system is more likely to retrieve the right information and produce correct answers (instead of either guessing incorrectly or giving an “I don’t know” when it has no retrieval).


Endnote

ZBrain Builder demonstrates how agentic (tool-using) behavior and adaptive retrieval logic can be fused to create an enterprise AI that is both smart and efficient. It validates in a real product what research has been suggesting: that selective retrieval – deciding when to retrieve and how to tailor that retrieval – is a game-changer for building reliable, scalable AI assistants. By learning from each query and continuously incorporating new data (via updated knowledge bases or real-time search), such a system remains current and accurate.

Adaptive RAG, especially when combined with an agentic orchestration platform like in ZBrain, represents a next step in the evolution of AI assistants: moving beyond static QA systems to ones that actively reason about their own knowledge and actions. This leads to interactions that feel more natural (the AI doesn’t overdo things when it’s not necessary) and more trustworthy (the AI seeks information when it should, instead of making things up). For any organization looking to deploy AI on top of their ever-changing, growing bodies of data, this approach is likely to become the gold standard – ensuring both precision and efficiency in information retrieval and generation.



Author’s Bio

An early adopter of emerging technologies, Akash leads innovation in AI, driving transformative solutions that enhance business operations. With his entrepreneurial spirit, technical acumen and passion for AI, Akash continues to explore new horizons, empowering businesses with solutions that enable seamless automation, intelligent decision-making, and next-generation digital experiences.


Frequently Asked Questions

What is Adaptive Retrieval-Augmented Generation (RAG)?

Adaptive RAG is an advanced variant of standard RAG architecture that intelligently decides when and how to retrieve external knowledge. Unlike traditional RAG systems that retrieve documents for every query, adaptive RAG selectively performs retrieval based on the nature and complexity of the question. This decision-making process enhances efficiency, reduces latency, and improves response quality by avoiding unnecessary retrievals.

Why is adaptivity important in RAG pipelines?

Static RAG pipelines treat all queries the same—triggering retrieval even for simple questions that an LLM can answer from its internal knowledge. This leads to redundant processing, increased latency, and sometimes lower answer quality due to the injection of irrelevant or noisy context. Adaptive RAG addresses these issues by employing techniques such as confidence thresholds, classifier models, or LLM-based prompting to retrieve information only when necessary.

What are the key techniques used to implement Adaptive RAG?

Three common techniques include:

-

Threshold-based calibration, where retrieval is triggered based on query complexity or confidence scores.

-

Gatekeeper classifiers, which use a separate ML model to predict whether retrieval is needed.

-

LLM-based prompting, where the LLM itself decides (via prompt engineering) if external knowledge is required before answering.

These methods may be used individually or in combination to fine-tune retrieval behavior based on context and performance goals.

How does adaptive RAG handle time-sensitive queries?

Adaptive RAG incorporates time-awareness by detecting temporal cues in user queries (e.g., “latest update,” “as of 2025”) and dynamically fetching the most recent data. This is crucial because LLMs have fixed knowledge cutoffs and cannot answer real-time questions without up-to-date retrieval. Time-aware adaptive RAG ensures the model fetches fresh, relevant content when the query context demands it.

What are the business advantages of Adaptive RAG for enterprises?

Enterprises benefit through:

-

Reduced latency and cost by skipping unnecessary retrievals.

-

Improved accuracy by focusing retrieval on complex or time-sensitive queries.

-

Greater scalability, with optimized resource usage across high query volumes.

-

Regulatory alignment, as selective retrieval limits the injection of outdated or incorrect knowledge in critical workflows.

How does ZBrain Builder implement adaptive retrieval—both in terms of deciding when to retrieve and the architecture that enables this decision-making?

ZBrain Builder employs a modular, agentic orchestration approach in which retrieval is treated as a dynamic capability rather than a fixed pipeline step. At the core of this architecture is the Agent Crew model, where specialized agents, each with defined capabilities, collaborate to solve complex tasks. These agents are coordinated using Model Context Protocol (MCP), which ensures structured memory sharing, tool access control, and consistent context handoff across agents during execution. The orchestration layer coordinates task execution, retrieval logic, and decision routing across the agent network.

When Agentic Retrieval is enabled during KB setup, each agent in the crew can independently assess whether a query warrants external retrieval. This assessment considers multiple factors, including query complexity, confidence thresholds, time sensitivity, and knowledge coverage.

Key aspects include:

-

Retrieval as a tool: Instead of hardcoded logic, retrieval is implemented as an on-demand tool that agents can invoke as part of their reasoning loop. This enables agents to retrieve from knowledge bases, call APIs, or connect with real-time data sources only when needed.

-

Tool integration layer: ZBrain agents can leverage third-party search tools, internal databases, or proprietary KBs as modular retrieval backends. These are pluggable into the reasoning workflow, allowing dynamic selection based on the context of each query.

-

Temporal and contextual routing: The MCP ensures that time-sensitive or domain-specific queries are routed to appropriate retrievers, e.g., selecting real-time search vs. static KB lookup depending on the inferred user intent.

-

Adaptive decision flow: Retrieval decisions are embedded within the reasoning flow graph (via orchestration framework), enabling agents to evaluate intermediate outcomes and revise retrieval strategies mid-execution if necessary.

This architecture enables retrieval to be both context-aware and agent-governed, ensuring optimal information access while minimizing unnecessary lookups. The result is a scalable, efficient, and intelligent enterprise RAG framework aligned with the principles of modularity and adaptive AI.

What makes ZBrain Builder’s approach to adaptive RAG unique?

ZBrain’s uniqueness lies in its modular, agentic design. It allows:

-

Agent crews that orchestrate multi-step reasoning with specialized agents.

-

Tool-based retrieval, where a KB search tool is called only when needed.

-

Framework-based orchestration, enabling branching logic and conditional workflows.

This makes retrieval a skill, not a rule—enhancing flexibility and contextual precision.

What performance or scalability benefits does adaptive RAG bring to ZBrain customers?

ZBrain’s adaptive RAG reduces response times by avoiding retrieval for trivial queries and focuses resources on complex tasks. This not only improves answer quality but significantly reduces compute/API overhead, making enterprise AI deployments faster, leaner, and more cost-efficient—especially at scale.

Why should enterprise leaders prioritize adaptive RAG in AI deployments?

Adaptive RAG aligns with enterprise priorities by enabling intelligent, resource-efficient, and scalable AI solutions. It ensures:

-

Operational efficiency, through selective processing.

-

Compliance-readiness, by reducing misinformation risks from outdated knowledge.

-

Strategic adaptability, by future-proofing AI assistants with modular, dynamic capabilities.

ZBrain’s implementation exemplifies how adaptive AI architecture can deliver trustworthy, real-time, and business-aligned automation.

How do we get started with ZBrain™ for AI development?

To begin your AI journey with ZBrain™:

-

Contact us at hello@zbrain.ai

-

Or fill out the inquiry form on zbrain.ai

Our dedicated team will work with you to evaluate your current AI development environment, identify key opportunities for AI integration, and design a customized pilot plan tailored to your organization’s goals.
