ZBrain Builder’s AI adaptive stack: Built to evolve intelligent systems with accuracy and scale


As enterprise adoption of AI matures, a fundamental shift is underway: from rigid, one-size-fits-all architectures to modular, adaptive AI technology stacks. Today’s organizations operate in fast-moving environments where data sources evolve, governance requirements increase, and AI tooling advances rapidly. In this landscape, static platforms—built around fixed models, inflexible pipelines, or closed integrations—inhibit innovation, degrade accuracy over time, and slow time-to-value. What’s needed is not just smarter models, but a smarter AI infrastructure—one that can adapt to changing requirements, integrate best-in-class components, and continuously optimize how AI is delivered and governed.

Analyst firms are reinforcing this direction. Gartner has cited “Adaptive AI” as a strategic trend—not solely in the behavior of AI, but in the architectures that support it. Forrester goes further, emphasizing that AI initiatives must be built on adaptive process automation to avoid becoming obsolete. McKinsey urges a shift from monolithic, model-centric deployments to modular, orchestrated platforms designed for agility, reuse, and governance. These insights point to a clear imperative: enterprises must architect their AI capabilities for structural adaptability, not just algorithmic intelligence.

ZBrain Builder’s AI adaptive stack is built for precisely this need. It is a technology stack designed to adapt—not only in how it supports a variety of AI models and tools, but in how it orchestrates them to fit enterprise-specific workflows, compliance rules, and evolving business priorities. It allows teams to plug in best-fit models (LLMs, search engines, evaluators), dynamically route tasks through the most appropriate tools, and refine outputs through continuous monitoring—all within a governed, modular architecture. Unlike static platforms, ZBrain Builder’s AI stack is not constrained by pre-defined logic or vendor lock-in; it is composable, extensible, and policy-aware by design.

In this article, we explore what makes a technology stack truly adaptive, and why that distinction matters for enterprise-scale AI. We define the key dimensions of adaptability—across data integration, model abstraction, workflow orchestration, and evaluation feedback—and demonstrate how ZBrain Builder delivers on each. We examine the pitfalls of conventional AI stacks, showcase ZBrain Builder’s flexible architecture in real-world scenarios like multilingual customer support and legal contract review, and illustrate how its adaptability enables continuous performance gains, faster innovation, and future-proof governance.

For organizations looking to operationalize AI at scale, adaptability isn’t a feature; it’s the foundation. ZBrain Builder’s AI adaptive stack provides a modular, intelligent infrastructure that empowers enterprises to evolve, integrate, and scale AI with confidence.

The challenge: Why traditional AI stacks fail

Despite heavy investments and promising pilots, many enterprise AI initiatives fail to scale. A recent MIT study revealed that only about 5% of corporate AI projects deliver measurable value or ROI. McKinsey likewise found that fewer than 10% of AI use cases deployed in enterprises ever progress beyond the pilot stage to wide adoption. What causes this drop-off? In large part, traditional AI stacks are too rigid and static to keep up with real-world complexity and change.

Key limitations of conventional AI architectures include:

- Static, brittle pipelines: Traditional AI solutions are often built as fixed pipelines – data is ingested, a model is trained, and a deployment pipeline serves outputs. These pipelines don’t easily accommodate changes. If data distributions shift or business requirements evolve, the whole pipeline must be re-engineered. Such systems lack mechanisms for ongoing learning or adjustment. As a result, performance stagnates or degrades over time due to model drift and unhandled exceptions. Without adaptability, even well-designed AI quickly becomes obsolete in dynamic environments.

- Model lock-in and siloed AI: Enterprises frequently get locked into a single model or vendor. A company might build around a single ML model or large language model (LLM), only to find that it underperforms on new tasks. Traditional stacks often cannot seamlessly swap in a better model or ensemble of models. This “one-size-fits-all” approach limits agility. As Accenture notes, organizations need flexible, modular architectures that can select the right LLM for the right task—essentially a switchboard for models to future-proof their AI systems. Conventional platforms rarely offer such flexibility, leaving companies stuck with suboptimal tools or forced to pay for expensive redevelopment to change models.

- Brittle integrations and isolated systems: Typical AI projects solve one narrow problem in isolation. They often require custom code to integrate with databases, APIs, or business workflows – integrations that are brittle and hard-coded. Scaling AI across an enterprise means connecting many systems and data sources. Traditional stacks, not built for orchestration, often break when tasked with handling complex, multi-step processes. As McKinsey observes, early LLM deployments were largely “passive,” unable to drive multi-step workflows or make decisions without human prompts. Without a robust orchestration layer, AI can’t autonomously handle branching logic or coordinate multiple steps, leading projects to falter beyond simple use cases.

- Lack of governance and feedback loops: Perhaps most critically, legacy AI stacks lack built-in governance, monitoring, and feedback mechanisms. There is often no systematic way to evaluate AI outputs in production, no feedback loop to continuously retrain or refine the model, and weak oversight of AI decisions. This results in two problems: (1) Quality plateau – the AI doesn’t improve after deployment because it isn’t learning from mistakes or new data, and (2) Risk accumulation – errors or biases go undetected until they cause a failure or compliance issue. Gartner predicts that 30% of GenAI projects will be abandoned by the end of 2025 due to issues like poor data quality and inadequate risk controls. Without strong governance, organizations either run into regulatory/safety roadblocks or lose confidence in AI, causing projects to stall. In today’s environment of heightened AI oversight (for instance, U.S. federal agencies introduced 59 AI-related regulations in 2024, more than double the prior year), the inability to govern and audit AI behavior is a showstopper for scaling.

In summary, traditional AI stacks reach a performance ceiling because they are static and isolated by design. They excel at solving a narrow problem under fixed conditions, but they struggle to adapt when conditions change or when asked to handle broader, evolving tasks. This rigidity leads to high failure rates in enterprise AI deployments—the so-called “pilot purgatory” — where promising prototypes never become scalable, reliable business solutions. To break out of this plateau, a fundamentally different approach is needed: one that emphasizes continuous learning, modular flexibility, and built-in governance. Adaptability must be engineered into the AI architecture from the ground up.


What is an AI adaptive stack?

An AI adaptive stack refers to a multi-layered AI architecture designed for continuous learning and dynamic optimization across all its layers. Instead of a static pipeline or a single monolithic model, an adaptive stack can reconfigure its workflows, swap in the best-suited models or tools, and fine-tune its outputs in response to real-time feedback. In essence, it is an AI architecture built to adapt at every level: how it orchestrates tasks, which models or algorithms it applies, and how it evaluates and improves results over time.

There are four core dimensions that characterize an AI adaptive stack:

- Model adaptability: The ability to change, retrain, or switch out models on the fly to meet current needs. This could mean continuously retraining an ML model as new data arrives, or dynamically selecting among multiple models (e.g., choosing the best language model for each query). Model adaptability ensures that no single model becomes a bottleneck; the AI’s “brain” can evolve. This flexibility greatly improves resilience and accuracy over static one-model approaches.

- Data adaptability: The ability to integrate new data sources and updated information in real time. AI adaptive stacks continuously ingest fresh data (e.g., user interactions, sensor readings, and documents) and update their knowledge base. They often employ retrieval-augmented generation (RAG) and knowledge graphs to keep the AI’s knowledge current. In effect, the AI system “knows” the latest facts and context from enterprise data streams, reducing hallucinations and stale answers. Data adaptability is crucial because it grounds AI reasoning in reality: an adaptive system stays synchronized with the business’s single source of truth.

- Workflow adaptability: The ability to reconfigure and orchestrate the sequence of tasks or steps the AI takes, based on the situation. Traditional AI might follow a fixed script; an adaptive system can adjust its workflow logic. This involves dynamic decision-making (if one approach fails, try another), conditional branching, and integrating new tools or APIs as needed. In practice, this is achieved with an intelligent orchestration layer that can route tasks to different agents or sub-processes. It’s also enabled by low-code or composable workflow design, so that adding a new step or integration doesn’t require rebuilding from scratch. Workflow adaptability means the AI can solve more complex, multi-step problems and respond to edge cases by altering its plan on the fly.

- Evaluation (Feedback) adaptability: Perhaps most distinctively, adaptive AI has feedback loops that evaluate its own performance and enable learning. This means implementing continuous monitoring, quality checks, and even self-critique mechanisms. For example, an AI system may include a secondary evaluator that scores the primary model’s output and triggers an automatic revision if it’s unsatisfactory. Over time, these iterative loops (often guided by human-in-the-loop input as well) lead to improved outcomes – essentially a built-in continuous improvement process. This evaluation layer ensures the AI system doesn’t blindly repeat mistakes; it adapts its behavior based on what works and what doesn’t, aligning with the classic Deming cycle of plan-do-check-act, but at machine speed and scale.
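The evaluator loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than ZBrain Builder’s implementation: `generate` and `evaluate` are stand-in functions (in practice they would call a primary model and a separate evaluator model), and the 0.8 score threshold and three-attempt cap are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Evaluation:
    score: float    # 0.0 (unusable) to 1.0 (ideal)
    feedback: str   # critique passed back to the generator on the next attempt

def refine(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    evaluate: Callable[[str, str], Evaluation],
    threshold: float = 0.8,
    max_attempts: int = 3,
) -> tuple[str, float, int]:
    """Generate, score, and revise until the score meets the threshold.

    Returns the accepted (or best-scoring) draft, its score, and attempts used.
    """
    feedback: Optional[str] = None
    best_draft, best_score = "", -1.0
    for attempt in range(1, max_attempts + 1):
        draft = generate(task, feedback)   # primary model (stand-in)
        result = evaluate(task, draft)     # secondary evaluator (stand-in)
        if result.score > best_score:
            best_draft, best_score = draft, result.score
        if result.score >= threshold:
            return draft, result.score, attempt
        feedback = result.feedback         # the critique drives the next revision
    return best_draft, best_score, max_attempts

# Toy stand-ins: the "model" adds a citation only after the evaluator asks for one.
def toy_generate(task: str, feedback: Optional[str]) -> str:
    answer = f"Answer to: {task}"
    return answer + " [source: policy.pdf]" if feedback else answer

def toy_evaluate(task: str, draft: str) -> Evaluation:
    if "[source:" in draft:
        return Evaluation(0.9, "ok")
    return Evaluation(0.4, "Cite the source document.")

draft, score, attempts = refine("What is the refund window?", toy_generate, toy_evaluate)
print(attempts, score)  # 2 0.9
```

Capping the number of attempts and keeping the best-scoring draft keeps the loop bounded in cost even when the evaluator is never satisfied.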

ZBrain Builder’s implementation of this concept is central to its platform: it continuously routes each request through the optimal path of models and processes, so the system can learn and improve with each interaction. Key elements of the AI adaptive stack include:

- Orchestration of multiple models (LLMs) – The stack coordinates a portfolio of AI models rather than relying on any single model. Because no single AI model excels at every task, ZBrain Builder’s orchestration layer lets users evaluate each query and route it to the model (or even a non-LLM method) best suited for the job. A task requiring precise data retrieval might invoke a domain-specific model or a knowledge base lookup, while a creative natural-language task might leverage a large generative LLM.

- Agent-based modularity – The AI adaptive stack is composed of modular, agentic components that can be assigned specific functions and then dynamically combined. Instead of a single model handling everything, ZBrain Builder employs multiple expert modules or AI agents: for example, one agent might specialize in interpreting user intent, another in performing calculations or database queries, and another in composing a final report. These agents collaborate within a governed framework, allowing complex tasks to be broken into subtasks that each agent can handle. This modular design makes the system extensible and robust: new capabilities can be added as new agents without disrupting the whole. Industry research underscores the value of this approach, noting that adaptive platforms leverage AI agents’ unique strengths (reasoning, specialized skills, cross-component collaboration) to handle strategic planning and complex scenarios.

- Automated intelligent routing – At the heart of the adaptive stack is an intelligent orchestration layer that automatically routes each task to the right modules and tools. Rather than relying on predefined, rigid workflows or having users manually choose how to process a request, the system dynamically selects the optimal workflow. It analyzes the input and context, then decides, for instance, to invoke a particular agent, call an external tool, or use a specific model based on what’s most effective. This kind of AI-driven control flow replaces hard-coded decision trees with a much more flexible approach. ZBrain Builder’s architecture provides this missing conductor: it ensures that each query takes the most efficient and accurate path through the system, improving performance and consistency without burdening the user with choices.

- Continuous evaluation and feedback loops – An AI adaptive stack doesn’t just set processes in motion – it continuously monitors and learns from outcomes. ZBrain Builder’s stack includes evaluation mechanisms at multiple points (for example, quality checks on an answer, policy compliance checks, and user feedback collection). These evaluations feed back into the system to correct and improve future performance. If an answer isn’t accurate or a decision path underperforms, the stack allows users to adjust parameters or route similar future queries differently. This closed-loop learning enables the AI solution to improve over time in a controlled way. In practice, this means the longer ZBrain applications run in an organization (with proper oversight), the smarter and more fine-tuned they become, driving up accuracy and reliability month after month.

- Multi-layer adaptability and governance – The “stack” in AI adaptive stack implies that adaptability is engineered into every layer: from the high-level workflow design, to the model and agent layer, down to the data and evaluation layer. Each layer can be adjusted independently – workflows can branch or re-order based on context, models can be switched or updated without rewriting the entire system, and new tools can be plugged in as needed. Crucially, all this adaptability is governed. ZBrain Builder’s architecture embeds governance and control at each layer of the stack: every action is logged, important decisions can require human approval or fall within predefined policies, and outputs can be filtered for compliance. This ensures that agility never comes at the expense of oversight. In other words, the AI adaptive stack is adaptable but not anarchic – it’s adaptive by design, within the guardrails of enterprise rules and ethics. Such frameworks must support not only flexible orchestration but also robust governance, data protection, and IP protection as core capabilities, underscoring that effective adaptivity goes hand in hand with accountability.
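As a minimal sketch of the routing idea (not ZBrain Builder’s routing logic), the snippet below classifies each request with a simple keyword heuristic and dispatches it to a handler from a registry. The task categories, keyword rules, and handler functions are all hypothetical placeholders; a production router might instead use a classifier model, cost and latency budgets, or evaluation scores.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]

# Hypothetical handlers standing in for real models or tools.
def knowledge_base_lookup(query: str) -> str:
    return f"[kb] facts for: {query}"

def calculator_tool(query: str) -> str:
    return f"[calc] computed: {query}"

def generative_llm(query: str) -> str:
    return f"[llm] draft for: {query}"

# Registry: task type -> handler. Swapping a model means changing one entry.
REGISTRY: Dict[str, Handler] = {
    "lookup": knowledge_base_lookup,
    "math": calculator_tool,
    "creative": generative_llm,
}

def classify(query: str) -> str:
    """Toy intent classifier based on keywords (an assumption for illustration)."""
    q = query.lower()
    if any(w in q for w in ("sum", "total", "calculate", "%")):
        return "math"
    if any(w in q for w in ("policy", "what is", "when", "who")):
        return "lookup"
    return "creative"

def route(query: str) -> str:
    """Pick a handler for the query's task type, defaulting to the generative model."""
    handler = REGISTRY.get(classify(query), generative_llm)
    return handler(query)

print(route("Calculate the total Q3 spend"))  # [calc] computed: Calculate the total Q3 spend
print(route("What is our travel policy?"))    # [kb] facts for: What is our travel policy?
print(route("Write a launch announcement"))   # [llm] draft for: Write a launch announcement
```

Keeping the mapping in a registry is what makes the routing adaptable: replacing a model or adding a task type is a data change rather than a change to the calling workflow.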

In summary, an AI adaptive stack is the architectural answer to a fast-changing AI landscape. By orchestrating multiple AI models and agents, automating decision flows, and integrating continuous learning and governance, this approach ensures that an enterprise AI platform like ZBrain Builder can improve accuracy, scale efficiently, and adapt safely as needs evolve. It provides a future-proof foundation: new AI advancements or business requirements can be incorporated into the stack’s layers rather than forcing a redesign. For CXOs and technology leaders, this means AI solutions that deliver immediate value and have the built-in agility to meet future demands – all while upholding the reliability and compliance standards the enterprise requires. The AI adaptive stack thus turns AI from a static tool into a living, evolving asset for the organization’s long-term innovation strategy.

Why adaptive architecture matters for the enterprise

For C-level executives and enterprise decision-makers, investing in an adaptive AI architecture like ZBrain Builder’s is not just a technical preference—it is rapidly becoming a strategic necessity. Here are the key business advantages of an adaptive AI approach:

- Continuous accuracy improvement: Adaptive AI systems can achieve higher levels of accuracy and quality over time compared to static models. By leveraging continuous feedback loops, they don’t settle for baseline performance at go-live—they keep learning from every interaction, error, and feedback. This is crucial in enterprise settings, where even a few percentage points of improvement in prediction accuracy or relevance can translate into millions of dollars saved or earned.

For example, a static document-processing AI agent might achieve 85% accuracy on day one and stay there, whereas an adaptive one might start at 85% and climb to 90% or more as it ingests more documents and learns from corrections. Over thousands of transactions, that difference substantially reduces manual rework and costly errors. Adaptability thus directly drives better outcomes and ROI. Industry analysts identify continuous learning as key to AI fulfilling its promise; Forrester, for instance, has described a re-emergence of continuous improvement at enterprise scale, this time fueled by AI. Enterprises that adopt this mindset will see their AI initiatives yield compounding returns rather than depreciating performance.

- Resilience to change: Business conditions change constantly, from market trends and customer behavior to regulatory rules and product lines. An adaptive AI architecture is inherently more resilient in the face of such change. Because the system can take in new data and dynamically reconfigure its workflows, it can absorb shocks and shifts without requiring a full redesign. This gives enterprises agility.

Consider customer service: if a sudden surge of support tickets comes in about a new issue, an adaptive system could detect the pattern, pull in relevant new data (e.g., an updated FAQ), and adjust its responses accordingly. A static system would continue to give outdated answers until a human reprograms it (by which time customer satisfaction may already have suffered). In a broader sense, adaptability future-proofs AI investments: the architecture can incorporate new advancements (such as a more powerful model or a new data integration) with less friction. Organizations don’t have to “rip and replace” their AI core as new technology emerges; an adaptive stack can evolve incrementally. This resilience is vital for the long-term scalability of AI. It also mitigates risk: if one component fails or becomes obsolete, the system can route around it or update it, rather than collapsing entirely.

- Enhanced governance and compliance: Ironically, adaptability – when done right – can improve governance rather than weaken it. Because AI adaptive stacks like ZBrain Builder are designed with monitoring and human oversight in mind, they provide enterprises with more visibility and control. Every change to the system (a model update, a workflow adjustment, a decision) can be tracked and audited. Audit trails and versioning in ZBrain Builder mean you know exactly which model or agent produced a given output and what data it accessed. This level of transparency is hard to achieve in ad-hoc AI projects. Moreover, adaptive AI systems can respond to new governance requirements faster. If a new regulation or ethical guideline is introduced, the AI policy rules can be centrally updated, and all agents will adapt their behavior immediately, rather than teams manually updating numerous siloed models or applications. As regulatory pressures on AI intensify (with frameworks focusing on AI transparency, fairness, and safety rolling out globally), having an architecture that can adapt to new rules is a strategic safeguard. It turns compliance from a roadblock into just another parameter the AI can optimize for. In short, adaptive architecture keeps AI aligned with business values and regulations, even as it autonomously evolves. Governance is baked into the system’s learning process, not an afterthought.

- Faster innovation and time-to-value: Adaptive AI architectures accelerate the innovation cycle. Because they are modular and continuously learning, teams can experiment, get feedback, and iterate far more rapidly.

Additionally, adaptive AI systems often come with low-code interfaces and reusable components, which means prototyping new AI-driven processes takes days or weeks, not months. The AI adaptive stack effectively becomes an innovation sandbox for the enterprise: business users and data scientists can configure new flows, try different models, and instantly see results with live data, all under proper governance. This speed matters because the competitive landscape is unforgiving – being able to deploy AI solutions quickly and improve them continuously can be a decisive edge. In business terms, adaptability equates to agility—the ability to respond to opportunities (or disruptions) faster than others.
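To make the 85% versus 90% illustration above concrete, the short calculation below assumes a hypothetical volume of 10,000 documents (a figure chosen only for illustration, not drawn from any deployment) and counts how many would need manual correction at each accuracy level.

```python
def errors(volume: int, accuracy_pct: int) -> int:
    """Documents expected to need manual correction at a given accuracy (integer math)."""
    return volume * (100 - accuracy_pct) // 100

volume = 10_000                  # hypothetical document volume
static = errors(volume, 85)      # static agent stays at 85%
adaptive = errors(volume, 90)    # adaptive agent climbs to 90%
reduction = (static - adaptive) / static

print(static, adaptive, f"{reduction:.0%}")  # 1500 1000 33%
```

A five-point accuracy gain removes a third of the rework in this example, because the error rate falls from 15% to 10%.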

In summary, an adaptive AI architecture like ZBrain Builder isn’t just a technical luxury; it directly supports strategic business goals. It boosts accuracy (meeting performance KPIs that static systems might never achieve), increases resilience (keeping operations steady amid change), reinforces governance (ensuring trust and compliance), and accelerates innovation (delivering new capabilities sooner and refining them in real-time). In aggregate, these translate to a significant competitive advantage.

For enterprise leaders, the message is clear: to unlock AI’s full potential and avoid stagnating projects, adaptability must be a core design principle.

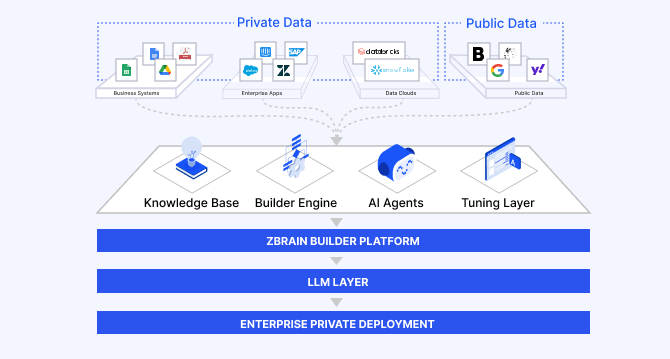

Inside ZBrain Builder’s AI adaptive stack

The ZBrain Builder platform was built from the ground up to support an AI adaptive stack for enterprises. It is organized into key capability layers, each contributing to adaptability in a specific dimension. The following table outlines the core layers of ZBrain Builder’s AI adaptive stack and what each provides:

| Layer (Intelligence) | Description | Capabilities |
|---|---|---|
| Data intelligence | Ingests and organizes enterprise data into a unified knowledge base that AI applications draw context from. | ZBrain Builder supports vector and graph indexes to manage unstructured and structured data, enabling all agents and workflows to perform RAG grounded in current enterprise knowledge. With built-in preprocessing and dynamic data updates, it ensures high accuracy, reduced hallucinations, and AI that stays aware of the latest enterprise context. |
| Model intelligence | Provides a model-agnostic LLM layer, enabling ZBrain Builder to integrate with a range of AI models (cloud APIs such as OpenAI, Anthropic, and Google Vertex AI, or on-premises models). | ZBrain Builder enables dynamic model selection, allowing organizations to use the best model per task and avoid vendor lock-in. Its flexible architecture routes queries to specialized models and supports seamless upgrades without a system overhaul. |
| Workflow (orchestration) intelligence | Orchestrates task flow through a low-code Flow interface. ZBrain Builder offers an intuitive Flow canvas with pre-built connectors (for APIs, databases, etc.) and logic blocks to design end-to-end processes. | ZBrain Builder lets you design complex workflows visually, using triggers, conditions, loops, and parallel logic. Its Agent Crew orchestration enables multiple specialized AI agents to collaborate under a supervisor, dynamically handling tasks such as querying databases and summarizing results, which keeps automation adaptive and resilient as business needs evolve. |
| Evaluation & memory | Provides continuous monitoring, feedback, and learning capabilities. ZBrain Builder has a built-in evaluation suite with a Monitor module that benchmarks agent performance continuously. | ZBrain Builder enables continuous self-optimization through automated testing, evaluator-critic loops, and memory. Agents can validate outputs, retry until quality thresholds are met, and learn from past interactions, so workflows improve over time using feedback, stored context, and long-term knowledge. |
| Governance & security | (Cross-cutting layer) Ensures that adaptability does not come at the expense of control. ZBrain Builder has built-in governance features, such as role-based access control (RBAC), that determine who can create, deploy, or modify agents and Flows. | ZBrain Builder ensures enterprise-grade governance with full audit logs, guardrails, and human-in-the-loop checkpoints. Dashboards offer visibility into usage and outcomes, enabling safe, transparent, and policy-aligned AI operations even as the system evolves. |
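To illustrate what the data intelligence layer does during retrieval, here is a deliberately small in-memory vector index. It is a sketch under stated assumptions, not ZBrain Builder’s indexing code: `embed` is a toy sparse term-frequency vector (a real deployment would use an embedding model and a vector database), and upserting by document ID stands in for the dynamic data updates described in the table.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse term-frequency vector (illustrative only)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryIndex:
    def __init__(self) -> None:
        self._docs: Dict[str, Tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert or replace a document so retrieval reflects the latest version."""
        self._docs[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 2) -> List[Tuple[str, float]]:
        """Return the k most similar document IDs with their scores."""
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, (_, vec) in self._docs.items()]
        return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

    def text(self, doc_id: str) -> str:
        return self._docs[doc_id][0]

index = InMemoryIndex()
index.upsert("refunds", "Refunds are issued within 14 days of purchase.")
index.upsert("shipping", "Standard shipping takes 5 business days.")
index.upsert("refunds", "Refunds are issued within 30 days of purchase.")  # policy update

question = "How many days until refunds are issued?"
top_id, _ = index.search(question, k=1)[0]
context = index.text(top_id)
# The grounded prompt that would be sent to an LLM in a RAG step.
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(context)  # Refunds are issued within 30 days of purchase.
```

Because the update replaces the old entry instead of adding a second one, the stale 14-day policy can no longer be retrieved.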

As shown above, ZBrain Builder’s architecture covers the full spectrum needed for adaptive AI. It unifies what otherwise might require many disparate tools – a workflow builder, an MLOps platform, a vector database, an evaluation framework, etc. – into one cohesive stack. This modular, unified design lets teams start small (perhaps by deploying a single AI agent or an automated workflow for one task) and scale up in complexity by enabling additional layers or adding more agents and Flows. Because all layers share the same platform, data flows freely (agents query the knowledge base directly), and monitoring is consistent end to end.

Critically, ZBrain Builder’s adaptive stack doesn’t force a choice between human control and AI autonomy – it balances both. Routine, predictable parts of a process can be handled by structured Flows (with full audit trails), while creative or unstructured tasks are handled by agents with reasoning capabilities. This hybrid of deterministic workflows and autonomous agents means the system can handle unpredictable situations (via AI agent reasoning) without sacrificing governance (since the flows provide checkpoints and structure). The result is an AI platform that is adaptable by design, but also enterprise-grade in terms of security and oversight.

In the next section, we’ll delve into how ZBrain Builder achieves adaptivity in action—examining features such as dynamic routing, agent modularity, feedback loops, and human-in-the-loop control that collectively make the system self-optimizing and intelligent.

How ZBrain Builder makes the AI stack adaptive

Adaptive orchestration with ZBrain Builder

At the heart of the adaptive stack is ZBrain Builder’s low-code orchestration engine, which transforms isolated AI operations into modular, context-aware, and dynamically executable workflows. Rather than relying on static chains of prompts or locked-in model logic, ZBrain Builder enables workflows that adapt intelligently at runtime based on input, task complexity, performance feedback, or business conditions.

ZBrain Builder makes the AI stack adaptive through the following core capabilities:

Flow-based orchestration for dynamic routing

- Visual low-code environment to design intelligent flows with conditional logic, parallel branches, and failover strategies.

- Tasks are dynamically routed to the most appropriate model or agent based on real-time input characteristics (e.g., language, format, task type).

- Supports branching logic such as: “If the user input is in Spanish, first route it through a translation agent or multilingual model, then pass the translated query to the main LLM for response.”

- Enables fallback routes if a model fails, ensuring robust and uninterrupted execution.
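The Spanish-input branch and the fallback route described above can be expressed as ordinary control flow. The sketch below is hypothetical: `detect_language` is a naive heuristic, and `translate`, `primary_llm`, and `backup_llm` are stand-ins for whatever translation agent and models a Flow would actually call. The simulated outage exists only to exercise the fallback path.

```python
from typing import Callable, List

class ModelUnavailable(Exception):
    """Raised by a model stand-in to simulate an outage or timeout."""

def detect_language(text: str) -> str:
    # Naive heuristic for illustration; a real Flow would use a language-ID model.
    markers = {"hola", "gracias", "factura", "pedido", "dónde", "está"}
    words = set(text.lower().strip("¿?!¡.").split())
    return "es" if "¿" in text or words & markers else "en"

def translate(text: str) -> str:
    return f"(translated to English) {text}"   # stand-in translation agent

def primary_llm(prompt: str) -> str:
    raise ModelUnavailable("primary model timed out")  # simulated outage

def backup_llm(prompt: str) -> str:
    return f"backup answer for: {prompt}"

def call_with_fallback(prompt: str, models: List[Callable[[str], str]]) -> str:
    """Try each model in priority order; move to the next one on failure."""
    failures = []
    for model in models:
        try:
            return model(prompt)
        except ModelUnavailable as exc:
            failures.append(f"{model.__name__}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(failures))

def run_flow(user_input: str) -> str:
    # Branch: translate Spanish input first, then send it to the main model chain.
    query = translate(user_input) if detect_language(user_input) == "es" else user_input
    return call_with_fallback(query, [primary_llm, backup_llm])

print(run_flow("¿Dónde está mi pedido?"))
# backup answer for: (translated to English) ¿Dónde está mi pedido?
```

Keeping the fallback order as a plain list means adding or reprioritizing a model does not change the branching logic.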

Crew-based modular agent execution

- Workflows are executed via a team of modular agents, each with defined responsibilities (e.g., data extraction, analysis, summarization).

- A supervisor agent coordinates task delegation, validates intermediate outputs, and maintains logical task progression.

- Agents can be added, removed, or replaced at runtime without disrupting the larger workflow — enabling agility and experimentation.

- Mirrors human teamwork: ZBrain Builder’s orchestration engine behaves like a cross-functional AI crew, collaborating in real time to complete complex processes.
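The supervisor pattern can be sketched as a coordinator that runs worker agents in a planned order, validates each intermediate result before handing it on, and lets workers be registered or replaced without touching the rest of the crew. This is an illustrative sketch with hypothetical agent names and a toy validation rule, not ZBrain Builder’s Agent Crew API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Agent = Callable[[str], str]

@dataclass
class Supervisor:
    agents: Dict[str, Agent] = field(default_factory=dict)
    plan: List[str] = field(default_factory=list)
    trace: List[str] = field(default_factory=list)

    def register(self, role: str, agent: Agent) -> None:
        """Add or replace the agent for a role; the plan itself is unchanged."""
        self.agents[role] = agent

    def run(self, task: str) -> str:
        payload = task
        for role in self.plan:
            output = self.agents[role](payload)
            if not output.strip():                # toy validation of intermediate output
                raise ValueError(f"{role} returned an empty result")
            self.trace.append(f"{role}: ok")
            payload = output                      # hand off to the next agent
        return payload

# Hypothetical worker agents.
def extractor(text: str) -> str:
    return ", ".join(w for w in text.split() if w.isdigit())

def analyst(numbers: str) -> str:
    values = [int(n) for n in numbers.split(", ")]
    return f"total={sum(values)}"

def summarizer(analysis: str) -> str:
    return f"Summary: {analysis}"

crew = Supervisor(plan=["extract", "analyze", "summarize"])
crew.register("extract", extractor)
crew.register("analyze", analyst)
crew.register("summarize", summarizer)
print(crew.run("Invoices 120 and 80 are overdue"))  # Summary: total=200

# Swap in a different summarizer without touching the other agents.
crew.register("summarize", lambda analysis: f"REPORT {analysis.upper()}")
```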

ZBrain Builder empowers enterprises to orchestrate modular, observable, and continuously tunable agentic operations. This architecture doesn’t just automate tasks — it creates workflows that learn, optimize, and adapt over time while staying aligned to enterprise rules and risk thresholds.

Plug-and-play flexibility with best-in-class tools

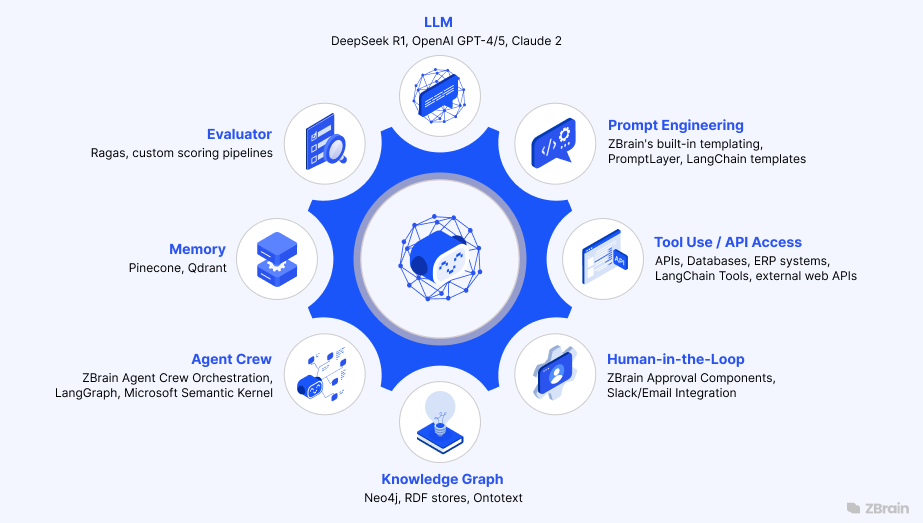

ZBrain Builder’s AI adaptive stack is designed as a modular, composable platform that integrates best-in-class models, APIs, evaluators, memory stores, and vector databases. Whether optimizing for reasoning, retrieval, voice, or evaluation, ZBrain Builder allows teams to connect the right tool for the right task—while ensuring the system remains orchestrated, observable, and extensible.

Here’s how ZBrain Builder supports plug-and-play adaptability across all layers:

Specialized models and reasoners

- Integrate high-performance LLMs like DeepSeek R1 for complex reasoning or use OpenAI Whisper for speech-to-text workflows.

- Leverage ElevenLabs for neural text-to-speech, or switch to lighter models depending on use case, latency, or cost.

Modular evaluation and memory integration

- Plug in Ragas to score LLM responses with automated metrics (such as faithfulness and answer relevancy) and custom criteria.

- Add Mem0 to persist conversation history, domain context, or task memory across sessions.

Knowledge-rich retrieval with vectors and ontologies

- Vector databases such as Pinecone can be used for fast, scalable retrieval, ideal for embedding-heavy RAG workflows; a low-latency inference provider such as Groq can be paired with them to keep generation fast.

- ZBrain Builder allows agents to query these databases based on context, score, or workflow stage.

- Use graph ontologies to enrich retrieval with semantic structure, enabling relationships between entities, intents, and outcomes to be understood at runtime.

- Example: Link “Product A” → “Policy X” → “Compliance Action” to answer multi-hop enterprise queries.
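The Product A → Policy X → Compliance Action example is a multi-hop query: the answer is reached by following typed relationships rather than by matching text. A minimal sketch, assuming a small hand-written edge list with hypothetical relation names, is a breadth-first search that returns the path it followed. This is not how ZBrain Builder stores or queries its graphs.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical ontology edges: subject -> [(relation, object)]
GRAPH: Dict[str, List[Tuple[str, str]]] = {
    "Product A": [("governed_by", "Policy X")],
    "Policy X": [("requires", "Compliance Action"), ("owned_by", "Legal Team")],
    "Compliance Action": [],
    "Legal Team": [],
}

def find_path(start: str, goal: str, max_hops: int = 3) -> Optional[List[str]]:
    """Breadth-first search returning the entity/relation path from start to goal."""
    queue = deque([(start, [start])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if (len(path) - 1) // 2 >= max_hops:   # each hop adds a relation and an entity
            continue
        for relation, neighbor in GRAPH.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [relation, neighbor]))
    return None

print(" -> ".join(find_path("Product A", "Compliance Action")))
# Product A -> governed_by -> Policy X -> requires -> Compliance Action
```

Returning the full path, not just the endpoint, gives an agent an explainable chain it can cite when answering the enterprise query.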

Orchestration layer interoperability

- ZBrain Builder can interoperate with orchestration frameworks like LangChain, Google ADK, or Microsoft Semantic Kernel under the hood.

- Supports agentic execution, letting AI agents use tools, call APIs, or consult memory graphs—all orchestrated natively via ZBrain Builder.

Future-proofed by design

- Tools can be added, replaced, or deprecated without workflow rewrites—thanks to ZBrain Builder’s runtime flow engine and abstraction layers.

- AI architects remain in control of what’s active, what’s prioritized, and what’s measured—ensuring architecture evolves without compromising reliability.
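One common way to get this “swap without rewrites” property is to have workflows depend on a small interface instead of a concrete vendor SDK. The sketch below uses a Python `Protocol`; the `TextModel` interface, the two provider classes, and the slot registry are hypothetical illustrations, not ZBrain Builder’s abstraction layer or any vendor’s client library.

```python
from typing import Dict, Protocol

class TextModel(Protocol):
    name: str
    def complete(self, prompt: str) -> str: ...

class ReasoningModel:
    """Stand-in for a heavyweight reasoning model."""
    name = "reasoning-large"
    def complete(self, prompt: str) -> str:
        return f"[{self.name}] step-by-step answer to: {prompt}"

class LightweightModel:
    """Stand-in for a cheaper, lower-latency model."""
    name = "fast-small"
    def complete(self, prompt: str) -> str:
        return f"[{self.name}] short answer to: {prompt}"

class ModelRegistry:
    def __init__(self) -> None:
        self._slots: Dict[str, TextModel] = {}

    def bind(self, slot: str, model: TextModel) -> None:
        """Point a named slot at a model; workflows only know the slot name."""
        self._slots[slot] = model

    def get(self, slot: str) -> TextModel:
        return self._slots[slot]

def summarize_ticket(registry: ModelRegistry, ticket: str) -> str:
    # Workflow step: depends on the "summarizer" slot, not on a specific vendor.
    return registry.get("summarizer").complete(f"Summarize: {ticket}")

registry = ModelRegistry()
registry.bind("summarizer", ReasoningModel())
print(summarize_ticket(registry, "Login fails after reset"))
# [reasoning-large] step-by-step answer to: Summarize: Login fails after reset

registry.bind("summarizer", LightweightModel())  # swap for cost or latency
print(summarize_ticket(registry, "Login fails after reset"))
# [fast-small] short answer to: Summarize: Login fails after reset
```

The workflow function never changes; only the binding does, which is the property the bullets above describe.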

By integrating semantic knowledge (graphs) and dense retrieval (vectors) alongside best-in-class model and memory integrations, ZBrain Builder makes its adaptive stack not only modular but also intelligent and contextual. This is what enables workflows to understand, reason, retrieve, and act fluidly—on demand.

Native intelligence orchestration in ZBrain Builder

The power of ZBrain Builder’s AI adaptive stack lies in its native orchestration and governance backbone, which ensures adaptability is not only modular but reliable, transparent, and enterprise-grade. ZBrain Builder serves as the central control system, orchestrating agents, tools, and flows across the stack with precision.

Here’s how ZBrain Builder’s native modules enable composability with control:

Flow interface for visual, low-code development

- Offers a low-code interface for visually building complex AI workflows with pre-built components.

- Supports rich logic structures: sequences, branching, looping, and conditional paths.

- Ideal for modeling agent-driven approval Flows, compliance checkpoints, or multi-step automation pipelines.

- Updates and optimizations are non-disruptive—Flows can be adjusted in real time without downtime or re-architecture.

Agent Crew framework for modular execution

- Enables agentic collaboration using structured supervisor-worker hierarchies.

- Each agent can operate autonomously: reasoning with LLMs, accessing APIs, triggering tools, or invoking sub-agents.

- New agents can be added or existing ones replaced without disrupting the overall system—ideal for scaling use cases or swapping in better tools.

- Example: ZBrain Builder can spin up a clause extractor agent, a risk evaluator agent, and a summarizer—all in a governed, trackable crew.
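A supervisor-worker crew like the contract example can be approximated in a few lines. This sketch assumes stub worker functions (`clause_extractor`, `risk_evaluator`, and `summarizer` are hypothetical stand-ins for LLM-backed agents) and a supervisor that simply runs them in order and records each step. A real supervisor would usually choose the next worker dynamically.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical worker agents; real agents would call LLMs, APIs, or tools.
def clause_extractor(doc: str) -> List[str]:
    return [s.strip() for s in doc.split(";") if s.strip()]

def risk_evaluator(clauses: List[str]) -> Dict[str, str]:
    return {c: ("high" if "unlimited liability" in c.lower() else "low") for c in clauses}

def summarizer(risks: Dict[str, str]) -> str:
    high = sum(1 for r in risks.values() if r == "high")
    return f"{len(risks)} clauses reviewed, {high} high-risk"

@dataclass
class Supervisor:
    """Runs worker agents in sequence and records each step for traceability."""
    workers: List[Callable[[Any], Any]]
    trace: List[str] = field(default_factory=list)

    def run(self, task: Any) -> Any:
        result = task
        for worker in self.workers:
            result = worker(result)
            self.trace.append(worker.__name__)  # audit trail of which agent ran
        return result

crew = Supervisor([clause_extractor, risk_evaluator, summarizer])
contract = "Payment due in 30 days; Vendor accepts unlimited liability; Governing law: NY"
print(crew.run(contract))
print(crew.trace)
```

Because the supervisor only holds a list of workers, replacing the risk evaluator with a better one is a one-line change that leaves the rest of the crew untouched.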

Built-in evaluation and optimization layer

- Automatically captures metrics like response time, token usage, satisfaction score, and more for every agent run.

- Feeds dashboards for visibility and analysis—teams can monitor success rates, flag anomalies, and spot optimization opportunities.

- Agents or Flows with poor performance can be fine-tuned or swapped quickly, ensuring the stack learns and improves continuously.
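Per-run metric capture of this kind is often implemented as a wrapper around each agent call. The sketch below is hypothetical: the `track_metrics` decorator, the in-memory `RUN_LOG`, and the whitespace-based token count are illustrative assumptions, not ZBrain Builder's actual telemetry.

```python
import time
from functools import wraps
from statistics import mean

RUN_LOG = []  # in-memory stand-in for a metrics store that feeds dashboards

def track_metrics(agent_name):
    """Hypothetical decorator: records latency, a rough token count, and success per run."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            output = fn(prompt)
            RUN_LOG.append({
                "agent": agent_name,
                "latency_s": time.perf_counter() - start,
                # Whitespace split is a crude proxy; real systems use the model's tokenizer.
                "tokens": len(prompt.split()) + len(output.split()),
                "success": bool(output.strip()),
            })
            return output
        return wrapper
    return decorator

@track_metrics("echo-agent")
def echo_agent(prompt: str) -> str:
    return prompt.upper()

for p in ["hello world", "summarize this report", ""]:
    echo_agent(p)

runs = [r for r in RUN_LOG if r["agent"] == "echo-agent"]
success_rate = sum(r["success"] for r in runs) / len(runs)
print(f"runs={len(runs)} success_rate={success_rate:.2f} "
      f"avg_tokens={mean(r['tokens'] for r in runs):.1f}")
```

Aggregates such as success rate per agent are what make it possible to spot an underperforming agent and swap it out.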

Internal feedback loops and evaluator agents

- ZBrain Builder supports evaluator agents—modules that score, critique, or approve generated outputs.

- These agents enforce quality gates within the workflow: low-quality outputs can trigger automatic retries or alternate routing.
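The retry-then-reroute behavior of an evaluator gate can be sketched as follows. Everything here is a hypothetical stand-in: `keyword_overlap` is a toy evaluator (a real setup might use an LLM judge or a framework such as Ragas), and the two model functions are stubs in which a retry with a modified prompt is simulated by the `attempt` argument.

```python
from typing import Callable, List, Tuple

Model = Callable[[str, int], str]  # (query, attempt) -> output

def keyword_overlap(query: str, output: str) -> float:
    """Toy evaluator: fraction of query words that appear in the output."""
    q = set(query.lower().split())
    return len(q & set(output.lower().split())) / len(q) if q else 0.0

def quality_gate(models: List[Model], evaluator: Callable[[str, str], float],
                 query: str, threshold: float = 0.7,
                 max_retries: int = 2) -> Tuple[str, float, int]:
    """Retry each model up to max_retries times, then route to the next one."""
    attempts = 0
    for generate in models:
        for attempt in range(max_retries + 1):
            attempts += 1
            output = generate(query, attempt)
            score = evaluator(query, output)
            if score >= threshold:
                return output, score, attempts
    # Nothing passed the gate: surface the failure so it can be escalated for review.
    raise RuntimeError(f"No output met threshold {threshold} after {attempts} attempts")

def primary_model(query: str, attempt: int) -> str:
    # Stub: the first try is vague; a retry with a modified prompt does better.
    return "Not sure." if attempt == 0 else f"Per policy: {query}"

def fallback_model(query: str, attempt: int) -> str:
    return f"Fallback answer on {query}"

print(quality_gate([primary_model, fallback_model], keyword_overlap,
                   "refund window for annual plans"))
```

Ordering the `models` list encodes the routing preference: cheaper or preferred models go first, and alternates are only reached when retries are exhausted.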

Governance is built into every layer

- Every task, response, and decision is centrally logged and traceable—supporting audits and compliance reporting.

- Offers enterprise controls like role-based access, prompt versioning, and policy-based routing.

- Enables human-in-the-loop (HITL) checkpoints at any step—for example, escalating outputs to legal or compliance teams when thresholds are crossed.

Human oversight by design

- Workflows can require manual review at critical stages—such as policy decisions, risk ratings, or public disclosures.

- Supervisor agents can escalate to humans based on risk scores, confidence thresholds, or exception logic.

- Human corrections are captured as implicit feedback, forming part of the system’s learning loop.
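Threshold-based escalation and feedback capture can be expressed as a small routing rule. In this sketch the `Decision` fields, the 0.8 confidence cutoff, and the `FEEDBACK` list are illustrative assumptions rather than ZBrain Builder settings. Low-confidence or high-risk outputs are held for a person, and any human correction is stored for later learning.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Decision:
    output: str
    confidence: float  # 0..1, reported by the agent or an evaluator
    risk: str          # e.g. "low", "medium", "high"

FEEDBACK: List[dict] = []  # human corrections kept as implicit feedback

def needs_review(d: Decision, min_confidence: float = 0.8) -> bool:
    """Hypothetical escalation rule: low confidence or high risk goes to a human."""
    return d.confidence < min_confidence or d.risk == "high"

def finalize(d: Decision, human_override: Optional[str] = None) -> str:
    if not needs_review(d):
        return d.output                    # auto-approved
    if human_override is None:
        return "PENDING_HUMAN_REVIEW"      # workflow pauses at the checkpoint
    if human_override != d.output:
        FEEDBACK.append({"ai": d.output, "human": human_override})
    return human_override

print(finalize(Decision("Approve", 0.95, "low")))
print(finalize(Decision("Approve", 0.60, "low")))
print(finalize(Decision("Approve", 0.95, "high"), human_override="Reject"))
print(FEEDBACK)
```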

Together, these native orchestration modules ensure ZBrain Builder isn’t just flexible—it’s governed, observable, and tunable at every level. Adaptation happens in a controlled environment, with guardrails that align AI behavior to enterprise policy, risk, and oversight requirements. This is how ZBrain Builder turns composability into competitive advantage—without compromising trust or control.

In summary, ZBrain Builder makes the AI stack adaptive by combining composability with control. Its architecture lets enterprises mix and match the best models, tools, and agent flows for each task, and dynamically optimize how those components interact as conditions change. Crucially, all this happens within a structured orchestration framework that provides transparency, safety, and governance at every step. Enterprises can decide which components to plug in, which performance metrics to optimize, and where to enforce human review or policy checks. This ensures that as the AI stack adapts and evolves, it remains aligned with business objectives and compliance standards. ZBrain Builder essentially offers structured adaptability with guardrails – an AI platform that is as flexible and innovative as you need, yet as secure and controllable as you demand. Such a balance makes ZBrain Builder a credible choice for enterprise AI leaders and architects who want agility without sacrificing oversight, allowing them to harness advanced AI capabilities in a reliable, enterprise-ready manner.


The strategic edge: ZBrain vs. conventional AI platforms

In evaluating AI platforms, enterprise leaders should consider how adaptable each solution is. Many AI offerings on the market today still reflect a “first-generation” paradigm: they provide a model or a narrow solution that works in a silo, often requires extensive custom code to integrate, and has limited ability to learn after deployment. ZBrain Builder’s AI adaptive stack represents a “next-generation” approach. Let’s compare, at a high level, ZBrain Builder’s adaptive, modular architecture with conventional AI platforms and point solutions:

- Continuous learning vs. static performance: Traditional AI platforms often treat model training as a one-off project – once the model is deployed, it may not improve unless a team manually gathers new training data and runs an offline retraining cycle. In contrast, ZBrain Builder is built for continuous learning in production. Its evaluation loops and feedback ingestion mean the system is always tuning itself. This leads to performance that can start strong and then improve, whereas a static system might degrade or, at best, remain stable. The strategic edge here is obvious: improvements in accuracy and quality over time translate into competitive advantage. In a world where data volumes and scenarios explode, an AI that doesn’t learn continuously will fall behind one that does.

- Modular and composable vs. one-size-fits-all: Many conventional AI platforms deliver a monolithic model or a closed service (e.g., a chatbot that only uses its built-in model). They can be hard to adapt if your needs don’t match exactly, which leads to vendor lock-in. ZBrain Builder, by contrast, offers a modular stack – you can choose models (even mixing vendors or open-source models), configure custom agents, design workflows around your specific process, and integrate your proprietary data. It’s more like a construction kit than a finished house. This composability is a significant advantage for enterprises, enabling tailored solutions without starting from scratch. It also means faster adaptation to new opportunities: if a new AI model comes out that’s excellent at, say, code generation, a ZBrain user can plug it into their stack immediately, while a less flexible platform might not support it until the vendor updates (if at all). Being able to incorporate the latest technology (or your own secret-sauce model) gives you an innovation lead over competitors stuck with a fixed tool.

- Unified platform vs. fragmented tools: Organizations using conventional approaches often end up stitching together multiple tools – one for data prep, another for ML model serving, another for workflow automation, plus custom scripts for monitoring. This fragmentation is not only inefficient but also introduces failure points and inconsistencies (for example, monitoring might cover the model but not the end-to-end business process). ZBrain Builder’s AI adaptive stack provides a one-stop platform where all these pieces are designed to work in harmony. The benefit is greater cohesion and visibility: a CXO can get a dashboard view of the entire AI operation, including incoming data, decisions being made, outcomes, and feedback, rather than checking multiple systems. A unified adaptive AI platform ensures that improvements in one part (e.g., a better model) propagate through the whole workflow, and that overarching governance catches issues in any part. In strategic terms, this translates into lower total cost of ownership and faster time to value, since you’re not gluing together pieces for each new AI project.

- Built-in governance vs. afterthought controls: Conventional AI solutions often require bolting on governance after deployment – e.g., manually adding audit logs, writing separate bias-detection scripts, or relying on human processes to catch mistakes. This reactive approach is prone to gaps and often slows down AI adoption. ZBrain Builder’s AI stack was built with governance from the ground up. Features like RBAC, audit trails, version control, and human approval loops are integral, not optional. This means enterprises can move faster with ZBrain Builder because compliance and risk checks are not hurdles; they are features.

  - For example, when deploying a new AI agent in ZBrain Builder, you can immediately specify what data it’s allowed to access and what conditions should trigger a human review, all within the platform. A platform lacking these controls would require custom development or might not allow that level of control at all. The strategic edge is speed with safety: you deploy advanced AI capabilities with guardrails that satisfy stakeholders (legal, compliance, IT) from day one. This kind of built-in governance can help organizations reach high AI maturity, and maturity pays off in durability: in a Gartner survey, 45% of organizations with high AI maturity said their AI initiatives remain in production for three years or more.

- Agentic and proactive vs. reactive AI: Many AI platforms essentially provide reactive models – they respond to an input with an output (e.g., an API call returns an answer), but they do not drive processes autonomously. ZBrain Builder’s architecture is agentic – its AI agents can initiate actions, call tools, and make decisions within the scope you define. This means you can achieve autonomous workflow automation, not just point predictions.

  - For instance, a traditional NLP API might answer a question about a document; a ZBrain agent can not only answer but also file the document in the right folder, email a summary to a colleague, and set a reminder to follow up – all orchestrated in one flow. This proactivity is a major differentiator. It aligns with what McKinsey calls the coming “age of AI agents” that can execute complex workflows, rather than just assist with single tasks. Companies leveraging AI agents can unlock far more value (imagine automating entire audit processes or customer onboarding journeys) than those using AI merely as an assistive tool. It’s the difference between AI as a clever widget and AI as a digital workforce. ZBrain Builder gives you the latter: a strategic asset that can transform operations, not just enhance them incrementally.

Ultimately, the strategic edge of ZBrain Builder’s AI adaptive stack comes down to adaptivity itself: the platform and the solutions built on it don’t stagnate. They keep up with your business and the world. Conventional AI platforms that lack this adaptability might deliver an early win (a good demo or short-term result) but often hit a wall. ZBrain Builder is designed to push through those walls, enabling AI that continues to add value. In competitive markets, this can be the difference between being a leader and an also-ran in AI-driven innovation. The organizations that implement adaptive AI architectures will be positioned not only to solve current problems more effectively but also to capitalize on change, turning shifting circumstances into an advantage, because their AI can learn and pivot faster than the rest.

Endnote

As we have seen, adaptability is the defining trait of the next generation of enterprise AI. Organizations that harness adaptive AI architectures will lead their industries, while those clinging to static solutions risk falling behind in innovation, agility, and performance. The technology and methodologies to get started are here today. ZBrain’s AI adaptive stack provides a proven framework for building AI systems that are dynamic, intelligent, and self-optimizing from the ground up. This is not a leap of faith into unknown territory – it’s a strategic move backed by industry research and real-world success stories.

For CXOs and enterprise tech leaders, the call to action is clear: make adaptability a core criterion in your AI strategy. Evaluate your current AI initiatives – are they learning and improving, or are they static deployments at risk of stagnation? Challenge your teams to infuse adaptive mechanisms (feedback loops, modular models, etc.) into new projects. Importantly, choose platforms and partners that enable this approach. ZBrain Builder is a compelling option, designed with exactly these goals in mind. By leveraging ZBrain Builder’s unified platform, you can accelerate the development of AI solutions that don’t just automate complex processes but keep getting better at them. Imagine your key operations – from customer service to supply chain to risk management – powered by AI that continuously adapts to make smarter decisions every day. That is the promise of the adaptive stack.

The evolution of AI follows a clear trajectory: from static to adaptive, from narrow to holistic, from off-the-shelf to tailor-made, and from one-shot deployment to continuous improvement. ZBrain Builder’s AI adaptive stack encapsulates this evolution, offering enterprises a pathway to leap into the era of AI that is as fluid and learning-oriented as the organizations it serves. The next evolution in enterprise AI is here – those who seize it will redefine what their businesses can achieve. Now is the time to embrace AI that adapts, and to make adaptability the cornerstone of your AI strategy.

Embrace adaptability at the core of your AI strategy. Leverage ZBrain Builder to build AI systems that learn, evolve, and align with your business in real time. Book a demo today!


Author’s Bio

An early adopter of emerging technologies, Akash leads innovation in AI, driving transformative solutions that enhance business operations. With his entrepreneurial spirit, technical acumen and passion for AI, Akash continues to explore new horizons, empowering businesses with solutions that enable seamless automation, intelligent decision-making, and next-generation digital experiences.


Frequently Asked Questions

What is an AI adaptive stack, and why does it matter now?

An AI adaptive stack is a modular, multi-layered architecture designed to dynamically optimize how AI systems process inputs, select tools or models, and evolve over time. It enables enterprises to build intelligent systems that are not statically bound to a single model or pipeline, but instead adapt to changes in data, use case complexity, and organizational needs. With AI rapidly evolving and enterprise contexts shifting frequently, adaptability is now essential—not optional—for achieving long-term accuracy, compliance, and ROI.

How is ZBrain Builder’s AI adaptive stack different from traditional AI platforms?

ZBrain Builder’s AI stack stands apart by combining low-code Flow orchestration, agentic reasoning, runtime model routing, embedded evaluation, and governance—all within a modular, pluggable framework. Unlike static RAG pipelines or single-model deployments, ZBrain Builder enables organizations to reconfigure agents, swap models, and reroute logic on the fly—without rebuilding workflows. This makes it not just intelligent, but resilient and enterprise-ready.

What business outcomes can a CXO expect from using ZBrain Builder?

CXOs can expect measurable improvements in four areas:

- Accuracy: Adaptive routing and evaluation loops ensure high-quality outputs as inputs vary.

- Scalability: Modular architecture supports new use cases and models without re-engineering.

- Governance: Transparent decision paths, logging, and human-in-the-loop controls ensure compliance.

- Efficiency: Automated feedback loops and runtime optimization reduce the need for manual model parameter adjustments.

Does ZBrain Builder support third-party tools like LangChain, OpenAI, or Pinecone?

Yes. ZBrain Builder is architected as a composable platform that supports integration with best-in-class external tools. It can incorporate models from providers such as OpenAI or DeepSeek, vector databases such as Pinecone, evaluation frameworks such as Ragas, and orchestration frameworks such as LangChain or Google ADK. These components can be swapped or upgraded as needed, giving enterprises full flexibility without vendor lock-in.

What role does governance play in ZBrain’s AI adaptive stack?

Governance is foundational to ZBrain’s architecture. It offers centralized prompt management, policy-based routing, audit trails, and human-in-the-loop checkpoints—all built into the Flow and Crew orchestration engines. Every decision made by an agent or model can be logged, reviewed, and traced back to source data or logic paths. This makes ZBrain solutions especially suited for regulated industries such as healthcare, finance, and legal.

Can ZBrain Builder support hybrid AI operations involving both humans and agents?

Absolutely. ZBrain Builder allows workflows to include designated human-in-the-loop steps at any stage. For instance, after agents complete document analysis, the flow can pause to allow a human to validate or override the AI’s recommendation before continuing. These checkpoints are configurable and logged, enabling AI automation with full enterprise oversight.

How does ZBrain Builder enable continuous learning and optimization without retraining models?

ZBrain Builder employs real-time evaluation agents and a monitoring module that assess the quality of outputs on every run. If an answer doesn’t meet predefined thresholds, the system can retry with modified prompts, escalate to another agent, or log the outcome for later review. Over time, these micro-adjustments form a continuous learning loop, improving performance without manual retraining or hard-coded rules.

Which enterprise use cases are ideal for ZBrain?

ZBrain is ideal for enterprise use cases that demand high accuracy, adaptability, and strong governance. These typically involve scenarios that require complex decision-making, dynamic information retrieval, and cross-functional workflows. Examples include automated document analysis, multilingual support systems, regulatory compliance workflows, and intelligent enterprise search. Its architecture is especially effective in environments where processes span multiple data sources, tools, or compliance requirements.

How does ZBrain Builder manage performance at runtime across agents and models?

ZBrain Builder’s Flow and Agent Crew system allows real-time performance tracking. Each agent logs runtime metrics, including latency, token usage, and satisfaction scores. These metrics are fed into Flow and can trigger conditional logic (e.g., use a faster fallback model under load). This approach ensures both observability and runtime optimization, scaling performance without compromising control.
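The example of switching to a faster fallback model under load can be sketched as a router that watches recent latencies. The `LatencyRouter` class, the model names, and the 2-second p95 budget below are hypothetical. Python's standard `statistics.quantiles` is used to estimate the 95th percentile over a sliding window.

```python
from collections import deque
from statistics import quantiles

class LatencyRouter:
    """Hypothetical router: use a faster fallback model when recent p95 latency is too high."""

    def __init__(self, primary: str, fallback: str, max_p95_s: float, window: int = 20):
        self.primary, self.fallback = primary, fallback
        self.max_p95_s = max_p95_s
        self.latencies: deque = deque(maxlen=window)  # only the most recent runs count

    def record(self, latency_s: float) -> None:
        self.latencies.append(latency_s)

    def p95(self) -> float:
        if len(self.latencies) < 2:  # quantiles needs at least two data points
            return 0.0
        return quantiles(self.latencies, n=20)[-1]  # last of 19 cut points = 95th percentile

    def choose(self) -> str:
        return self.fallback if self.p95() > self.max_p95_s else self.primary

router = LatencyRouter(primary="large-model", fallback="small-model", max_p95_s=2.0)
for latency in [0.5] * 10:
    router.record(latency)
print(router.choose())  # latencies within budget: stays on the primary model
for latency in [5.0] * 10:
    router.record(latency)
print(router.choose())  # sustained slowdown: routes to the fallback
```

Using a percentile over a bounded window, rather than a single slow run, keeps the router from flapping between models on isolated spikes.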

What makes ZBrain Builder a future-proof investment for enterprise AI strategies?

ZBrain Builder’s AI adaptive stack is model-agnostic, modular, and policy-aware—meaning it’s built to evolve with the enterprise. As new models, evaluators, or compliance needs emerge, they can be added or swapped into existing workflows without rewriting logic or changing architecture. This flexibility reduces technical debt, enables rapid innovation, and ensures the AI stack remains aligned with shifting business goals and tech landscapes.

How do we get started with ZBrain for AI development?

To begin your AI journey with ZBrain:

- Contact us at hello@zbrain.ai

- Or fill out the inquiry form on zbrain.ai

Our dedicated team will work with you to evaluate your current AI development environment, identify key opportunities for AI integration, and design a customized pilot plan tailored to your organization’s goals.
