Enabling automated AI workflows with ZBrain Builder: Flows, LLM-based agents and orchestration patterns


Modern enterprises use AI not only for single tasks but as part of automated, end-to-end workflows that drive efficiency and agility. By orchestrating AI tasks (e.g., LLM calls, data retrieval, API actions) in a pipeline, organizations can streamline processes, reduce human error, and accelerate task completion. In practice, this means defining triggers (such as an API call, document upload, or user query) and then automating decision points, branching, and integrations so that routine decisions and data processing occur with minimal human intervention. For example, AI workflows can automate customer onboarding by generating emails, updating CRMs, and flagging exceptions without manual effort. Industry analyses note that such automation increases productivity and accuracy while freeing staff for higher-value work. Generative AI takes this further: large language models (LLMs) can inject intelligence into workflows (e.g., summarizing reports, answering queries, translating content), but they require careful orchestration to be reliable and scalable. A structured AI workflow, with prompts, agents, and integrated tools, provides the necessary framework, enabling enterprises to deploy GenAI solutions across domains like HR, finance, sales, or legal with built-in guardrails and visibility.

This article examines how enterprises can operationalize this intelligence through structured AI orchestration. It explores the difference between autonomous agents and rule-based workflows, outlining where each fits in modern AI systems. You will also learn about common workflow patterns in ZBrain Builder, its architectural foundations, and best practices for designing scalable, reliable AI-powered processes.

Agents vs. workflows in AI orchestration

AI systems can act either as standalone agents or as parts of broader workflows. An AI agent is an autonomous software entity that perceives inputs, reasons (often via an LLM chain-of-thought), and takes actions – for example, an agent that writes code or manages calendar events. Agents encapsulate specific capabilities (booking flights, data analysis, etc.) and typically handle self-contained tasks; a single agent works well for bounded problems, while multiple agents may form teams for bigger challenges.

In contrast, an AI workflow is a coordinated process where multiple tasks, tools, or agents are orchestrated according to a predetermined or dynamic control flow. For instance, an agentic workflow might involve a sequence of LLM calls, database queries, API calls, and human review steps, branching or looping based on intermediate results. In essence, an agent acts with its own internal logic, whereas a workflow externalizes decision nodes and integrates components under a single execution plan.

| Dimension | Workflows | Agents |
|---|---|---|
| Architecture & traceability | Explicitly encode each stage to ensure it is modular and traceable. Easier to monitor, audit, and debug with clear checkpoints. | Harder to enforce checkpoints or fallbacks without orchestration. |
| Integration vs. autonomy | Invoke specific tools/services at each node. Engineers have fine-grained, explicit control (e.g., database/API calls). | Dynamically decide which tools to call within their reasoning. Tool use is implicit and less predictable. |
| Control vs. emergence | Defined structure supports built-in control: timeouts, retries, and human-in-loop steps. Predictable and governable. | Emergent behavior: LLM decides the sequence of actions. Flexible but less predictable for enterprise needs. |

Pre-defined workflows vs. autonomous agents

A related distinction is between pre-scripted (static) workflows and autonomous (dynamic) agents. Pre-defined workflows have a fixed high-level outline of steps. For example, a document processing workflow might always do “extract → classify → notify” in sequence. Autonomous agents, in contrast, determine next steps on the fly: they might decompose tasks, retry strategies, or call tools based on their reasoning. In practice, a workflow is defined in advance (though it can branch) with decision points, whereas an autonomous agent is freer to roam through its capability set. For instance, a fixed workflow could route an email through specific handlers, while an autonomous agent might decide on additional actions (like querying a knowledge base or asking clarifying questions) spontaneously. In ZBrain Builder specifically, this difference is balanced by offering both: Flows (pre-defined orchestration) and autonomous agents, specifically Agent Crew (more open-ended reasoning).
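The contrast can be sketched in a few lines of Python. This is a minimal illustration, not ZBrain Builder code: the step functions, `run_workflow`, `run_agent`, and `toy_policy` are all hypothetical names, and `toy_policy` stands in for the reasoning an LLM-based agent would perform.

```python
from typing import Callable, Optional

State = dict
Step = Callable[[State], State]


def extract(state: State) -> State:
    # Stand-in extraction step: treat the first line as the document title.
    return {**state, "title": state["text"].splitlines()[0]}


def classify(state: State) -> State:
    # Stand-in classification step based on a keyword.
    label = "invoice" if "invoice" in state["text"].lower() else "other"
    return {**state, "label": label}


def notify(state: State) -> State:
    # Stand-in notification step: record the message that would be sent.
    return {**state, "notified": f"{state['label']}: {state['title']}"}


def run_workflow(state: State, steps: list[Step]) -> State:
    """Pre-defined workflow: the step order is fixed before execution."""
    for step in steps:
        state = step(state)
    return state


def run_agent(state: State, choose_next: Callable[[State], Optional[Step]],
              max_steps: int = 10) -> State:
    """Agent-style loop: a policy picks the next step from the current state."""
    for _ in range(max_steps):
        step = choose_next(state)
        if step is None:
            break
        state = step(state)
    return state


def toy_policy(state: State) -> Optional[Step]:
    # Hypothetical stand-in for LLM reasoning: only notify for invoices.
    if "title" not in state:
        return extract
    if "label" not in state:
        return classify
    if state["label"] == "invoice" and "notified" not in state:
        return notify
    return None


fixed = run_workflow({"text": "Invoice 42\nAmount due: 10"}, [extract, classify, notify])
adaptive = run_agent({"text": "Hello\nJust checking in"}, toy_policy)
```

The workflow always runs all three steps, while the agent loop skips the notification when its policy decides it is not needed. The `max_steps` cap is one simple way to keep the open-ended loop bounded.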

When to use which

The decision between workflows and agents depends on the task complexity, governance needs, and adaptability requirements. Agents excel in dynamic, creative, or unpredictable environments, while workflows are better suited for multi-step, high-reliability processes that require strict oversight. Often, a hybrid approach works best: flows manage structure and integrations, while agents provide flexible reasoning where needed.

| Dimension | Workflows | Agents |
|---|---|---|
| Task complexity | Multi-stage or multi-agent pipelines, involving multiple systems. | Single-step or highly flexible tasks that are self-contained. |
| Governance needs | Strong auditing, validation, and SLAs with explicit checkpoints. | Less predictable; harder to audit or enforce strict guardrails. |
| Adaptability | Best when major steps are foreseeable and can be pre-planned. | Ideal when tasks can’t be fully pre-modeled or require creativity. |

Streamline your operational workflows with ZBrain AI agents designed to address enterprise challenges.

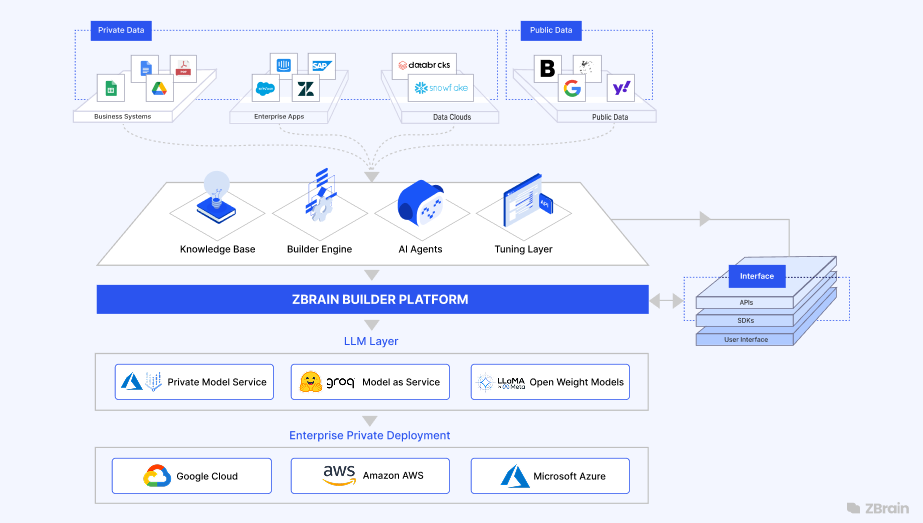

ZBrain’s unified platform

ZBrain Builder is an enterprise-grade, agentic AI orchestration platform that unifies flows, agents, data, and tools. It provides a low-code Flow interface, along with a full agent layer, knowledge management, and monitoring, all on one platform. Architecturally, ZBrain Builder is modular, ingesting enterprise data into a knowledge base, handling LLM orchestration, and running workflows or multi-agent teams on top. Key capabilities include:

- Low-code Flow interface: An intuitive canvas with pre-built connectors and components (APIs, databases, LLMs, etc.). Users can visually design complex AI pipelines without writing code, quickly linking triggers, logic, prompts and integrations. The Flow feature comes with conditional routers, loops, webhooks and more.

- AI agents & agent crews: ZBrain Builder lets you define custom agents (LLM-based handlers) that can run standalone or in coordinated Agent Crews. A supervisor agent can dynamically delegate to specialized agents within the same platform. (For example, one agent might parse requests while others perform specialized actions; see Pattern 4 below.) ZBrain Builder includes a pre-built agent library (“Agent Store”) and multi-agent orchestration support.

- Knowledge base integration: ZBrain Builder supports both vector stores and graph indexes as knowledge bases (KB) to store enterprise data. The knowledge base is storage agnostic. All agents and Flows can query this KB for context (via retrieval-augmented generation), ensuring reasoning is grounded in the latest enterprise information.

- Model-agnostic LLM layer: ZBrain Builder is LLM-agnostic, integrating with both cloud APIs (OpenAI, Anthropic, Google Vertex, etc.) and private on-prem LLMs.

- Governance & monitoring: Built-in security (roles/permissions, SSO) and governance (guardrails, audit logs, versioning Flows) are standard. ZBrain Builder offers dashboards and logs for end-to-end traceability, allowing every Flow run, app, and agent action to be monitored with metrics (token usage, latencies, and outcomes) for troubleshooting and optimization over time.

In summary, ZBrain Builder’s unified platform enables CXOs and tech leads to build and manage AI workflows and agents side by side. Teams can leverage its modular building blocks (data connectors, processing pipelines, LLM orchestration, and UI integration) to build tailored AI apps and agents that interoperate with existing systems. This all-inclusive approach avoids fragmentation: users have one place to create, test, deploy, and govern Flows, agents, and apps, which reduces complexity and streamlines development and governance across the enterprise.

Workflow patterns used in ZBrain Builder

Prompt chaining

- General concept: Prompt chaining decomposes a complex task into a sequence of simpler LLM calls. Each step in the chain performs a subtask: the output of one prompt becomes the input to the next. This linear pipeline structure makes each LLM call easier and more reliable. You can insert logic checks or gating between steps to verify progress. Prompt chaining is particularly useful for tasks that would be too large or complicated for an LLM to handle efficiently with a single prompt. For example, a long document question-answering task might first extract relevant quotes, then feed those quotes into a second prompt to generate a summary answer. This stepwise breakdown also improves transparency and debuggability: you can inspect the intermediate results of each prompt, isolate problems, and optimize prompts stage-by-stage.

- When to use: Use prompt chaining when the goal can be clearly split into ordered subtasks. It’s ideal if each subtask builds on the last. For instance, creating a marketing email could be chained as “draft bullet points → refine language → translate to another language”, as one example. The main trade-off is latency (multiple LLM calls) for better accuracy and control. If your use case can be cleanly modeled as step A then step B then step C, prompt chaining is a good fit. It also works well when you want to incorporate intermediate validation, such as generating an outline, checking it against criteria, and then generating final content.

- ZBrain implementation: ZBrain’s low-code Flow interface natively supports prompt chaining. In a Flow, you simply add multiple LLM (prompt) nodes in series: the output of each node is automatically passed as the input to the next. This is done with Flow components – just connect the components in sequence, and ZBrain handles data passing. You can intersperse conditional checks or error handling between nodes if needed. For example, after one prompt, you can insert a decision node that verifies the output before proceeding. Each prompt node can utilize any integrated LLM (e.g., GPT-5, Claude), allowing you to chain different models if desired. Because the Flow is visual, you can easily see the chain and inspect each step’s output in ZBrain’s execution logs.

- Integration with tools: In ZBrain Flows, a chained prompt can interact with external data or tools between steps. For instance, one prompt’s output could query a knowledge base (RAG) or trigger a database lookup before feeding into the next prompt. You could build a three-step chain where the first LLM call generates a database query string, a second node runs that query, and a third node prompts an LLM to summarize the results.

- Benefit: The benefit of prompt chaining is modularity and control. It breaks down complexity, making each prompt more reliable and transparent. Users can iterate on each stage independently. In enterprise use, this translates to faster debugging and compliance: you know exactly which sub-step failed or produced questionable output. It also often improves overall answer quality by guiding the LLM through a well-defined process. In summary, prompt chaining boosts the transparency of your LLM application, increases controllability and reliability, enabling teams to tune and monitor each step of the AI workflow.
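The chaining idea described above can be sketched in plain Python. This is a generic illustration under stated assumptions rather than ZBrain Builder's Flow API: `run_chain`, the `Gate` callback, and `echo_llm` are hypothetical names, and `echo_llm` replaces a real model call so the example runs offline.

```python
from typing import Callable, Optional

LLM = Callable[[str], str]          # any function mapping a prompt to a completion
Gate = Callable[[int, str], bool]   # (step index, step output) -> continue?


def run_chain(llm: LLM, templates: list[str], user_input: str,
              gate: Optional[Gate] = None) -> list[str]:
    """Run prompts in order, feeding each output into the next template."""
    outputs: list[str] = []
    current = user_input
    for index, template in enumerate(templates):
        current = llm(template.format(input=current))
        # Optional gating between steps: stop the chain on a failed check.
        if gate is not None and not gate(index, current):
            raise ValueError(f"step {index} failed its gate check")
        outputs.append(current)  # keep intermediates for inspection
    return outputs


def echo_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call: wraps the prompt in brackets.
    return f"[{prompt}]"


steps = run_chain(echo_llm, ["outline: {input}", "draft: {input}"], "Q3 report")
```

Returning every intermediate output, not just the last one, is what makes the stage-by-stage inspection described above possible.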

Routing

- General concept: Routing involves classifying an input and directing it to a specialized path. Think of it like a dispatcher: first, an LLM (or classification model) examines the request to determine its category, then it routes the request based on conditional logic. This allows you to build more specialized, fine-tuned sub-workflows for each type of request. For example, a customer support workflow might first route queries into “billing,” “technical,” or “general” buckets and then invoke different handling prompts. Or another router might send “easy/common” tasks to a lightweight model and “hard/unusual” tasks to a more powerful model. The main benefit is the separation of concerns: each branch can be optimized without affecting the others.

- When to use: Use routing when your overall task spans distinct categories or domains that merit different handling. If inputs vary widely (different intents, topics, or required tools), routing helps ensure each case gets the most appropriate handling. For example, a multi-domain assistant (e.g., finance, HR, IT) would use routing so that finance-related questions are directed to the finance-trained prompt, and so on. In general, routing is ideal when classification can be done accurately (via a quick LLM prompt or a traditional classifier), and the downstream categories are well-defined.

- ZBrain implementation: ZBrain’s Flow supports routing through the Router or conditional nodes. You can add a “Router” component, which evaluates a series of if/then conditions (e.g., based on keywords or previous LLM output) and splits the flow into branches. By default, it provides “Branch 1” and “Otherwise” paths, and you can configure any number of branches with custom criteria. For intelligent routing, you could have an initial prompt node that classifies the input (e.g., using an LLM with a system prompt to assign a category). Then the Flow moves into different sub-flows based on the result. Each branch can contain further AI tasks or API calls specific to that category. Because ZBrain Flows allow arbitrary branching and loops, you can also build hierarchical routers: e.g., primary categorization followed by more granular routing. The end result is a tree of specialized paths. For example, one could implement an internal “Intent Classifier” prompt in a Flow and then use its output to conditionally route the data to one of several assistant prompts (customer support agents or analysis tools).

- Integration with tools: In the routing pattern, each branch can leverage different integrations. One branch might call an external CRM API, another might query a knowledge base or trigger a microservice. Because routing in ZBrain is just flow logic, you can mix prompt nodes with any built-in component (databases, search, vector lookup, etc.) per branch.

- Benefit: The main benefit of routing is specialization and robustness. By isolating different inputs into their own branches, you avoid the “one-size-fits-all” problem. Each sub-workflow can be tuned and scaled independently. This often improves accuracy (since the right prompt/model is used) and efficiency (lighter cases hit faster paths). It also makes the system more maintainable: new categories can be added as new branches without changing others. In summary, routing works well for complex tasks where distinct categories are better handled separately, enabling optimized expert flows for each category.
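A classify-then-dispatch router, including a fallback branch comparable to the "Otherwise" path mentioned above, might look like the following sketch. It is not the ZBrain Router component: `keyword_classifier` is a hypothetical stand-in for an LLM intent classifier, and the branch handlers are placeholder functions.

```python
from typing import Callable

Handler = Callable[[str], str]


def keyword_classifier(query: str) -> str:
    # Hypothetical stand-in for an LLM intent classifier.
    text = query.lower()
    if "invoice" in text or "refund" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "unknown"


def route(query: str, classify: Callable[[str], str],
          branches: dict[str, Handler], otherwise: Handler) -> tuple[str, str]:
    """Classify the input, then run the matching branch or the fallback."""
    label = classify(query)
    handler = branches.get(label, otherwise)
    return label, handler(query)


BRANCHES: dict[str, Handler] = {
    "billing": lambda q: f"billing flow handles: {q}",
    "technical": lambda q: f"technical flow handles: {q}",
}


def general(query: str) -> str:
    # Fallback branch for anything the classifier cannot place.
    return f"general flow handles: {query}"


label, reply = route("I need a refund", keyword_classifier, BRANCHES, general)
```

Because branches live in a dictionary, adding a category means adding one entry and one handler, which mirrors the maintainability point above.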

Parallelization

- General concept: Parallelization (also called fan-out/fan-in or ensembling) runs multiple AI tasks simultaneously on the same input or subtasks, then combines their results. There are two key styles: sectioning, where a big task is split into independent pieces run in parallel (e.g., different sections of a report); and voting/ensembling, where the same task is sent to multiple LLM instances (possibly with varied prompts or models) and the outputs are aggregated (e.g. by majority vote or confidence). For example, you might fan-out a single query to 3 different prompts (or models) and then choose the most confident answer among them. Or for long content, you might consider parallelizing by chunking the content into parts and processing each part simultaneously. The advantage is increased throughput and robustness: parallel tasks complete faster, and multiple perspectives can improve quality.

- When to use: Use parallelization when tasks can be split or where multiple opinions add value. If subtasks are truly independent (like processing distinct pages of a document), sectioning accelerates processing. If you need to improve confidence or cover different angles, use voting. For example, code review often benefits from running several different code-audit prompts in parallel and flagging any issue that any one of them catches. It’s also useful for complex tasks where one run might miss something; multiple parallel attempts reduce risk. The main consideration is cost and latency: running N models in parallel costs more tokens and resources, so it’s best for high-value tasks or where speed and coverage justify it.

- ZBrain implementation: ZBrain’s Flow can easily orchestrate parallel branches. In the Builder, you can design a Flow where a single trigger leads to multiple parallel nodes (branch the Flow without waiting for one to finish). For example, from one input, you could fork into two LLM nodes with the same or different prompts, each executing concurrently. Because ZBrain Flows allow asynchronous branches, you might have one branch process the core task while another branch simultaneously runs a compliance check or data validation on the same input. Agent crews can also execute tasks in parallel: a supervisor can dispatch multiple workers simultaneously and then gather their answers.

- Integration with tools: In parallel Flows, each branch can also use different tools. For example, one branch might fetch customer data from a database while another calls a semantic search on the knowledge base, and a third runs an LLM prompt. All can run concurrently, then their outputs can feed into a final consolidation prompt. This is simply a matter of connecting different component nodes in parallel. The ZBrain Agent Crew can execute multiple agents concurrently, enabling parallel task processing. During execution, it can invoke external tools via MCP and seamlessly integrate with internal enterprise systems for enhanced workflow automation. ZBrain’s capacity to spawn multiple parallel tasks (whether Flows or agent calls) means you can leverage all your data sources and LLMs at once.

- Benefit: Parallelization boosts throughput and resilience. It lets you cover more ground by exploring multiple hypotheses or data slices simultaneously. If one path fails or returns low confidence, others can succeed. This pattern can notably improve quality: for instance, multiple independent reviews of content can catch more errors. It also reduces latency: independent subtasks complete in the time of the slowest branch rather than the sum. The trade-offs (higher cost, increased complexity in merging) can be managed in ZBrain Builder through metrics and branch control (like loops). Overall, parallel Flows make AI workflows more robust and performant for high-stakes or high-volume tasks.
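Both styles, sectioning and voting, can be sketched with Python's standard `concurrent.futures` module. The helper names (`fan_out`, `section`, `vote`) and the lambda "models" are assumptions for illustration; in a real system each callable would wrap an LLM or tool call, and this is not how ZBrain Flows are defined.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fan_out(tasks: list[Callable[[str], str]], query: str) -> list[str]:
    """Voting style: send the same input to several tasks concurrently."""
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(task, query) for task in tasks]
        return [future.result() for future in futures]  # results in task order


def section(worker: Callable[[str], str], chunks: list[str]) -> list[str]:
    """Sectioning style: process independent chunks concurrently."""
    with ThreadPoolExecutor(max_workers=max(1, len(chunks))) as pool:
        return list(pool.map(worker, chunks))  # map preserves input order


def vote(answers: list[str]) -> str:
    """Fan-in: pick the most common answer."""
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-ins for three differently prompted model calls.
reviewers = [lambda q: "approve", lambda q: "reject", lambda q: "approve"]
decision = vote(fan_out(reviewers, "Is this contract clause compliant?"))
pages = section(str.upper, ["page one", "page two"])
```

Threads suit this sketch because model and API calls are I/O-bound. Majority voting is only one possible fan-in strategy; a consolidation prompt over all outputs is another.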

Orchestrator-worker

- General concept: The orchestrator-worker pattern (also known as supervisor-worker or planner-worker) features a central orchestrator agent (LLM) that dynamically decomposes a complex goal into subtasks and dispatches them to specialized subordinate agents. The orchestrator essentially acts as a planner: it receives the high-level objective, reasons about which steps are needed (and in what order), assigns sub-goals to subordinates, and finally integrates the results. Subordinate (child) agents are task-focused LLM agents or Flows, each expert in a specific function. This pattern is used when subtasks cannot all be known in advance and must be discovered at runtime. It offers maximum flexibility: the orchestrator can create, re-order, or repeat tasks as needed based on the evolving context. This is akin to how a project manager (or lead agent) might oversee multiple team members.

- When to use: Use orchestrator-worker for very complex, multi-step tasks where you can’t prespecify all steps. Examples include multi-file coding problems, large research analysis, or strategic planning, where the scope of work is unclear upfront. It’s ideal when adaptability is required – e.g., a procurement process where subsequent tasks depend on intermediate results. The key sign is that the sequence of operations is not static but must be discovered by an AI in context. This pattern is more flexible than parallelization because the orchestrator decides subtask granularity on the fly. The trade-off is complexity in coordination: you need careful design to avoid infinite loops or inefficiency.

- ZBrain implementation (Agent Crew): ZBrain’s Agent Crew feature is tailor-made for orchestrator-worker workflows. You define a supervisor agent and multiple child agents within the same crew. The supervisor’s prompt logic decides how to delegate. For example, using ZBrain’s crew designer (Define Crew Structure), you might set up a supervisor agent that parses the input and assigns subtasks to child agents specialized in search, summarization, code writing, etc. ZBrain Builder’s low-code interface lets you visually map these roles and the data flow between them. Critically, ZBrain Builder supports parallel or sequential execution based on task dependencies: a supervisor can dispatch child agents concurrently or wait for one to finish before triggering the next, all within one orchestrated flow. The platform also supports LangGraph or other orchestration frameworks, meaning you can enable stateful reasoning and memory across the crew. Each child agent can access the knowledge base or external tools as part of its role. Once all child agents report back, the orchestrator (supervisor) collects their outputs and produces the final result. Importantly, the entire crew is governed by a single workflow, ensuring end-to-end traceability.

- LangGraph and stateful orchestration: ZBrain Builder allows you to choose an orchestration framework (e.g., LangGraph, Google ADK, or Semantic Kernel) when building a crew. LangGraph, for example, enables agents to have longer chains of memory or contextual linking between steps. This means that state (like intermediate facts or user context) can be passed through the team. In practice, this ensures that an agent crew can maintain continuity: the supervisor can recall what each child agent did and use that information in further planning, while each child agent can recall prior tasks if needed. This stateful orchestration is key for complex workflows.

- Tool integrations for workers: Each worker agent in the crew can use its own tools and data connections. For instance, one child agent might be equipped with an API toolkit to fetch data, while another might have a built-in knowledge graph. The crew configuration allows you to assign which tools or a Flow each agent can call. For example, in a legal review crew, one agent could have a PDF parser and legal database access, while another might query case law via a search connector. ZBrain’s multi-agent design means these tools integrate seamlessly: calls to external systems from any agent are made through the platform’s secure MCP connectors.

- Monitoring and traceability: Orchestrator-worker workflows are complex, but ZBrain Builder provides strong observability. The platform logs each agent’s actions and intermediate data. You get an Agent Dashboard to watch the crew’s input queue, outputs of each agent, and the supervisor’s decisions. This means that at any point, you can see which subtasks were created, which agents handled them, and where any errors occurred. A performance dashboard displays execution metrics, token counts, and satisfaction scores if you have set up feedback. ZBrain also lets you enable human-in-the-loop checkpoints in a crew flow, so an expert can review or override results before the crew proceeds. All this ensures the orchestrator-worker pattern stays transparent and governable.
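The supervisor loop described in this section can be sketched as follows. This is a simplified, framework-free illustration and not the Agent Crew API: `run_crew`, `toy_planner`, and the `WORKERS` table are hypothetical, and `toy_planner` stands in for the supervisor LLM that would decide the next subtasks from the history so far.

```python
from typing import Callable

Worker = Callable[[str], str]
Step = tuple[str, str, str]   # (worker name, subtask, result)
Planner = Callable[[str, list[Step]], list[tuple[str, str]]]


def run_crew(goal: str, planner: Planner, workers: dict[str, Worker],
             max_rounds: int = 5) -> list[Step]:
    """Supervisor loop: plan subtasks at runtime, dispatch them, record results."""
    history: list[Step] = []
    for _ in range(max_rounds):          # hard cap guards against endless planning
        plan = planner(goal, history)
        if not plan:                     # an empty plan means the goal is met
            break
        for name, subtask in plan:
            history.append((name, subtask, workers[name](subtask)))
    return history


def toy_planner(goal: str, history: list[Step]) -> list[tuple[str, str]]:
    # Hypothetical stand-in for the supervisor LLM: search first, then summarize.
    if not history:
        return [("search", goal)]
    last_worker, _, last_result = history[-1]
    if last_worker == "search":
        return [("summarize", last_result)]
    return []


WORKERS: dict[str, Worker] = {
    "search": lambda task: f"3 documents about {task}",
    "summarize": lambda text: f"summary of {text}",
}

trace = run_crew("vendor risk", toy_planner, WORKERS)
```

The returned `trace` doubles as a record of which worker handled which subtask, and the `max_rounds` cap is one simple defense against the infinite-loop risk noted above.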

Evaluator-optimizer

- General concept: The evaluator-optimizer pattern sets up a feedback loop between two agents (or prompts). One agent (the optimizer or producer) generates a solution; another agent (the evaluator or critic) assesses that solution against criteria and provides feedback or a score. The optimizer can then refine its output based on the critique, iteratively improving the result. This mimics how a human might write a draft and then self-review or get peer review to polish it. Anthropic describes it as “one LLM call generates a response while another provides evaluation and feedback in a loop.” The loop continues until the evaluator is satisfied or improvement reaches a threshold.

- When to use: Use evaluator-optimizer when you have clear objectives for quality, and iterative improvement is valuable. This is effective if (a) human feedback would clearly improve an answer, and (b) the LLM is capable of mimicking that feedback. Good examples include tasks like nuanced translations, creative writing edits, or any output that benefits from refinement. For instance, in literary translation, the initial renderings might miss subtleties; an evaluator agent could spot those nuances and ask the optimizer to tweak the translation. It’s also useful in complex searches: you generate findings, then an evaluator decides if more searching or analysis is needed. The pattern is akin to reinforcement learning or RLHF in spirit (but done with LLM loops instead of gradient updates). One caution is that it adds latency (multiple calls per iteration) and cost, so it’s best suited for high-importance outputs where precision is crucial.

- ZBrain implementation: In ZBrain Builder, an evaluator-optimizer can be built by combining Flows and agents. You might implement this as a Flow loop: a prompt node generates an output, then another prompt node critiques it. The original prompt node can then be repeated with revised instructions (including the critique). Alternatively, use an “optimizer agent” and an “evaluator agent” in a crew: the supervisor could run the optimizer agent to draft an output, then route that output to the evaluator agent; if the score is low, the supervisor tells the optimizer to try again with an adjusted prompt. ZBrain’s Flow logic supports loops and conditional repeats, so this cycle can be automated with a termination condition (for example, until the evaluator gives a high score or a max number of turns). In practice, you might configure a flow where each draft is logged, and the evaluator’s feedback is captured in the logs.
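The generate-critique-retry cycle, with both termination conditions mentioned above (a score threshold and a maximum number of turns), can be sketched like this. The names `refine`, `toy_generate`, and `toy_evaluate` are illustrative assumptions; the two toy functions stand in for the optimizer and evaluator LLM calls.

```python
from typing import Callable

Generate = Callable[[str, str], str]             # (task, feedback) -> draft
Evaluate = Callable[[str], tuple[float, str]]    # draft -> (score, feedback)


def refine(generate: Generate, evaluate: Evaluate, task: str,
           threshold: float = 0.8, max_turns: int = 3) -> list[tuple[str, float]]:
    """Loop until the evaluator's score reaches the threshold or turns run out."""
    drafts: list[tuple[str, float]] = []
    feedback = ""
    for _ in range(max_turns):
        draft = generate(task, feedback)
        score, feedback = evaluate(draft)
        drafts.append((draft, score))     # log every draft for later review
        if score >= threshold:
            break
    return drafts


def toy_generate(task: str, feedback: str) -> str:
    # Hypothetical optimizer: folds the critique into the next draft.
    return task if not feedback else f"{task} [{feedback}]"


def toy_evaluate(draft: str) -> tuple[float, str]:
    # Hypothetical evaluator: only satisfied once sources are cited.
    if "cite sources" in draft:
        return 1.0, ""
    return 0.4, "cite sources"


drafts = refine(toy_generate, toy_evaluate, "Summarize Q3 results")
final_draft, final_score = drafts[-1]
```

Keeping every draft and score, rather than only the final one, matches the logging practice described in the last sentence above.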


ZBrain Builder’s architecture and best practices

ZBrain Builder’s architecture is modular and layered. Key modules include data ingestion (connectors and pipelines), a knowledge base (vector DB and knowledge graph), an extended DB, a model-agnostic LLM orchestration layer (covering open-source, open-weight, private, and model-as-a-service options), the workflow/agent layer (Flows and agents), and the interface integration layer (API, SDK, and UI). Each layer can be scaled or swapped independently: for example, you can replace the vector store without reworking the workflow, or switch LLM providers without changing the flow logic. This decoupling is a best practice for enterprise AI: keeping components independent lets you upgrade models, tools, or data stores as needed.
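One common way to get this kind of decoupling in code is to have the workflow depend on a small interface rather than a concrete store or model. The sketch below uses Python's `typing.Protocol`; the `Retriever` interface, both toy stores, and `answer` are hypothetical and do not describe ZBrain Builder's internals.

```python
from typing import Callable, Protocol


class Retriever(Protocol):
    """The only thing the workflow needs from any knowledge store."""
    def search(self, query: str, k: int) -> list[str]: ...


class KeywordStore:
    """Toy retriever: ranks documents by how many query words they contain."""
    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        words = query.lower().split()
        ranked = sorted(self.docs,
                        key=lambda doc: sum(word in doc.lower() for word in words),
                        reverse=True)
        return ranked[:k]


class PinnedStore:
    """Toy retriever: always returns the same curated passages."""
    def __init__(self, passages: list[str]) -> None:
        self.passages = passages

    def search(self, query: str, k: int) -> list[str]:
        return self.passages[:k]


def answer(question: str, retriever: Retriever, llm: Callable[[str], str]) -> str:
    # The workflow logic is unchanged whichever retriever or model is passed in.
    context = " | ".join(retriever.search(question, k=2))
    return llm(f"Context: {context}\nQuestion: {question}")


docs = ["Refunds are issued within 30 days.", "Shipping takes 5 days."]
top_hit = KeywordStore(docs).search("refunds issued", k=1)
```

Swapping `KeywordStore` for `PinnedStore`, or one `llm` callable for another, requires no change to `answer`, which is the property the paragraph above describes.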

Best practices for using ZBrain Builder include:

- Leverage the knowledge base: Ingest enterprise data into ZBrain’s KB so all agents, apps and Flows can retrieve context. Ensure data is well-curated: ZBrain provides ETL and chunking for documents, but you should review indexing strategies (vector vs graph search) to match your use case. Well-tuned knowledge bases improve accuracy because LLM answers are grounded in curated enterprise data.

- Use low-code Flows for version control and repeatability: Always develop new AI logic as a ZBrain Flow (or Crew) rather than ad hoc code. The visual Flow canvas helps document each step. Keep version history and test runs (ZBrain tracks Flow versions and logs) so you can revert or audit changes.

- Incorporate guardrails and checks: Even in automated workflows, add explicit validation steps. For example, after a critical LLM call, use a second prompt or an external service to verify outputs. ZBrain’s conditional and delay components let you implement retries or human checkpoints. Don’t rely on the model to always self-correct; use ZBrain’s orchestration to enforce policies (e.g., if a response fails a policy check, re-route it to a human or loop). Enable guardrails for apps and agents, and reinforce them with guardrail instructions in the prompt.

- Balance Flows vs Agents: Use agents (including Agent Crews) where tasks need autonomy or multi-agent collaboration; use Flows to handle structured logic and integrations. For many enterprise problems, a hybrid approach is effective: for example, a human-initiated workflow might trigger an agent, whose result then flows into downstream processes. ZBrain makes this easy since Flows and agents share the same platform.

- Monitor and iterate: Take advantage of ZBrain’s built-in monitoring feature. Use the dashboard to watch agent behaviors and Flow metrics. When you notice performance issues or edge cases, adjust prompts or flow logic accordingly. ZBrain’s evaluation suite includes a monitor module that lets you benchmark agents continuously. For example, schedule periodic tests of key flows and analyze failures. Continuously refine prompts (e.g., by adjusting guardrails or system messages) based on observed outcomes.

- Governance and security: Apply role-based access to Flows and agent crews so only authorized users can modify or trigger them. Use ZBrain’s guardrails (pre-defined rules) to prevent unsafe outputs. Log all agent decisions for audit. Compliance is a priority: do not skip implementing approval steps in the workflow if required by policy.

- Maintain a human-in-the-loop approach where needed: Even fully automated workflows can benefit from periodic human review. ZBrain Flows can pause for user feedback and feed that back into the agent refinement loop. For critical tasks, insert review stages to allow humans to validate AI suggestions. This ensures errors do not propagate unchecked and aligns with enterprise quality standards.
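The guardrail and human-in-the-loop practices above share a common shape: validate an output, retry a bounded number of times, and escalate to a person if validation keeps failing. The sketch below illustrates that shape in plain Python. `guarded_call`, `flaky_llm`, and the `no_digits` policy check are hypothetical, and the status strings are arbitrary labels rather than ZBrain values.

```python
from typing import Callable


def guarded_call(llm: Callable[[str], str], prompt: str,
                 check: Callable[[str], bool], max_retries: int = 2) -> dict:
    """Call the model, validate the output, retry, then escalate to a human."""
    output = ""
    attempts = 0
    for attempts in range(1, max_retries + 2):   # first try plus the retries
        output = llm(prompt)
        if check(output):
            return {"status": "ok", "output": output, "attempts": attempts}
    # Validation never passed: hand the last output to a reviewer.
    return {"status": "needs_human_review", "output": output, "attempts": attempts}


def no_digits(text: str) -> bool:
    # Hypothetical policy check: block replies that contain any digits.
    return not any(ch.isdigit() for ch in text)


# Hypothetical model that leaks a number on its first attempt only.
replies = iter(["Account 12345 was approved.", "Your request was approved."])


def flaky_llm(prompt: str) -> str:
    return next(replies)


result = guarded_call(flaky_llm, "Reply to the customer", no_digits)
```

The check is deliberately external to the model, in line with the advice above not to rely on the model to self-correct.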

By following these principles and leveraging ZBrain’s comprehensive tooling, organizations can design robust AI workflows that are traceable, adaptable, and continuously improving. The modular architecture lets teams start small (e.g., a single flow or agent) and expand into multi-agent Crews and multi-step pipelines as confidence grows. This balanced approach – combining the creativity of autonomous agents with the stability of orchestrated flows – enables enterprises to scale AI automation from pilot to production while maintaining control and governance.

Endnote

As enterprises scale their AI initiatives, the ability to orchestrate tasks through well-structured workflows becomes just as important as the intelligence of the models themselves. Pre-defined flows provide governance, traceability, and integration, while autonomous agents contribute reasoning and adaptability. ZBrain Builder bridges these two worlds with a unified platform where flows, agents, and orchestration patterns work in harmony.

By supporting prompt chaining, routing, parallelization, orchestrator-worker coordination, and evaluator-optimizer feedback loops, ZBrain Builder equips organizations with the flexibility to model both predictable processes and dynamic problem-solving. The result is a system that not only accelerates automation but also ensures reliability, context-awareness, and continuous improvement.

In an era where AI must be both powerful and trustworthy, ZBrain Builder enables enterprises to design workflows that are intuitive for teams, efficient in execution, and adaptable to evolving business needs—transforming automation into a strategic advantage.

Discover the power of flow-driven and agentic orchestration with ZBrain apps and agents to accelerate automation and decision-making at scale. Contact us for a demo!

Author’s Bio

An early adopter of emerging technologies, Akash leads innovation in AI, driving transformative solutions that enhance business operations. With his entrepreneurial spirit, technical acumen and passion for AI, Akash continues to explore new horizons, empowering businesses with solutions that enable seamless automation, intelligent decision-making, and next-generation digital experiences.

Frequently Asked Questions

What is AI workflow automation, and why is it important for enterprises?

AI workflow automation refers to the process of orchestrating multiple AI tasks—such as LLM prompts, data retrieval, API calls, or approvals—into structured, repeatable workflows. Instead of treating each AI output as an isolated event, workflows chain tasks together with logic, validation, and decision points to create a cohesive process. For enterprises, this reduces manual intervention, minimizes errors, ensures compliance, and accelerates outcomes. It turns AI from an ad-hoc tool into a strategic system that can handle complex, end-to-end business processes.

How do AI agents differ from workflows?

AI agents are autonomous entities that can reason, make decisions, and act dynamically, often using LLMs as their “brains.” They are well-suited for open-ended, creative, or unpredictable tasks. Workflows, on the other hand, explicitly encode the steps, tools, and conditions involved in a process. They provide predictability, traceability, and governance. The two are complementary: agents excel at adaptability, while workflows excel at control. In enterprise contexts, combining them ensures both innovation and reliability.

What orchestration patterns does ZBrain Builder support?

ZBrain Builder provides a library of orchestration patterns that can be combined to model diverse enterprise scenarios:

- Prompt chaining for step-by-step transformations.

- Routing for directing queries to the right sub-flow or model.

- Parallelization for faster, multi-branch execution.

- Orchestrator-worker for planner/worker agent structures.

- Evaluator-optimizer for feedback-driven refinement.

These patterns can be designed visually in the Flow or implemented via multi-agent crews, offering flexibility for both predictable processes and dynamic reasoning.
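As a rough illustration of the first two patterns, the sketch below composes prompt chaining and routing as plain Python functions. It is not ZBrain Builder's API: the `chain` and `route` helpers, the `classify` function, and the stand-in steps are all hypothetical, and each step would be an LLM call or Flow node in a real system.

```python
from typing import Callable, Dict, List

Step = Callable[[str], str]


def chain(steps: List[Step]) -> Step:
    """Prompt chaining: feed each step's output into the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def route(classify: Callable[[str], str], branches: Dict[str, Step], default: Step) -> Step:
    """Routing: pick a sub-flow based on the label returned by a classifier."""
    def run(text: str) -> str:
        return branches.get(classify(text), default)(text)
    return run


# Stand-ins for model calls (hypothetical; a real flow would call an LLM here).
def summarize(text: str) -> str:
    return text.split(".")[0].strip() + "."


def to_upper(text: str) -> str:
    return text.upper()


def classify(text: str) -> str:
    return "billing" if "invoice" in text.lower() else "general"


billing_flow = chain([summarize, to_upper])
general_flow = chain([summarize])
flow = route(classify, {"billing": billing_flow}, default=general_flow)

print(flow("Invoice 42 is overdue. Please advise."))
print(flow("How do I reset my password? Thanks."))
```

Keeping each pattern as a small composable function mirrors the idea that patterns can be combined: a routed branch is itself a chain, and either could be swapped for a parallel or evaluator-optimizer step.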

How does ZBrain ensure governance and compliance in AI workflows?

Governance is built into ZBrain at multiple layers. Workflows explicitly encode decision points, so every step is transparent and auditable. Role-based access controls, Flow versioning, and detailed run logs support compliance with enterprise standards and regulations. Guardrails and validation nodes can be embedded directly into flows as part of the prompt, preventing unsafe outputs from propagating downstream. Additionally, Human-in-the-Loop (HITL) controls allow teams to pause, review, and approve results at critical points, combining automation with oversight.

What role does the knowledge base play in ZBrain workflows?

The knowledge base is central to grounding LLM outputs in enterprise data. ZBrain supports both vector stores for unstructured information (documents, PDFs) and knowledge graphs for structured relationships. During workflows, agents or prompts can query the knowledge base to ensure responses are factually aligned with organizational context. This reduces hallucinations and enhances precision.
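To make the grounding step concrete, here is a minimal retrieval sketch. It assumes a toy word-count "embedding" and cosine similarity in place of a real embedding model and vector store; `embed`, `retrieve`, and `build_prompt` are illustrative names, not ZBrain functions.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Place the retrieved context ahead of the question so the model answers from it."""
    context = "\n".join(retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Employees accrue 20 days of paid leave per year.",
    "Expense reports are due by the fifth of each month.",
]
print(build_prompt("How many days of paid leave do employees get?", docs))
```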

How does ZBrain Builder handle multi-agent orchestration?

ZBrain’s Agent Crew feature enables orchestrator-worker setups where a supervisor agent plans tasks and delegates them to specialized worker agents. Each agent can have its own model configuration, tools, and MCP (Model Context Protocol) integrations. Crews can run sequentially or in parallel depending on dependencies. Import/export capabilities let crews be shared or migrated across environments. This design makes it easy to scale from single-agent use cases to complex multi-agent systems.
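The supervisor-and-workers idea can be sketched in a few lines. The example below is an assumption-laden illustration, not the Agent Crew implementation: `plan` returns a fixed task list where a real supervisor would use an LLM, and the two workers are hypothetical stand-ins for specialized agents.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Worker = Callable[[str], str]


def research_worker(task: str) -> str:
    return f"research notes for '{task}'"


def drafting_worker(task: str) -> str:
    return f"draft for '{task}'"


# Registry of specialized workers (hypothetical names).
WORKERS: Dict[str, Worker] = {"research": research_worker, "draft": drafting_worker}


def plan(goal: str) -> List[Tuple[str, str]]:
    """Supervisor step: split a goal into (worker_name, subtask) pairs."""
    return [("research", f"background on {goal}"), ("draft", f"summary of {goal}")]


def run_crew(goal: str, parallel: bool = True) -> List[str]:
    """Delegate each subtask to its worker, in parallel or one after another."""
    assignments = plan(goal)
    if parallel:
        with ThreadPoolExecutor() as pool:
            futures = [pool.submit(WORKERS[name], task) for name, task in assignments]
            # Collect results in submission order so output is deterministic.
            return [f.result() for f in futures]
    return [WORKERS[name](task) for name, task in assignments]


print(run_crew("Q3 churn"))
```

Parallel execution only suits subtasks that do not depend on each other; when the draft needs the research output, the sequential path (`parallel=False`) with results passed forward is the safer choice.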

What are the business benefits of Human-in-the-Loop (HITL) in workflows?

HITL controls let enterprises introduce checkpoints where AI outputs must be validated before continuing. This balances automation with trust. For example, in a legal contract review workflow, an agent might extract clauses and propose risk ratings. HITL enables a user to review and adjust results before they are propagated to reporting systems. This reduces risk, enforces compliance, and builds user confidence in AI-driven processes. HITL is especially valuable in regulated industries such as finance, healthcare, and legal.
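The contract review example can be modeled as a checkpoint that blocks unapproved results. This is a minimal sketch under stated assumptions: `propose_ratings` uses a keyword rule in place of an agent, and `sample_reviewer` is a hypothetical function standing in for a person's decision in a review UI.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ClauseRating:
    clause: str
    risk: str  # "low", "medium", or "high"
    approved: bool = False


Reviewer = Callable[[ClauseRating], ClauseRating]


def propose_ratings(clauses: List[str]) -> List[ClauseRating]:
    """Stand-in for the agent step: flag indemnification clauses as high risk."""
    return [ClauseRating(c, "high" if "indemnif" in c.lower() else "low") for c in clauses]


def review_checkpoint(ratings: List[ClauseRating], reviewer: Reviewer) -> List[ClauseRating]:
    """Pause point: every proposed rating passes through the reviewer."""
    return [reviewer(r) for r in ratings]


def publish(ratings: List[ClauseRating]) -> List[str]:
    """Downstream step: forward only ratings that a reviewer approved."""
    return [f"{r.risk}: {r.clause}" for r in ratings if r.approved]


def sample_reviewer(rating: ClauseRating) -> ClauseRating:
    """Hypothetical human decision: soften 'high' to 'medium', approve everything."""
    risk = "medium" if rating.risk == "high" else rating.risk
    return ClauseRating(rating.clause, risk, approved=True)


clauses = [
    "Supplier shall indemnify Buyer for all losses.",
    "Invoices are payable within 30 days.",
]
proposed = propose_ratings(clauses)
print(publish(proposed))  # nothing is published before review
print(publish(review_checkpoint(proposed, sample_reviewer)))
```

Making approval a field that the downstream step checks, rather than a convention, means a skipped review results in nothing being published instead of unreviewed output leaking through.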

How does ZBrain improve search and retrieval accuracy in workflows?

ZBrain enables document-level chunking, which splits content contextually (e.g., by section or table row) rather than at arbitrary boundaries. It also supports OCR for scanned files and structured parsing for tabular data. When paired with vector or graph retrieval, this helps agents and workflows access the most relevant and accurate content. For enterprises, this means less information loss, richer context grounding, and more precise answers from AI systems.
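The difference between contextual and arbitrary splitting is easy to see side by side. The sketch below assumes sections are marked by lines starting with `# ` (a markdown-style convention chosen for illustration); it does not reproduce ZBrain's chunking logic.

```python
from typing import List


def chunk_fixed(text: str, size: int) -> List[str]:
    """Arbitrary chunking: cut every `size` characters, ignoring structure."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def chunk_by_section(text: str) -> List[str]:
    """Contextual chunking: start a new chunk at each heading line ('# ...')."""
    chunks: List[str] = []
    current: List[str] = []
    for line in text.splitlines():
        if line.startswith("# ") and current:
            chunks.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [c for c in chunks if c]


doc = (
    "# Leave policy\n"
    "Employees accrue 20 days per year.\n"
    "# Expenses\n"
    "Reports are due by the fifth."
)

for chunk in chunk_by_section(doc):
    print(repr(chunk))
for chunk in chunk_fixed(doc, 40):
    print(repr(chunk))
```

With section-based splitting each chunk keeps its heading and body together, whereas the fixed-size cut can separate a heading from the sentence it introduces, which is the information loss the answer above refers to.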

How do these workflow capabilities translate into business value?

By combining structured workflows with autonomous agents, ZBrain Builder enables enterprises to:

- Increase efficiency by automating repetitive tasks with precision.

- Reduce risk through governance, guardrails, and HITL validation.

- Improve decision-making with context-aware, reliable outputs.

- Scale AI adoption across departments with reusable Flows and agent crews.

Ultimately, these capabilities turn AI from experimental pilots into enterprise-ready solutions that align with ROI, compliance, and scalability goals.

How do we get started with ZBrain for AI development?

To begin your AI journey with ZBrain:

- Contact us at hello@zbrain.ai

- Or fill out the inquiry form on zbrain.ai

Our dedicated team will work with you to evaluate your current AI development environment, identify key opportunities for AI integration, and design a customized pilot plan tailored to your organization’s goals.
