A comprehensive guide to AgentOps: Scope, core practices, key challenges, trends, and ZBrain implementation


Enterprises are entering the era of agentic AI, where intelligent systems no longer just automate tasks — they reason, plan and act autonomously. Powered by large language models (LLMs), AI agents can analyze context, make decisions, invoke tools and collaborate with other agents or humans to achieve outcomes once reserved for manual workflows. This evolution is redefining enterprise productivity, marking a clear shift from automation to autonomy.

The market data underscores this transformation. The global AI agents market, valued at $5.26 billion in 2024, is growing at a 46.3% CAGR. According to Futurum Research’s 2025 market overview of agentic AI platforms, 89% of CIOs now rank agent-based AI as a top strategic priority for productivity and workflow automation.

Yet autonomy introduces complexity. Unlike static automation, agents learn, adapt and evolve — which means they can behave unpredictably. Their nondeterministic decision-making, tool dependencies and continuous evolution raise pressing questions around reliability, governance and safety. How do you monitor a system that reasons on its own? And how do you ensure autonomous agents remain efficient, compliant and cost-effective at scale?

This is where agent operations (AgentOps) becomes indispensable. AgentOps is the emerging discipline for managing, monitoring, evaluating and optimizing AI agents throughout their lifecycle — from design and orchestration to observability and continuous improvement. Much like DevOps and MLOps transformed software and model operations, AgentOps provides the operational backbone for autonomous systems, ensuring they are explainable, measurable and safe.

Adoption is accelerating rapidly. According to Futurum Research, 12–18% of organizations have already formalized AgentOps practices, especially in regulated sectors, advanced AI labs, and digital-native enterprises—driven largely by mission-critical use cases such as customer service automation, FinOps, threat analysis, and R&D co-pilot workflows. Another 45% of large companies plan to pilot AgentOps workflows within the next 18 months.

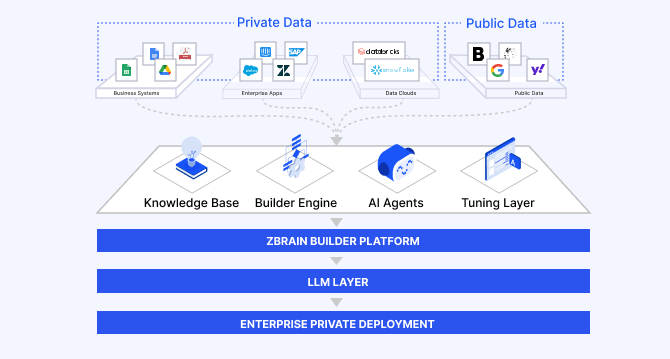

Agentic AI orchestration platforms such as ZBrain Builder are defining how enterprises operationalize this paradigm. ZBrain Builder delivers enterprise-grade agent orchestration, monitoring and evaluation, providing real-time visibility into agent behavior, performance metrics, costs and compliance. In this article, we explore how AgentOps is evolving as a critical enterprise discipline — and how ZBrain Builder capabilities empower organizations to build, deploy, monitor and optimize autonomous agents with confidence, precision and measurable ROI.

What is AgentOps? A deep dive into principles and practices

The evolution of AI has progressed from static, rule-based systems to autonomous agents capable of perceiving, reasoning, acting and learning. These intelligent entities, often leveraging large language models, memory modules and tool-use capabilities, represent the next stage of enterprise automation — where AI doesn’t just execute tasks but makes contextual decisions to achieve defined outcomes.

However, this autonomy introduces an entirely new operational paradigm. Traditional DevOps and MLOps frameworks were designed for deterministic systems, where software behaves predictably and traditional ML models follow consistent inference patterns. AI agents, in contrast, are dynamic, context-aware and nondeterministic. They reason independently, interact with APIs and humans, and continuously evolve based on feedback and new data.

What is AgentOps?

AgentOps (agent operations) is the emerging discipline that defines how organizations build, observe and manage the lifecycle of autonomous AI agents. It extends the operational philosophies of DevOps, MLOps and LLMOps into a new frontier — one where software components can reason, act and adapt independently.

It establishes a structured framework for monitoring, evaluating, debugging, securing and governing agentic systems. AgentOps ensures that agent behavior remains explainable, measurable and aligned with business and compliance objectives. Just as DevOps unified development and operations, and MLOps standardized the deployment of machine learning models, AgentOps brings the same operational rigor to intelligent autonomy.

Why AgentOps is needed — and how it extends DevOps and MLOps

As enterprises accelerate the adoption of agentic AI to automate workflows, generate insights and make contextual decisions, complexity scales exponentially. Unlike conventional software, AI agents are dynamic and adaptive — they learn from context, collaborate with other agents and act without explicit human direction.

This autonomy introduces new challenges: unpredictable reasoning, external dependencies, security vulnerabilities, compliance risks and rising operational costs. Traditional DevOps and MLOps pipelines were never designed for such stochastic, evolving behavior.

AgentOps fills this gap. It provides a continuous operational framework that integrates observability, evaluation, governance, feedback, security, resilience and versioning across every stage of an agent’s lifecycle. By doing so, it transforms autonomous AI from a research experiment into a reliable, accountable and scalable enterprise layer.

AgentOps sits at the intersection of modern operational disciplines:

- DevOps optimizes software delivery and integration.

- MLOps ensures model reliability and lifecycle management.

- LLMOps standardizes operations for large language models.

- AgentOps now extends this lineage to intelligent, adaptive systems that can reason, plan and act autonomously.

How AgentOps helps

- Comprehensive observability across agent behavior, user interactions, reasoning steps, external API calls and tool usage — all in one operational layer.

- Real-time monitoring to detect anomalies as sessions unfold and allow teams to intervene proactively.

- Cost control and budgeting with visibility into token usage, API expenses and alerts when costs spike or exceed defined thresholds.

- Failure detection that pinpoints whether errors originate from agent logic, external tools or upstream dependencies.

- Tool and API usage analytics showing which tools were invoked, how often and at what cost.

- Session-wide metrics including latency, token consumption, success rate and overall execution cost for each agent session.

- Operational safety and compliance, ensuring agents remain efficient, predictable, secure and aligned with organizational expectations.
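The cost-control point above can be made concrete with a small sketch. It assumes hypothetical per-token prices and a hypothetical `LLMCall` record; real prices and usage fields depend on the model provider, and nothing here reflects a specific platform's API.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical USD prices per 1,000 tokens; real prices depend on the model and provider.
PRICE_PER_1K_PROMPT = 0.003
PRICE_PER_1K_COMPLETION = 0.006


@dataclass
class LLMCall:
    prompt_tokens: int
    completion_tokens: int


def call_cost(call: LLMCall) -> float:
    """Cost of one LLM call under the assumed per-token prices."""
    return (
        call.prompt_tokens / 1000 * PRICE_PER_1K_PROMPT
        + call.completion_tokens / 1000 * PRICE_PER_1K_COMPLETION
    )


def session_cost(calls: Iterable[LLMCall]) -> float:
    """Total cost of every LLM call made during one agent session."""
    return sum(call_cost(c) for c in calls)


def exceeds_budget(calls: Iterable[LLMCall], budget_usd: float) -> bool:
    """True when the session has spent more than the configured threshold."""
    return session_cost(calls) > budget_usd


calls = [LLMCall(1000, 500), LLMCall(2000, 1000)]
if exceeds_budget(calls, budget_usd=0.01):
    print(f"Budget alert: session cost is ${session_cost(calls):.4f}")
```

In practice the threshold check would run as calls arrive, so an alert fires while the session is still in progress rather than after the fact.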

The need for operational visibility

AgentOps frameworks provide the visibility required to answer questions such as:

- Did the agent reference the correct knowledge source?

- Which APIs were used, and what were the latency and cost per call?

- What was the token usage and overall cost per LLM interaction?

- Did the agent comply with security and privacy requirements?

- How did it handle unexpected inputs or failures?

- How effectively did it collaborate with other agents or human operators?

AgentOps delivers session replays, tool-usage analytics, latency and cost metrics, and traceable reasoning chains that reveal how decisions were made. This visibility forms the foundation for continuous improvement, cost optimization and operational safety.

Streamline your operational workflows with ZBrain AI agents designed to address enterprise challenges.

The core principles of AgentOps: Building trust and control in autonomous AI

At its core, AgentOps is built on seven interconnected principles that ensure autonomy remains reliable, compliant and measurable. Observability feeds evaluation, which in turn informs optimization, while governance and security enforce trust. Feedback and resilience maintain adaptability, while versioning ensures traceability and accountability. Together, they turn agentic AI from an experimental concept into a production-grade discipline capable of running business-critical operations with predictability and accountability.

1. Observability: Making the invisible visible

The cornerstone of AgentOps is observability — the ability to make the behavior of an autonomous agent fully transparent. Unlike traditional logging, which captures isolated events, observability traces how an agent processes inputs, calls tools and produces outputs over time.

This includes:

- Comprehensive signal capture: Recording every input received (e.g., a customer’s query), meticulously tracing each step of its reasoning process (e.g., how it interpreted the intent, what internal thoughts it generated), documenting specific external tool calls (e.g., querying a CRM, accessing a knowledge base) and their precise results, and capturing all intermediate outputs as well as the final response. Each action, from a tool invocation to a model’s “thought” sequence, becomes traceable.

- Artifact-level tracing: AgentOps distinguishes between design-time artifacts (e.g., prompt templates, tool definitions, model configurations) and runtime artifacts (e.g., generated goals, evolving plans, final outputs). Tracking both provides complete visibility into how static design interacts with dynamic execution.

- Telemetry on execution: Capturing metadata such as timestamps, duration, status, error types, token usage, API latency and cost for each reasoning step.

The objective of observability is to turn agent behavior from a black box into an explainable and auditable process. This level of visibility is indispensable for debugging, performance optimization, root-cause analysis and maintaining operational safety and compliance.
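As an illustration of step-level telemetry, the sketch below wraps each agent step in a recorder that captures timing, status and error type. The `Span` and `Trace` names are hypothetical and chosen for this example; production systems would more likely rely on an established tracing library.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class Span:
    name: str
    kind: str                 # e.g. "reasoning", "tool_call", "output"
    started_at: float         # wall-clock timestamp (seconds since epoch)
    duration_s: float
    status: str               # "ok" or "error"
    error_type: Optional[str] = None


@dataclass
class Trace:
    session_id: str
    spans: List[Span] = field(default_factory=list)

    def record(self, name: str, kind: str, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        """Run one step and append a span describing it, whether it succeeds or fails."""
        started_at = time.time()
        t0 = time.perf_counter()
        status, error_type = "ok", None
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            status, error_type = "error", type(exc).__name__
            raise  # the caller still sees the original failure
        finally:
            self.spans.append(
                Span(name, kind, started_at, time.perf_counter() - t0, status, error_type)
            )


trace = Trace(session_id="demo-session")
answer = trace.record("normalize_query", "reasoning", str.lower, "Where Is My Order?")
for span in trace.spans:
    print(span.name, span.kind, span.status, f"{span.duration_s:.6f}s")
```

Token usage and cost could be added as further fields on `Span` once the model client exposes them.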

2. Evaluation and optimization: Continuously sharpening performance

Observation becomes meaningful only when paired with structured evaluation. Evaluation defines how an agent’s success is measured; optimization uses those insights to improve efficiency, accuracy and cost-effectiveness over time. It involves refining prompts, adjusting workflow logic, tightening guardrails, and improving task structures based on measurable insights.

Key aspects include:

- Final response evaluation: Assessing the correctness, relevance and adherence of the agent’s final answer or action to defined criteria.

- Step-by-step evaluation: Analyzing decisions, tool calls and intermediate actions to verify that each step is appropriate and follows expected patterns.

- Trajectory evaluation: Examining the full sequence of actions and tool calls to determine whether the agent followed a safe, efficient path to its outcome — not just whether the outcome was acceptable.

When these assessments run continuously, they reveal trends such as quality drift, recurring failure modes, unexpected latency spikes or rising operational costs. These insights guide practical optimization choices: refining prompts, restructuring workflows, rethinking escalation logic or improving evaluation criteria. Through this feedback loop, agents evolve in a controlled, measurable way — maintaining accuracy, reliability and operational predictability as requirements change.
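Trajectory evaluation can be sketched as a few deterministic checks over the sequence of tool calls. The tool names, the `evaluate_trajectory` function and the three criteria used here (allowed tools, required order, step budget) are assumptions for illustration; real evaluations typically combine such rules with quality scoring.

```python
from typing import Dict, List, Set


def evaluate_trajectory(
    tool_calls: List[str],
    allowed_tools: Set[str],
    required_in_order: List[str],
    max_steps: int,
) -> Dict[str, object]:
    """Check the path an agent took, not just its final answer."""
    disallowed = [t for t in tool_calls if t not in allowed_tools]

    # Required tools must appear as an ordered subsequence of the actual calls.
    remaining = iter(tool_calls)
    in_order = all(any(call == req for call in remaining) for req in required_in_order)

    within_budget = len(tool_calls) <= max_steps
    return {
        "disallowed_calls": disallowed,
        "required_order_ok": in_order,
        "within_step_budget": within_budget,
        "passed": not disallowed and in_order and within_budget,
    }


ALLOWED = {"search_kb", "query_crm", "send_reply"}
report = evaluate_trajectory(
    ["search_kb", "query_crm", "send_reply"],
    allowed_tools=ALLOWED,
    required_in_order=["search_kb", "send_reply"],
    max_steps=5,
)
print(report)
```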

3. Governance: Implementing responsible autonomy with guardrails

As agents act more independently, governance ensures that their autonomy remains aligned with organizational intent. It is the ethical, regulatory and procedural backbone of AgentOps — ensuring that intelligent systems act safely, fairly and accountably.

- Proactive guardrails: Define the limits of acceptable behavior before deployment. These guardrails govern what inputs agents can process, which APIs they can access and how outputs must conform to ethical and compliance standards.

- Policy and compliance alignment: Ensuring agents operate within applicable privacy laws (such as GDPR and HIPAA), corporate policies and external regulatory expectations, so that their actions remain auditable, fair and transparent.

- Fallback and escalation paths: For ambiguous or high-risk cases, governance frameworks provide mechanisms for safe defaults, graceful degradation or human-in-the-loop escalation — ensuring accountability at every decision point.

- Audit-ready behavior: Maintaining clear records of guardrail checks, actions taken and outcomes so organizations can demonstrate how safety and compliance are enforced.

With strong governance, organizations can allow meaningful autonomy while retaining control, accountability and compliance.
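A minimal sketch of how guardrails, escalation and audit records might fit together is shown below. The tool allowlist, the risk threshold and the `risk_score` field (assumed to come from some upstream classifier) are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"   # hand off to a human reviewer
    BLOCK = "block"


@dataclass
class ProposedAction:
    tool: str
    risk_score: float  # 0.0 to 1.0, assumed to be supplied by an upstream risk classifier


# Hypothetical policy values for illustration only.
ALLOWED_TOOLS = {"send_email", "issue_refund", "search_kb"}
ESCALATION_THRESHOLD = 0.7

audit_log: List[Dict[str, object]] = []


def check_guardrails(action: ProposedAction) -> Decision:
    """Apply the policy before the action runs and record the outcome for audit."""
    if action.tool not in ALLOWED_TOOLS:
        decision = Decision.BLOCK
    elif action.risk_score >= ESCALATION_THRESHOLD:
        decision = Decision.ESCALATE
    else:
        decision = Decision.ALLOW
    audit_log.append(
        {"tool": action.tool, "risk_score": action.risk_score, "decision": decision.value}
    )
    return decision


print(check_guardrails(ProposedAction("issue_refund", 0.9)).value)
```

Recording every decision, including the allowed ones, is what makes the behavior audit-ready rather than merely restricted.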

4. Feedback: Maintaining continuous alignment

Feedback is the indispensable mechanism in AgentOps that closes the communication loop between users, developers and the agents themselves. It is the lifeblood of an agent’s continuous evolution, ensuring that its learning and adaptation remain precisely aligned with organizational intent and evolving business objectives. Without effective feedback channels, autonomous agents risk drifting into misaligned or undesirable behaviors.

- Explicit human feedback: This involves direct user input, providing high-quality, targeted insights. Examples include simple ratings (e.g., thumbs up/down), detailed qualitative comments on agent outputs, specific annotations on problematic actions or direct corrections to agent responses.

- Implicit human feedback: This captures indirect measures of user behavior, offering high-quantity, real-world signals. Examples include the time a user spends engaging with an agent’s output, click-through rates on suggested links, whether a user accepts or rejects a generated piece of content, or subsequent user actions that indicate satisfaction or dissatisfaction.

- Automated telemetry: System-generated data provides objective insights into agent performance, including error rates, latency spikes, resource consumption patterns, and the frequency of specific tool calls.

- Human-in-the-loop (HITL) safeguards: HITL is a core feedback mechanism in AgentOps, ensuring that agents can pause, request clarification or hand off decisions to a human reviewer when uncertainty or risk is detected. HITL checkpoints play a critical role in high-stakes or compliance-sensitive workflows, preventing undesired actions and keeping the system firmly under human oversight.

- Comparative experiments: A/B testing of different prompts, flows or models to see which configuration performs better in real use.

- Self-evaluation mechanisms: Agents can be designed to perform basic self-checks using a secondary LLM pass or validator prompt — verifying completeness, checking format adherence, identifying ambiguity, or flagging obvious errors — before returning their output. While not a substitute for human oversight, these internal checks help reduce trivial errors.

The collected feedback is not merely stored; it is systematically integrated to drive tangible improvements in the agent’s performance and equip developers with actionable insights for refinement.
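One simple way to turn explicit feedback into an actionable signal is to compare thumbs-up rates across configurations in an A/B test. The sketch below assumes feedback arrives as hypothetical `(variant, thumbs_up)` pairs; with small samples the difference may not be statistically meaningful.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def satisfaction_by_variant(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of thumbs-up ratings for each prompt or flow variant."""
    counts = defaultdict(lambda: [0, 0])  # variant -> [thumbs_up, total]
    for variant, thumbs_up in events:
        counts[variant][0] += int(thumbs_up)
        counts[variant][1] += 1
    return {variant: ups / total for variant, (ups, total) in counts.items()}


events = [("prompt_a", True), ("prompt_a", False), ("prompt_b", True), ("prompt_b", True)]
print(satisfaction_by_variant(events))
```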

5. Resilience: Keeping agentic workflows stable and robust under uncertainty

Resilience ensures that autonomous agents remain reliable even when real-world conditions are imperfect. Instead of trying to eliminate every possible error, resilient systems are designed to recover gracefully, avoid runaway behavior and maintain safe, predictable operation under uncertainty.

Resilience practices include:

- Prompt and input robustness: Agents must be capable of handling unclear or incomplete inputs without collapsing the workflow. This includes asking clarifying questions, applying safe default behaviors or escalating when the intent cannot be resolved — preventing abrupt or misleading outputs.

- Tolerance for external tool and API failures: Real-world API dependencies may return errors, timeouts or invalid responses. Resilient agents implement bounded retries, backoff strategies and safe fallbacks so the agent does not repeatedly call a failing tool or create runaway loops.

- Graceful degradation: A core tenet of resilience, ensuring that if certain components fail or perform suboptimally, the agent system can continue operating at reduced capacity or with limited functionality, rather than experiencing a catastrophic crash.

By embedding these resilience practices into the foundation of agent design, organizations ensure that autonomous systems operate safely, consistently and predictably — even when unexpected failure conditions occur.
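Bounded retries with exponential backoff and a safe fallback can be sketched in a few lines. The function name, default values and the injectable `sleep` parameter are assumptions made for this example; the point is that the number of attempts is capped so a failing tool cannot create a runaway loop.

```python
import time
from typing import Any, Callable


def call_with_retries(
    fn: Callable[[], Any],
    max_attempts: int = 3,
    base_delay_s: float = 0.5,
    fallback: Any = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Call fn up to max_attempts times, doubling the wait between attempts.

    Returns the fallback value if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts:
                break  # attempts exhausted: degrade gracefully instead of looping
            sleep(base_delay_s * 2 ** (attempt - 1))
    return fallback


def unreliable_tool() -> str:
    raise TimeoutError("upstream API timed out")


result = call_with_retries(unreliable_tool, fallback="cached answer", sleep=lambda s: None)
print(result)
```

Passing `sleep` as a parameter keeps the sketch testable without real delays; a production version would likely also add jitter and catch only the error types that are worth retrying.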

6. Security: Safeguarding agents and data

Autonomous agents operate across distributed networks, APIs and sensitive datasets — making security a foundational concern. AgentOps integrates security by design, ensuring that intelligence operates within trusted and protected boundaries.

Security in AgentOps emphasizes:

- Strict access control for tools: Implementing robust authentication and authorization protocols to ensure agents access only external tools and APIs for which they have explicit, least-privilege permissions, and that these accesses are logged and monitored.

- Sensitive data handling: Ensuring data privacy and regulatory compliance (e.g., GDPR, CCPA) throughout the agent’s operational lifecycle. This includes the secure handling of data at rest, in transit (through encrypted communications), and during processing, particularly when interacting with knowledge bases that contain proprietary or personally identifiable information (PII).

- Secure interaction patterns: Enforcing safe patterns for tool calls, preventing unauthorized actions and monitoring for suspicious usage.

- Audit and monitoring: Maintaining detailed logs and audit trails of agent interactions. These records allow rapid detection of suspicious activity, support forensic analysis in the event of a breach and inform continuous improvement of the security posture.

By integrating security as a core design principle, AgentOps ensures that autonomous agents operate not only effectively but also safely — protecting valuable data, maintaining user trust and mitigating enterprise risks.
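As one small example of sensitive data handling, text can be redacted before it is written to logs or traces. The sketch below masks email addresses only, using a deliberately simple regular expression; it is an illustration, not a complete PII detector.

```python
import re

# Simplified email pattern for illustration; real PII detection needs broader coverage.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_emails(text: str) -> str:
    """Replace email addresses with a placeholder before the text is stored."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


print(redact_emails("Customer jane.doe@example.com asked about invoice 1042."))
```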

7. Versioning and reproducibility: Managing evolution with precision

Versioning and reproducibility provide organizations with control over how agents evolve and how past behaviors can be recreated for analysis, debugging, or compliance.

Core elements include:

- Holistic version management: Tracking versions of prompts, flows, and model choices.

- Traceability and rollback: Understanding dependency relationships between artifacts and being able to restore a previous stable configuration when needed.

- Controlled reproducibility: Capturing enough context (configuration, model, parameters and relevant logs) to understand why an agent behaved a certain way, even if exact token-level determinism is not guaranteed.

With structured versioning in place, organizations can iterate quickly while preserving accountability, stability and explainability.
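A lightweight way to support traceability is to derive a fingerprint from the full agent configuration and attach it to every session log. The configuration fields below are hypothetical; the sketch relies only on the standard `json` and `hashlib` modules.

```python
import hashlib
import json
from typing import Any, Dict


def config_fingerprint(config: Dict[str, Any]) -> str:
    """Short, stable hash of an agent configuration (prompt, model, parameters)."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


config_v1 = {
    "prompt_template": "You are a support agent. Answer using the knowledge base.",
    "model": "example-model",   # hypothetical model name
    "temperature": 0.2,
    "tools": ["search_kb", "query_crm"],
}
config_v2 = {**config_v1, "temperature": 0.7}

print(config_fingerprint(config_v1), config_fingerprint(config_v2))
```

Any change to a prompt, model choice or parameter yields a different fingerprint, so a logged session can be matched to the exact configuration that produced it and rolled back if needed.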

Bringing it all together

These seven principles form the structural backbone of AgentOps, defining how autonomous agents should be observed, evaluated, governed, secured, and improved over time. Observability makes agent behavior transparent; evaluation brings measurable quality; governance and security create safe boundaries; feedback and resilience keep agents aligned and functioning under real-world uncertainty; and versioning provides the control needed to evolve systems without losing stability.

Together, these principles shift agentic AI from an experimental concept to a disciplined operational practice. By applying them consistently across the agent lifecycle — from orchestration to post-deployment oversight — organizations gain the clarity, trust and control required to run autonomous agents at scale. This ensures that as agents grow more capable and collaborative, they remain reliable, predictable and aligned with business intent.


ZBrain Builder’s AgentOps capabilities: A comprehensive overview

ZBrain Builder extends AgentOps foundations with a robust suite of platform capabilities that enable enterprises to build, deploy, monitor, evaluate, govern and continuously improve autonomous agents and multiagent crews with precision. Below is a detailed breakdown of ZBrain Builder’s operational capabilities mapped to core AgentOps principles.

1. Agentic orchestration: Building autonomous agents and multiagent crews

ZBrain Builder provides a structured, enterprise-ready, low-code environment for building and deploying autonomous agents — whether you’re starting with ready-made capabilities, designing fully custom logic or orchestrating multiagent workflows. This capability focuses on how teams build, configure and operationalize agents in production.

Pre-built AI agents: Fast deployment via the agent store

ZBrain Builder’s Agent Store provides a library of ready-to-use agents built for common business tasks. Each agent includes preconfigured logic, tools, and workflows, allowing teams to deploy them quickly with minimal setup. Deployment simply involves naming the agent, setting access controls, defining input sources and triggers, and optionally sharing a public link or inviting operators. Because these agents are production-ready from day one, enterprises can validate use cases rapidly while maintaining full visibility through the ZBrain Builder interface.

Custom AI agents: Full control and tailored automation

For specialized use cases, ZBrain Builder enables teams to build custom AI agents with complete control over behavior, logic, inputs and integrations. Users can assemble workflows using Flows, integrate default or custom-built tools, connect external systems and define clear instructions that guide agent behavior. Sample inputs can be used during testing to refine behavior before the agent is deployed. Once configured, custom agents can be triggered manually or on a schedule, and their outputs can be routed to external destinations or chained to additional agents for downstream processing. This level of configurability enables enterprises to create agents that accurately reflect their unique operational and domain-specific requirements.

Agent crews: Structured orchestration for multiagent systems

For complex, multistep workflows, ZBrain Builder introduces Agent Crews — a hierarchical orchestration model where a supervisor agent coordinates multiple specialized child agents. Each agent in the crew can carry its own tools, prompts and integrations, while the supervisor governs task delegation, sequencing and validation. Crews can be designed using frameworks such as LangGraph, Google ADK, Microsoft Agent Framework or Semantic Kernel, giving teams the flexibility to choose orchestration patterns that match their automation needs. A visual canvas allows teams to map relationships, connect agents and define workflow logic, while the built-in test environment makes it easy to simulate how the crew collaborates before it is deployed. The result is a scalable, modular approach to agentic automation that breaks complex tasks into well-defined, role-based responsibilities.

2. Evaluation and monitoring: Ensuring continuous quality and reliability

As autonomous agents take on more complex, business-critical workflows, continuous oversight becomes essential. ZBrain Builder’s Monitor module provides this foundation by turning evaluation into an automated, always-on capability. Instead of relying on occasional manual checks, the module captures agent inputs and outputs, evaluates them against a defined set of metrics and surfaces performance trends in real time.

ZBrain Builder’s Monitor module supports four broad categories of metrics, giving enterprises a multidimensional view of agent quality:

- LLM-based metrics such as response relevancy and faithfulness, which assess whether outputs are contextually appropriate and grounded in source data, critical for preventing hallucinations in RAG and conversational workflows.

- Non-LLM metrics, including health checks, exact match, F1 score, Levenshtein similarity and ROUGE-L, offering deterministic validation for structured or expected outputs.

- Performance metrics, centered on response latency, ensuring agents meet operational speed requirements, which is especially important in user-facing or real-time scenarios.

- LLM-as-a-judge metrics such as creativity, helpfulness and clarity, which emulate human evaluation for qualitative or narrative tasks at scale.

Together, these metrics allow ZBrain Builder to detect quality drift, benchmark model performance and maintain consistent output standards across all deployed agents. The Monitor module consolidates monitoring events, logs, evaluation rules and notifications into a single operational layer, giving teams clear visibility into system health and the ability to respond quickly when failures or anomalies occur. Notification Flows automatically send alerts through the default channel (Gmail) or custom channels whenever an evaluation event requires attention, keeping teams informed about failures, anomalies or important changes in real time so they can troubleshoot and resolve issues promptly.

By embedding continuous evaluation into the AgentOps lifecycle, ZBrain Builder ensures that autonomous agents operate with measurable accuracy, reliability and business alignment at every stage of deployment.
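To show what the deterministic (non-LLM) metrics measure, here is a minimal sketch of exact match, token-level F1 and Levenshtein similarity. These are common textbook definitions; how ZBrain Builder computes its own metrics is not described here, so normalization and tokenization details are assumptions.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> bool:
    """True when the two strings are identical after trimming whitespace."""
    return prediction.strip() == reference.strip()


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (whitespace tokenization)."""
    pred, ref = prediction.split(), reference.split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits needed to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = curr
    return prev[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """Edit distance scaled to the range 0..1, where 1.0 means identical."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


print(exact_match("42", " 42 "), token_f1("the cat sat", "the cat ran"))
print(levenshtein("kitten", "sitting"), levenshtein_similarity("kitten", "sitting"))
```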

3. Comprehensive performance dashboard for agent observability

A key element of AgentOps in ZBrain Builder is maintaining clear, continuous visibility into how agents behave once deployed. ZBrain Builder supports this through performance dashboards that translate execution activity into actionable metrics — covering utilization, efficiency, user satisfaction and cost. These dashboards help teams understand how agents perform in real workloads, enabling consistent operations and targeted optimization.

1. Centralized agent dashboard

ZBrain’s centralized Agents Dashboard provides a clear, unified operational view of agents, combining identity, type, accuracy, and action required into a single interface. This consolidated snapshot helps teams quickly assess which agents are performing reliably and which require attention due to stalled, pending or failed tasks. By surfacing only the most relevant indicators — such as completed work, last processed task timestamp and required actions — the Dashboard streamlines oversight and supports faster decision-making. It enables organizations to maintain continuity, monitor progress in real time and ensure that agents remain aligned with expected performance standards.

2. Comprehensive performance dashboards: Operational visibility for agents

ZBrain Builder enables performance evaluation of AI agents through built-in monitoring metrics that provide visibility into utilization, efficiency and cost. These metrics help teams understand how agents perform in real-world scenarios and identify opportunities for optimization.

Comprehensive metrics for agent performance

ZBrain Builder’s performance metrics provide a consolidated view of an agent’s operational behavior. These include:

- Utilized time: Total duration the agent spent actively processing tasks, reflecting overall usage.

- Average session time: The typical time required to complete a processing session, useful for identifying delays or throughput improvements.

- Satisfaction score: Feedback-based quality measurement derived from user ratings within the Dashboard or chat interface.

- Tokens used: The cumulative token consumption across sessions, directly tied to compute usage and cost.

- Cost: The total monetary expense across sessions, helping teams track spend and manage budgets effectively.

These metrics allow teams to gauge performance trends, monitor reliability and maintain budget awareness at a glance.

Session-level insight for granular analysis

Beyond summary metrics, ZBrain Builder records the details of each processing session, giving full traceability into how individual tasks were executed. Each session record captures essential execution details, including session ID, record name, start and end date, session time, status, tokens used and cost. These fields provide a complete snapshot of individual task executions, helping teams trace performance, measure efficiency and correlate resource usage with outcomes.
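The relationship between session records and the summary metrics can be sketched as a simple aggregation. The `SessionRecord` class below mirrors the fields listed above but is a hypothetical structure for illustration, not ZBrain Builder's actual data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class SessionRecord:
    session_id: str
    record_name: str
    start: datetime
    end: datetime
    status: str
    tokens_used: int
    cost_usd: float


def summarize(sessions: List[SessionRecord]) -> Dict[str, float]:
    """Roll individual session records up into dashboard-style totals."""
    durations = [(s.end - s.start).total_seconds() for s in sessions]
    total_time = sum(durations)
    return {
        "utilized_time_s": total_time,
        "average_session_time_s": total_time / len(sessions) if sessions else 0.0,
        "tokens_used": sum(s.tokens_used for s in sessions),
        "cost_usd": sum(s.cost_usd for s in sessions),
    }


sessions = [
    SessionRecord("s-1", "invoice-001", datetime(2025, 1, 1, 9, 0, 0),
                  datetime(2025, 1, 1, 9, 0, 30), "Completed", 1200, 0.02),
    SessionRecord("s-2", "invoice-002", datetime(2025, 1, 1, 9, 5, 0),
                  datetime(2025, 1, 1, 9, 6, 30), "Failed", 3400, 0.04),
]
print(summarize(sessions))
```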

4. Operational oversight in agent lifecycle: Task monitoring and queue management

ZBrain Builder provides clear, real-time visibility into how tasks progress through an agent’s workflow. Each item in the queue is marked with a color-coded status — green for completed tasks, yellow for tasks in progress and red for tasks that have failed due to processing issues.

The queue panel further allows tasks to be filtered by Processing, Pending, Completed or Failed, making it easy to isolate specific items and understand where delays or errors have occurred. These capabilities form a core part of operational oversight within AgentOps, helping teams maintain control and continuity throughout the agent lifecycle.
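The status model behind such a queue can be sketched with an enumeration and a filter. The task dictionaries and names are hypothetical; the color mapping follows the description above, which does not state a color for pending tasks.

```python
from enum import Enum
from typing import Dict, List, Optional


class TaskStatus(Enum):
    PENDING = "Pending"
    PROCESSING = "Processing"
    COMPLETED = "Completed"
    FAILED = "Failed"


# Colors as described in the text; no color is specified for pending tasks.
STATUS_COLOR: Dict[TaskStatus, Optional[str]] = {
    TaskStatus.COMPLETED: "green",
    TaskStatus.PROCESSING: "yellow",
    TaskStatus.FAILED: "red",
    TaskStatus.PENDING: None,
}


def filter_tasks(tasks: List[dict], status: TaskStatus) -> List[dict]:
    """Return only the queue items that are in the given state."""
    return [t for t in tasks if t["status"] is status]


queue = [
    {"id": 1, "status": TaskStatus.COMPLETED},
    {"id": 2, "status": TaskStatus.FAILED},
    {"id": 3, "status": TaskStatus.PROCESSING},
    {"id": 4, "status": TaskStatus.FAILED},
]
failed_ids = [t["id"] for t in filter_tasks(queue, TaskStatus.FAILED)]
print(failed_ids)
```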

5. Multiagent crew inspection and performance assessment

ZBrain Builder extends its AgentOps capabilities to multiagent workflows through the Agent Crew framework, allowing enterprises to coordinate several specialized agents under a supervisor for structured, role-based execution.

The Crew Activity panel offers a chronological trace of every action taken by each sub-agent — capturing planning text, decisions and any tool or API calls. This step-by-step transparency helps teams validate workflow logic, confirm correct task handoffs and troubleshoot breakdowns in collaborative execution. Here’s what you can inspect:

- Real-time reasoning text (“thinking”) for each step.

- Actions selected by the crew or sub-agents.

- Details of tool or API calls invoked during execution.

- Chronological session logs for tracing workflow progression.

Complementing this, the Crew Performance Dashboard surfaces session-level metrics that quantify how the crew performs over time, making it possible to assess efficiency, consistency and resource usage across the full workflow.

This Crew Performance Dashboard supports these metrics:

- Utilized time: Total active processing time across crew sessions.

- Average session time: Typical duration required to complete tasks.

- Satisfaction score: User-provided feedback aggregated across sessions.

- Tokens used: Total token consumption for all model calls.

- Cost: Estimated compute expense based on token usage.

- Session details: Session ID, record name, and timestamps.

Together, these capabilities support mature AgentOps practices such as execution auditability, behavioral traceability and continuous monitoring of multiagent systems.

6. Agent and crew testing: Validating logic before deployment

ZBrain Builder provides built-in testing tools that let teams validate how agents and multiagent crews behave before moving into production. For agents, users can trigger test executions directly from the Agents Dashboard to preview how the agent processes inputs, runs its defined steps and interacts with tools — allowing quick refinement of prompts, instructions or flow logic.

For multiagent crews, the “Test Crew” panel in the create crew structure step simulates a full run across the supervisor and child agents, showing delegation flow, intermediate responses and final output in real time. These controlled test runs surface reasoning traces, tool calls and execution order exactly as configured, enabling teams to verify behavior, adjust agent roles and ensure workflow correctness prior to deployment. This capability helps teams confirm structural and logical soundness without touching live systems.

7. Security, governance and controlled agent execution

A core dimension of AgentOps in ZBrain Builder is ensuring that every autonomous agent operates within a secure, transparent and auditable environment. ZBrain Builder embeds governance throughout the agent lifecycle, giving enterprises full oversight into what agents do, how they execute tasks and whether their behavior aligns with internal policies and compliance requirements.

Enhanced execution oversight with full visibility into agent activity

ZBrain Builder provides deep operational visibility into how agents behave — from task intake to step-by-step execution — ensuring organizations can govern agent operations with precision. Through the Agents Dashboard, teams can instantly access key operational metadata such as agent type, task history and last execution timestamps.

Within each agent’s activity interface, ZBrain Builder records and presents:

- Execution logs: Chronological records of each step performed.

- Input/output traces: Full visibility into processed data and generated results.

- Token and cost transparency: Per-model consumption metrics for budget control.

- Task queue monitoring: Real-time view of pending, processing, completed or failed tasks.

- Task deletion controls: Safe removal of stuck or invalid tasks via confirmation prompts.

This granular, end-to-end observability allows teams to monitor, investigate and optimize agent behavior — forming a foundational governance mechanism for enterprise AI.

Granular access governance

Security begins with access control. ZBrain Builder applies role-based access control (RBAC) and enterprise identity provider integration (OAuth) to regulate who can create, edit, deploy, operate and monitor agents, ensuring that only authorized personnel can modify agent logic, connect data sources or manage production tasks.
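
To make the idea of role-based access concrete, here is a minimal sketch of a deny-by-default permission check over the five actions named above. The role names and the `ROLE_PERMISSIONS` mapping are assumptions made for illustration; the roles ZBrain Builder actually defines are not described here.

```python
from enum import Enum


class Action(Enum):
    CREATE = "create"
    EDIT = "edit"
    DEPLOY = "deploy"
    OPERATE = "operate"
    MONITOR = "monitor"


# Hypothetical roles and grants, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {Action.MONITOR},
    "operator": {Action.OPERATE, Action.MONITOR},
    "builder": {Action.CREATE, Action.EDIT, Action.OPERATE, Action.MONITOR},
    "admin": set(Action),
}


def is_allowed(role: str, action: Action) -> bool:
    """Return True only if the role explicitly grants the action."""
    # Unknown roles map to an empty set, so they are denied by default.
    return action in ROLE_PERMISSIONS.get(role, set())
```

In this sketch a builder can edit agent logic but cannot deploy it, which keeps production changes behind a separate, narrower role.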

Compliance and security standards

ZBrain’s ISO 27001:2022 and SOC 2 Type II certifications ensure that all agent workflows operate within audited, industry-standard controls for security, availability and confidentiality.

ZBrain Builder’s AgentOps capabilities operate on top of the platform’s enterprise-grade security foundation — including RBAC, least-privilege principles, encryption, tenant isolation, compliance certifications, secure data handling and storage, and vulnerability management — ensuring that all agent activity, logs and workflows are protected by consistent, end-to-end security and governance controls.

8. Human-in-the-loop feedback and task refinement

ZBrain Builder enables users to review and refine agent outputs directly from the agent dashboard. When a task result does not meet expectations, users can submit structured feedback through the built-in feedback panel, selecting reasons such as “Not factually correct,” “Not following company’s guidelines,” or “Response not updated,” and optionally adding specific comments.

Users can highlight areas for improvement. This feedback helps ZBrain agents identify recurring problems and refine future outputs. The platform additionally supports human approvals and feedback capture, allowing agents to send alerts and pause for manual review when needed — a useful capability for agentic workflows that require manual intervention at specific steps.
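
The pause-for-review pattern described above can be sketched as a small step runner that stops at any step marked as needing approval and resumes once a reviewer has signed off. `Step`, `RunState` and `execute` are hypothetical names for this example and do not reflect how ZBrain Builder implements approvals.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class Step:
    name: str
    run: Callable[[], str]
    needs_approval: bool = False


@dataclass
class RunState:
    outputs: List[str] = field(default_factory=list)
    paused_at: Optional[str] = None  # name of the step awaiting review, if any


def execute(steps: List[Step], approved: Set[str],
            state: Optional[RunState] = None) -> RunState:
    """Run steps in order, pausing before any unapproved gated step."""
    if state is None:
        state = RunState()
    state.paused_at = None
    # Resume after the steps that already produced output.
    for step in steps[len(state.outputs):]:
        if step.needs_approval and step.name not in approved:
            state.paused_at = step.name  # stop here and wait for a reviewer
            return state
        state.outputs.append(step.run())
    return state
```

Calling `execute` again with the same `state` and an updated `approved` set continues from the paused step instead of rerunning earlier work.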

9. Version management and controlled reproducibility

ZBrain Builder strengthens AgentOps by offering robust version control, enabling teams to track, manage, and restore earlier versions of prompts and flows with precision. This ensures agents behave consistently across iterations and enables teams to reproduce past results when investigating issues or validating changes.

Prompt versioning for safe experimentation

ZBrain Builder’s prompt editor maintains a chronological version history for each prompt, including timestamps and the user who made the change. Users can view older configurations, compare changes and restore a previous version when needed. Restoring automatically creates a new entry in the history, ensuring a clear audit trail. From the prompt overview page, teams can also compare how different prompts performed by reviewing evaluation scores, token usage and associated cost, making it easier to identify the most effective and efficient prompt variations. This provides controlled iteration of the instructions agents rely on, while preserving the ability to revert to known-good behavior.
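
The restore-as-new-entry behavior can be illustrated with an append-only history: restoring never rewrites the past, it copies an old version forward as the newest one. The `PromptHistory` class below is a hypothetical sketch under that assumption, not the platform's data model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    author: str
    saved_at: datetime
    restored_from: Optional[int] = None  # set when this entry is a restore


class PromptHistory:
    """Append-only version history; entries are never edited or removed."""

    def __init__(self) -> None:
        self._versions: List[PromptVersion] = []

    def save(self, text: str, author: str,
             restored_from: Optional[int] = None) -> PromptVersion:
        version = PromptVersion(len(self._versions) + 1, text, author,
                                datetime.now(timezone.utc), restored_from)
        self._versions.append(version)
        return version

    def restore(self, number: int, author: str) -> PromptVersion:
        if not 1 <= number <= len(self._versions):
            raise ValueError(f"no such version: {number}")
        old = self._versions[number - 1]
        # Restoring appends a new entry so the audit trail stays complete.
        return self.save(old.text, author, restored_from=old.number)

    def __len__(self) -> int:
        return len(self._versions)
```

Because every restore is itself a recorded version, an auditor can see both that a rollback happened and who performed it.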

Flow versioning

ZBrain Builder’s versioning feature for Flows ensures that agents continue running reliably even as workflows evolve. Published Flows remain locked as the live, production version, while any edits automatically generate a draft that can be adjusted without disrupting active operations. Teams can review past published versions at any time, create new drafts from earlier configurations, and clearly differentiate draft and live versions through built-in visual indicators. This controlled approach not only supports safe refinement but also enables rollback to a previously stable configuration when needed, helping organizations maintain the stability and predictability required in enterprise environments.
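
A simplified model of this draft-versus-published split is sketched below: edits only ever touch a draft copy, the live version changes only on publish, and rollback is expressed as publishing a draft created from an earlier version. The `Flow` class and its method names are assumptions for illustration.

```python
from typing import List, Optional


class Flow:
    """Keeps published versions immutable; edits go to a separate draft."""

    def __init__(self, config: dict) -> None:
        self.published: List[dict] = [dict(config)]  # oldest first
        self.draft: Optional[dict] = None

    @property
    def live(self) -> dict:
        return self.published[-1]

    def edit(self, **changes) -> None:
        # The first edit copies the live config so production is untouched.
        # (Shallow copy: enough for the flat configs used in this sketch.)
        if self.draft is None:
            self.draft = dict(self.live)
        self.draft.update(changes)

    def publish(self) -> None:
        if self.draft is None:
            raise ValueError("no draft to publish")
        self.published.append(self.draft)
        self.draft = None

    def draft_from(self, version: int) -> None:
        """Start a new draft from an earlier published version (1-based)."""
        if not 1 <= version <= len(self.published):
            raise ValueError(f"no such version: {version}")
        self.draft = dict(self.published[version - 1])
```

Rolling back is then `draft_from(n)` followed by `publish()`, which adds a new published version rather than deleting the ones in between.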


AgentOps best practices

AgentOps is not only about observability and monitoring — it’s about creating a culture of safe experimentation, controlled rollout, cost awareness and continuous improvement. The practices below combine platform-enabled capabilities with broader organizational habits that keep agents stable as they scale from prototype to production.

Start small and iterate

Begin with a single agent or a limited set of workflows before expanding. Use early deployments to refine evaluation metrics, validate monitoring rules, tune thresholds and understand recurring failure patterns. These early learnings minimize risk and establish a stable operational baseline.

Automate alerts and policy enforcement

Configure real-time notifications so teams are immediately informed when evaluations fail, latency spikes appear or unexpected tool calls or cost overruns occur. Automated alerts and escalation rules shorten time to intervention and prevent small irregularities from becoming operational incidents.
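
One simple way to express such alert rules is as named predicates evaluated against each execution event. The thresholds, the event field names and the `ALLOWED_TOOLS` set below are all hypothetical values picked for the example; in practice they would come from the team's own baselines.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical limits and tool list, for illustration only.
ALLOWED_TOOLS = {"search", "calculator"}
MAX_LATENCY_MS = 5000
MAX_COST_USD = 0.50


@dataclass
class Rule:
    name: str
    triggered: Callable[[dict], bool]


RULES = [
    Rule("evaluation_failed", lambda e: e.get("eval_passed") is False),
    Rule("latency_spike", lambda e: e.get("latency_ms", 0) > MAX_LATENCY_MS),
    Rule("unexpected_tool",
         lambda e: e.get("tool") is not None and e["tool"] not in ALLOWED_TOOLS),
    Rule("cost_overrun", lambda e: e.get("cost_usd", 0.0) > MAX_COST_USD),
]


def check(event: dict) -> List[str]:
    """Return the names of all rules the event violates, in rule order."""
    return [rule.name for rule in RULES if rule.triggered(event)]
```

The returned names can then be forwarded to whatever notification or escalation channel the team already uses.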

Use modular, well-scoped agent logic

Design agents and multiagent crews with narrow, clearly defined responsibilities. Smaller steps make execution traces easier to interpret, improve predictability and reduce troubleshooting complexity. ZBrain Builder’s modular Flows and structured sub-agent roles naturally support this clarity and composability.

Minimize data exposure and protect sensitive inputs

Pass only the information a step truly requires. Avoid including full documents, unnecessary PII or secrets in prompts or logs. Enforce retention windows, provenance tracking and consent rules across workflows to reduce operational risk and maintain compliance.
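
A minimal sketch of this practice is to build each step's payload from an explicit list of required fields, drop known secret fields outright and mask obvious PII in free text. The field names in `SECRET_KEYS` and the email-only regex are assumptions; real deployments typically need broader detection than a single pattern.

```python
import re

# Simple email pattern; real PII detection usually needs much more than this.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Hypothetical field names that must never reach a prompt or a log.
SECRET_KEYS = {"password", "api_key", "token"}


def redact_text(text: str) -> str:
    """Mask email addresses in free text."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def minimal_payload(record: dict, required: set) -> dict:
    """Keep only the fields a step needs, dropping secrets and masking emails."""
    payload = {}
    for key in required:
        if key in SECRET_KEYS or key not in record:
            continue
        value = record[key]
        payload[key] = redact_text(value) if isinstance(value, str) else value
    return payload
```

Secrets are excluded even when a step asks for them, so a misconfigured step cannot pull credentials into a prompt.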

Maintain strict change control with versioning

Treat prompts, flows and configurations as versioned artifacts. Use version history to compare performance shifts, audit changes and restore earlier configurations safely. ZBrain Builder’s built-in versioning ensures rollbacks and reproducibility without disrupting active deployments.

Adopt safe rollout patterns

Gradual deployment reduces risk for autonomous systems. Test in sandbox environments, run agents in shadow mode alongside human workflows, then move to small canary groups with rate limits before full release. Keep rollback options ready and validate behavior through logs and evaluation gates at every stage.
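
The shadow, canary and full-release stages can be sketched as a routing function that decides who serves a request. Hashing the user ID gives each user a stable bucket, so the same users stay in the canary group as the percentage grows. The stage names and the `route` helper are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in a 0-99 bucket and compare to percent."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def route(user_id: str, stage: str, canary_percent: int = 5) -> str:
    """Decide whether the human workflow or the agent serves this request."""
    if stage == "shadow":
        # The agent would run in parallel, but only its logs are kept.
        return "human"
    if stage == "canary":
        return "agent" if in_canary(user_id, canary_percent) else "human"
    if stage == "full":
        return "agent"
    raise ValueError(f"unknown stage: {stage}")
```

Rolling back in this scheme is a configuration change: setting the stage back to `shadow` returns all traffic to the human workflow.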

Monitor and optimize cost proactively

Track token usage, API consumption and session-level cost trends. Review budgets frequently and configure alerts for unexpected spending. Optimize prompts, revise workflows or adjust model choices when costs increase — ZBrain Builder’s token and cost visibility make this management straightforward.

Encourage cross-functional collaboration

AgentOps requires input from engineering, data science, operations, security and compliance teams. Shared visibility across dashboards, activity logs and monitoring events helps resolve issues earlier and ensures agents operate within organizational constraints.

Align AgentOps goals with business KPIs

Tie each agent’s purpose to measurable outcomes such as reduced processing time, improved satisfaction scores, lower cost per task or fewer escalations. ZBrain Builder’s performance dashboards and comprehensive metrics make these KPIs trackable and actionable.

Establish ownership and accountability

Assign responsible owners for agent logic, operational monitoring, security oversight and cost governance. Role-based permissions ensure the right people have access, reduce misconfiguration risk and maintain auditability.

Conduct structured reviews after failures

When failures occur, use activity traces, monitor logs and version history to analyze root causes. Document findings and adjust evaluation rules, thresholds or agent logic to prevent recurrence. These structured reviews continuously strengthen both the agent and the operational process around it.

By combining disciplined operational practices with the observability, evaluation and governance capabilities built into ZBrain Builder, organizations can run autonomous agents with confidence. These best practices create a structured environment where agents improve safely over time, remain aligned with business goals and deliver consistent, accountable performance at scale.

AgentOps challenges and future trends

AgentOps unlocks a powerful new way to operate AI systems, but it also introduces a set of hard, still-evolving problems. Many of the tools, processes and models borrowed from DevOps and MLOps don’t fully fit when agents are autonomous, stochastic and constantly interacting with tools, data and each other. Understanding the challenges and where the ecosystem is heading is key to building a realistic roadmap for AgentOps inside any organization.

Key challenges in AgentOps today

Complexity of agent design and orchestration

Modern agentic systems rarely consist of a single bot answering a single query. They often involve multiple agents, each with its own role, tools, memory scope and coordination rules. As soon as you introduce multistep workflows or multiagent crews, you have to manage:

- Dependencies between steps and agents

- Ordering and termination conditions

- Conflict resolution when agents disagree

- Emergent behaviors that were never explicitly designed

Keeping that orchestration understandable, debuggable and change-friendly is one of the hardest problems in AgentOps today.

Observability at scale

Agentic systems generate vastly more signals than traditional applications — prompts, intermediate reasoning, tool calls, retrieval traces, evaluations and user feedback, often spanning dozens of steps per session. This creates two immediate hurdles:

- Volume: Capturing and storing all relevant traces quickly becomes expensive and operationally heavy at scale.

- Coverage: Traditional logs and metrics miss the context that matters. Effective diagnosis requires visibility into reasoning paths, tool-call context, retrieved knowledge and evaluation outcomes.

The challenge is building observability that is detailed enough to explain agent behavior yet efficient enough to operate at enterprise scale — a balance that remains difficult for most organizations deploying autonomous agents.

Anomaly detection and root-cause analysis

In agentic systems, failures rarely have a single source. A poor response can stem from infrastructure issues, model behavior or orchestration logic, making diagnosis inherently multilayered. The same symptom might be caused by:

- System-level faults: API outages, latency spikes or dependency failures

- Model-level issues: hallucinations, reasoning errors or context misalignment

- Orchestration-level flaws: prompt design gaps, faulty planning or tool-call mistakes

Today, identifying anomalies across these layers — and determining which one actually caused the failure — still requires significant manual inspection of traces, logs and reasoning steps. Although research is advancing toward unified anomaly detection pipelines and automated Root Cause Analysis (RCA) engines for agentic workflows, achieving reliable, end-to-end root-cause attribution at scale remains an unsolved challenge.

Testing, validation and verification

Agentic systems break many of the assumptions traditional testing relies on. They’re nondeterministic, depend on external tools and evolving context, and can exhibit emergent behavior — especially in multiagent workflows. This makes conventional unit and integration tests insufficient. For example:

- The same input can lead to different outputs.

- Behavior depends on external tools, data freshness and long-running context.

- Multiagent workflows can produce emergent behaviors that were never specified in tests.

Teams need new testing strategies — such as scenario suites, golden tasks, replay-based validation and continuous evaluation — to gain confidence without expecting strict determinism. Formal verification remains extremely challenging outside narrow, safety-critical domains.
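
One way to test without expecting determinism is to run each golden task several times and require a minimum pass rate instead of a single exact match. The sketch below assumes an agent is any callable from prompt to text; `pass_rate`, `evaluate_suite` and the 0.9 threshold are illustrative choices, not a standard.

```python
from typing import Callable, Dict, Tuple

Agent = Callable[[str], str]
Check = Callable[[str], bool]


def pass_rate(agent: Agent, prompt: str, check: Check, runs: int = 20) -> float:
    """Run the same prompt several times and return the fraction that pass."""
    passed = sum(1 for _ in range(runs) if check(agent(prompt)))
    return passed / runs


def evaluate_suite(agent: Agent,
                   golden_tasks: Dict[str, Tuple[str, Check]],
                   min_pass_rate: float = 0.9,
                   runs: int = 20) -> Dict[str, bool]:
    """Mark each golden task as passing if it meets the minimum pass rate."""
    return {
        name: pass_rate(agent, prompt, check, runs) >= min_pass_rate
        for name, (prompt, check) in golden_tasks.items()
    }
```

Checks are written as predicates on the output (for example, "contains the expected figure") so that harmless variation in wording does not fail the test.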

Trust, explainability and governance

As agents take on higher-stakes decisions, simply “working most of the time” is not enough. Teams must be able to answer:

- Why did the agent choose this plan, tool or answer?

- Which data sources or knowledge bases influenced the decision?

- Which guardrails fired — or should have fired — but didn’t?

Without explainability and clear governance, it is hard to meet regulatory expectations, pass audits or convince risk, legal and compliance teams that agentic automation is safe to deploy at scale.

Security and robustness

Agentic systems introduce new security challenges because they autonomously reason, access tools, modify state and interact with external systems. This widens the attack surface:

- Prompt injection and tool misuse attacks

- Data exfiltration through tools, retrieval systems or unconstrained outputs

- Poisoned knowledge bases or tampered context

- Malicious or misconfigured external connectors

Since agents can independently invoke tools that perform real operations, a single unguarded step can escalate into a serious incident. Securing agentic workflows requires a blend of traditional application security, model-aware guardrails, strict tool-access controls and continuous monitoring of agent behavior to ensure every action remains safe, constrained and auditable.
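
Strict tool-access control can be sketched as a wrapper that every tool call must pass through: calls to tools outside an allowlist are blocked, and every attempt is recorded for audit. `GuardedToolbox` and `ToolNotPermitted` are hypothetical names for this example.

```python
from typing import Callable, Dict, List, Set, Tuple


class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


class GuardedToolbox:
    def __init__(self, tools: Dict[str, Callable], allowed: Set[str]) -> None:
        self._tools = tools
        self._allowed = allowed
        self.audit_log: List[Tuple[str, str, str]] = []

    def call(self, agent_id: str, tool_name: str, **kwargs):
        permitted = tool_name in self._allowed and tool_name in self._tools
        # Log every attempt, including blocked ones, before acting on it.
        self.audit_log.append(
            (agent_id, tool_name, "allowed" if permitted else "blocked"))
        if not permitted:
            raise ToolNotPermitted(tool_name)
        return self._tools[tool_name](**kwargs)
```

Logging blocked attempts matters as much as blocking them, since repeated attempts to reach a forbidden tool can be an early sign of prompt injection.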

Scalability, performance and resource management

Agents can be computationally heavy: long context windows, many tool calls, multiple model invocations per task and multiagent topologies all drive up latency and cost. At fleet scale, organizations must solve for:

- Intelligent routing and model selection

- Scheduling across CPU/GPU resources

- Cost guardrails and budget enforcement

- Throughput without runaway queues or degraded UX

Doing this efficiently — while still preserving rich observability and evaluation — remains a practical bottleneck for many teams.
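
As a simplified illustration of routing combined with a cost guardrail, the sketch below picks the cheapest model that can fit the prompt and whose estimated prompt cost stays within the remaining budget. The model names, context limits and prices are invented for the example, and the estimate ignores output tokens.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Model:
    name: str
    max_tokens: int
    usd_per_1k: float


# Hypothetical model catalog; names, limits and prices are made up.
MODELS = [
    Model("small", 8_000, 0.0005),
    Model("large", 128_000, 0.01),
]


def pick_model(prompt_tokens: int, remaining_budget_usd: float,
               models: List[Model] = MODELS) -> Optional[Model]:
    """Return the cheapest model that fits the prompt and the budget, if any."""
    candidates = [
        m for m in models
        if prompt_tokens <= m.max_tokens
        and prompt_tokens / 1000 * m.usd_per_1k <= remaining_budget_usd
    ]
    return min(candidates, key=lambda m: m.usd_per_1k) if candidates else None
```

Returning `None` instead of silently choosing an over-budget model forces the caller to decide whether to queue, truncate or escalate the task.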

Integration, data governance and lifecycle management

Most enterprises don’t start with a clean slate. Agents must:

- Integrate with legacy systems, proprietary APIs and heterogeneous data stores

- Respect existing IAM, data classification and retention policies

- Work with evolving schemas, changing source systems and shifting business rules

Meanwhile, the data that powers agents (training sets, retrieval corpora, logs and feedback) has its own lifecycle and governance requirements. Maintaining lineage, consent, and retention control while still moving quickly is a nontrivial AgentOps challenge.

Talent, process and standardization gaps

AgentOps sits at the intersection of ML, software engineering, DevOps, security and product. Very few organizations have all these capabilities in one place, and there are:

- Limited best-practice playbooks for multiagent systems

- Few widely adopted standards for agent interfaces, traces or policies

- Emerging but immature patterns for roles and responsibilities

As a result, many teams reinvent their own AgentOps stack and conventions, which slows down learning and interoperability.

Emerging trends and future directions in AgentOps

Despite current challenges, the AgentOps landscape is evolving quickly. Several clear trends are shaping its future trajectory.

Adaptive learning and policy enforcement

AgentOps is expected to move from static guardrails to adaptive policies that automatically pause, retrain or reroute agents when they exceed safety, cost or failure thresholds. Systems may learn from historical patterns to apply the right controls at the right time.

Self-healing agent systems

Future AgentOps tools may increasingly support self-stabilizing behavior, including:

- Rerouting tasks to backup agents when primary workflows degrade

- Automatically adjusting parameters or model choices based on performance

- Triggering fallbacks or rollback strategies without human intervention

Humans define the boundaries; the system handles operational recovery.
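
A basic version of rerouting to a backup agent is a retry-then-fallback loop: each agent gets a bounded number of attempts before the task moves to the next one. The sketch assumes agents are plain callables that raise on failure; `run_with_fallback` is a hypothetical helper.

```python
from typing import Callable, List, Tuple


def run_with_fallback(task: str, agents: List[Callable[[str], str]],
                      max_attempts_each: int = 2) -> Tuple[str, List[str]]:
    """Try agents in priority order; return the first result plus error notes."""
    errors: List[str] = []
    for agent in agents:
        name = getattr(agent, "__name__", repr(agent))
        for attempt in range(1, max_attempts_each + 1):
            try:
                return agent(task), errors
            except Exception as exc:  # broad on purpose: any failure triggers fallback
                errors.append(f"{name} attempt {attempt}: {exc}")
    # Every agent exhausted its attempts; surface the full failure history.
    raise RuntimeError("all agents failed: " + "; ".join(errors))
```

The attempt limit and the ordered agent list are the human-defined boundaries here; the loop only handles recovery inside them.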

Collaboration metrics in multiagent systems

As multiagent workflows become more common, operational visibility will expand into inter-agent coordination, including:

- Task delegation efficiency

- Communication breakdowns

- Human intervention patterns

- Supervisor–worker alignment

The focus will shift from measuring performance alone to measuring the quality of collaboration.

Stronger human–agent collaboration patterns

Most real-world systems are transitioning toward shared control rather than full autonomy. This includes:

- Human-in-the-loop checkpoints for high-risk actions

- Feedback loops where operator comments refine future behavior of agents

- UI signals that communicate agent confidence, uncertainty or pending actions

Future AgentOps may track not only agent performance but how humans and agents interact, intervene and co-manage tasks.

Industry-specific AgentOps frameworks

Highly regulated sectors will require domain-tailored operational foundations, including:

- Predefined guardrails for healthcare, finance, public sector, manufacturing and other sectors

- Compliance-aligned evaluation suites and monitoring rules

- Domain-specific audit trails and risk-scoring models

AgentOps is likely to mature into industry-specific operational playbooks.

External-agent interoperability and collaborative ecosystems

A major trend is the emergence of interoperable agent ecosystems where:

- Internal agents coordinate with external or partner agents

- Shared tools and context support cross-organizational workflows

- Standardized communication protocols enable negotiation and cooperation

Agents are evolving from isolated components into collaborative digital teams.

Advanced security and threat intelligence

Security will become deeply integrated into agent execution. As autonomy grows, organizations will likely adopt:

- Continuous oversight of data flow, retrieval sources and connector interactions

- Real-time detection of suspicious patterns such as prompt injection or tool misuse

- Automated containment mechanisms that pause, isolate or block unsafe behavior

Over time, AgentOps may blend traditional application security with model-aware threat detection for more holistic safety.

Endnote

Agentic AI is rapidly becoming a foundational enterprise capability, but its real value is unlocked only when autonomy is paired with discipline, transparency and control. AgentOps provides that foundation — transforming dynamic, tool-based agents into reliable, auditable systems that organizations can deploy with confidence. By unifying observability, evaluation, governance and security, AgentOps elevates autonomous agents from experimental prototypes to production-ready operational partners.

As AgentOps adoption accelerates, the organizations that invest early in this operational framework will be the ones that turn autonomy into a strategic advantage, where agents become trusted partners in scaling impact, efficiency and innovation. ZBrain Builder brings these principles to life with end-to-end tooling that enables teams to design, deploy, monitor and refine agents and multiagent systems with clarity and control.

Ready to accelerate your AgentOps journey? Discover how ZBrain can help you build, deploy, and manage autonomous AI agents with confidence and control.


Author’s Bio

An early adopter of emerging technologies, Akash leads innovation in AI, driving transformative solutions that enhance business operations. With his entrepreneurial spirit, technical acumen and passion for AI, Akash continues to explore new horizons, empowering businesses with solutions that enable seamless automation, intelligent decision-making, and next-generation digital experiences.

Frequently Asked Questions

What exactly is AgentOps, and why does it matter now?

AgentOps is the emerging discipline that governs how autonomous AI agents are operated, monitored and improved in real-world environments. It goes beyond traditional DevOps and MLOps by addressing the unique challenges of agents that reason independently, call external tools, adapt to context and act across enterprise systems. As organizations transition from simple chatbots to fully agentic workflows, the need for structured oversight becomes critical. AgentOps ensures these agentic workflows remain reliable, safe, compliant and cost-efficient as they take on increasingly important business functions.

How is AgentOps different from DevOps, MLOps or LLMOps?

DevOps, MLOps and LLMOps each solve important but distinct operational challenges. AgentOps sits above them, addressing the unique behavior of autonomous agents.

- DevOps: Focuses on reliable software delivery, CI/CD pipelines, infrastructure automation and system uptime. It assumes deterministic applications whose behavior is predictable once deployed.

- MLOps: Manages the lifecycle of machine learning models, including data pipelines, training, deployment, monitoring and model drift. It ensures models perform consistently but does not account for dynamic decision-making.

- LLMOps: Specializes in large language model operations, including prompt management, inference optimization, latency monitoring and cost control. It focuses on model-level performance, not autonomous reasoning or tool orchestration.

- AgentOps: Addresses an entirely different operational reality: autonomous agents that reason, plan and act across business systems. Agents interact with external tools, operate over long-running contexts and memories, collaborate with other agents, and produce nondeterministic behavior.

Traditional DevOps/MLOps/LLMOps workflows cannot capture reasoning traces, tool-call logic or multistep decision pathways. AgentOps introduces these capabilities — providing behavioral observability, guardrails, evaluation and governance necessary to run agents safely and predictably in production.

What are the core pillars of AgentOps?

The discipline is grounded in several foundational pillars:

- Observability: Capturing reasoning traces, tool calls, telemetry, context flow and end-to-end execution.

- Evaluation: Measuring output quality, faithfulness, latency, hallucination risks and task-level performance.

- Governance: Enforcing guardrails, policy compliance, auditability and safe execution boundaries.

- Feedback: Incorporating user signals, approvals, and corrections for continuous improvement.

- Resilience: Handling failures gracefully through retries, fallbacks and degradation strategies.

- Security: Managing access controls, tool permissions, data protection and detecting unsafe behavior.

- Versioning: Tracking prompts, flows, models and parameters to ensure reproducibility and safe iteration.

These pillars ensure agentic systems remain predictable, explainable and safe — despite their adaptive, nondeterministic nature.

What kinds of use cases benefit most from AgentOps?

AgentOps delivers the highest value in workflows where accuracy, compliance and cost discipline are essential. Customer support, financial analysis, FinOps automation, security and threat investigation workflows, R&D or legal knowledge copilots, and back-office automation all benefit significantly from structured oversight.

Multiagent systems coordinating complex business processes also rely heavily on AgentOps to monitor their interactions and maintain consistent, well-governed behavior. Any enterprise workflow where output quality, operational consistency or regulatory alignment matters stands to gain from a strong AgentOps foundation.

How does ZBrain Builder support AgentOps in practice?

ZBrain Builder serves as a unified environment for building, deploying, and orchestrating autonomous agents, giving enterprises the visibility, evaluation, and governance needed to operate agentic systems reliably at scale. It supports custom agents, pre-built agents and complex multi-agent crews while providing deep insight into behavior, performance, and cost.

ZBrain Builder enables AgentOps through:

- End-to-end orchestration: Low-code workflows for agents and coordinated multi-agent crews, with supervised delegation, tool integrations, and structured execution paths.

- Comprehensive monitoring dashboards: Real-time visibility into utilization, latency, satisfaction scores, token usage, session costs, and task health.

- Session-level traceability: Step-by-step traces of reasoning, tool calls, inputs, outputs, and execution order, making agent behavior fully transparent and diagnosable.

- Rich evaluation frameworks: A comprehensive set of metrics, including LLM-based metrics, non-LLM metrics, performance metrics, and LLM-as-a-judge assessments to measure output quality at scale.

- Operational oversight via task queues: Clear views of processing, pending, completed, and failed tasks to maintain workflow continuity and quickly identify issues.

- Enterprise-grade security and governance: RBAC, OAuth, least-privilege tool access, audit logs, and compliance-aligned controls that ensure safe, accountable execution.

- Versioning and safe iteration: Built-in version history for prompts and flows, enabling rollback, controlled experimentation, and reliable evolution of agent logic.

Together, these capabilities give organizations the visibility, control, and safeguards needed to operate autonomous agents confidently in production.

What metrics does ZBrain Builder use to evaluate and monitor agent performance?

ZBrain Builder’s Monitor module evaluates agents using four core categories of metrics, giving enterprises a structured and multi-dimensional view of output quality and operational reliability:

- LLM-based metrics: Measures such as relevancy and faithfulness that assess whether responses are contextually accurate and aligned with retrieved or provided data.

- Non-LLM metrics: Deterministic checks including exact match, F1 score, ROUGE-L, Levenshtein similarity, and required health checks.

- Performance metrics: Primarily response latency, capturing the total time taken by the LLM to return a response after receiving a query.

- LLM-as-a-judge metrics: Qualitative assessments (e.g., clarity, helpfulness, creativity) that emulate human judgment at scale.
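
To show what the deterministic checks measure, here is a sketch of three of them using their common textbook definitions: case-insensitive exact match, token-level F1 and a Levenshtein similarity normalized by the longer string. The normalization choices (lowercasing, whitespace tokenization) are assumptions, and ZBrain Builder's own implementations may differ.

```python
from collections import Counter


def exact_match(pred: str, ref: str) -> bool:
    """True when the strings are equal after trimming and lowercasing."""
    return pred.strip().lower() == ref.strip().lower()


def token_f1(pred: str, ref: str) -> float:
    """Harmonic mean of token precision and recall over whitespace tokens."""
    p, r = pred.lower().split(), ref.lower().split()
    overlap = sum((Counter(p) & Counter(r)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(p), overlap / len(r)
    return 2 * precision * recall / (precision + recall)


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (ca != cb)))   # substitution
        prev = curr
    return prev[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """Edit distance scaled to [0, 1], where 1.0 means identical strings."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest
```

For example, `token_f1("the cat sat", "the cat")` has precision 2/3 and recall 1, giving an F1 of 0.8, while exact match on the same pair is false. That difference is why these checks are usually reported together.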

In addition to the Monitor module’s metrics, ZBrain’s Performance Dashboards provide operational analytics that help teams evaluate efficiency and cost:

- Utilized time, average session time, and task duration trends to measure throughput and operational stability.

- Satisfaction scores to understand perceived quality and highlight recurring issues.

- Token usage and cost per session for proactive budget management and cost optimization.

- Session-level execution details, including status and timestamps, for deep traceability, debugging, and continuous improvement.

How does ZBrain Builder handle security and compliance for AgentOps?

ZBrain Builder is designed with enterprise-grade security at its core. It supports role-based access control with OAuth integration, applies least-privilege principles for tool and system access and ensures full tenant isolation and encryption for data protection. The platform adheres to ISO 27001:2022 and SOC 2 Type II–aligned controls, providing governance over availability, confidentiality and integrity.

Detailed audit logs capture agent actions, tool interactions and data flow, ensuring transparency and reviewability — an essential requirement for most industries.

How can organizations measure the ROI or success of their AgentOps initiatives?

Organizations measure AgentOps ROI by evaluating both operational improvements and strategic outcomes. A strong AgentOps foundation typically:

- Reduces the time required to complete tasks

- Lowers human intervention

- Decreases failure rates and rework

- Enhances compliance by enforcing policy-aligned behavior

- Optimizes token and API usage

- Improves decision quality by reducing hallucinations and policy violations

Because ZBrain Builder captures detailed performance, cost and quality metrics, enterprises can directly map agent behavior to KPIs such as efficiency, accuracy, compliance adherence and cost per task — making the business impact of AgentOps clear and quantifiable.

How do we get started with ZBrain™ for AI agent development?

Getting started with ZBrain for AI agent development is simple. You can contact the team at hello@zbrain.ai or submit an inquiry through http://zbrain.ai. Once connected, ZBrain’s experts review your current workflows, data landscape, and integration environment to understand your readiness and identify high-impact opportunities. From there, the team designs a tailored pilot: defining use cases, selecting the right agent architectures, and outlining the orchestration, monitoring, and governance model needed to deploy production-grade AI agents aligned with your operational and strategic goals.
